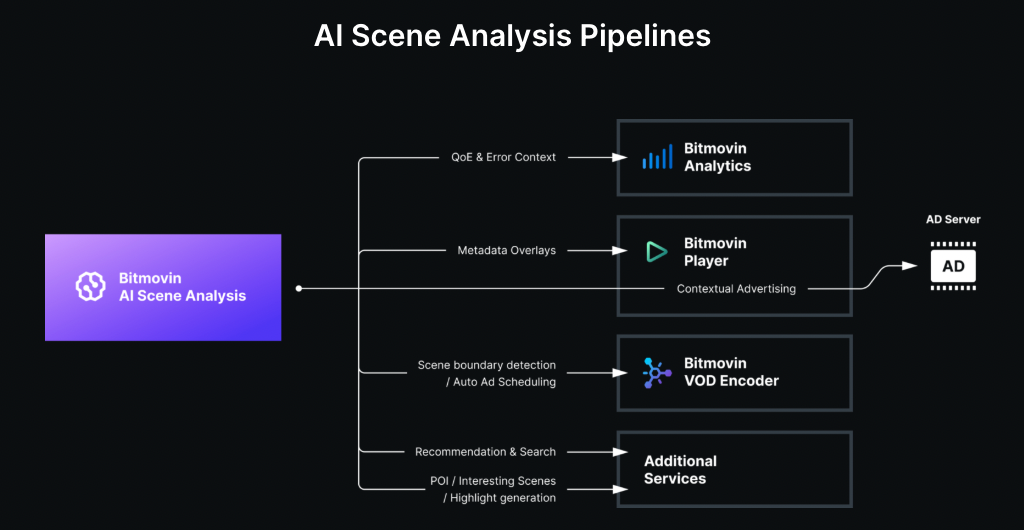

Bitmovin’s AI Scene Analysis is the latest addition to a suite of offerings that help streaming services maximize the value of their existing and upcoming content. AI has influenced streaming for years, from powering recommendations to optimizing encoding, but its role is now expanding deeper into the video workflow. One of the most impactful developments is scene-level intelligence: multimodal AI analysis detects scene boundaries, classifies content such as interviews or highlights, and generates metadata that is immediately usable across playback, ad targeting, and discovery. This enables platforms to automate thumbnail selection, personalize viewer experiences, and place ads more strategically. Beyond reducing reliance on manual tagging, scene-level intelligence adds precision, speed, and scalability. When it is enabled within Bitmovin’s VOD Encoder, streaming platforms gain smarter monetization opportunities, improved recommendations, and more engaging user experiences, all without overhauling their existing pipelines. Bitmovin’s innovation in this space was recognized at NAB 2025, where AI Scene Analysis was awarded “Best of Show.”

In this blog, we explore how AI is transforming the streaming pipeline through Bitmovin’s AI Scene Analysis, highlighting the key workflows it supports today and what’s coming soon. We also cover how these workflows support broader goals around viewer experience, monetization, and operational efficiency.

Read our case study with STIRR, a Thinking Media company.

Why Scene-Level Metadata Matters for Streaming Workflows

OTT platforms of all sizes process huge libraries of content, yet the metadata behind that content often stops at the asset level and lacks the detail needed to drive smarter workflows. Common data points like titles, genres, and overall descriptions provide only a surface-level understanding and do not reveal what is happening within the video. Without insight into where scenes change, which themes appear, or how sentiment shifts, it becomes difficult to automate playback experiences, deliver personalized recommendations, or place ads effectively. Many teams still rely on manual tagging or rigid rules, which are time-consuming to maintain and hard to scale. Scene-level metadata addresses this gap by identifying scene transitions, labeling scenes with keywords, objects, and atmospheric information, and generating a rich dataset that reflects the true shape and rhythm of the content. By surfacing scene-specific insights, it overcomes the limitations of asset-level metadata and makes it easier to engage distinct viewer segments without breaking context.

AI Scene Analysis turns scene-level intelligence into a simple JSON file that can be accessed via an API or written to a cloud storage location. Developers can use the scene analysis to enrich existing content datasets and integrate them into content management systems or search and recommendation engines. AI Scene Analysis builds on the pre-encode workflows of Bitmovin’s VOD Encoder, such as per-shot analysis, which already defines shot boundaries for Bitmovin’s existing Per-Title and upcoming Per-Shot encoding. Because the analysis runs as part of the VOD encoding process, it benefits from these established pipelines and can be enabled in existing VOD workflows without additional infrastructure. This makes it a scalable way to deliver rich, scene-level metadata that makes content more discoverable, engaging, and easier to monetize.
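As a rough illustration of enriching an existing catalog record, the sketch below rolls scene-level keywords up into asset-level fields. It assumes each scene arrives as a dict with `start` and `end` (seconds) and a `keywords` list; those field names, the `sceneCount`/`sceneKeywords` output fields, and the duration-weighted ranking are all hypothetical choices for illustration, not Bitmovin’s actual schema.

```python
from collections import Counter


def enrich_catalog_record(record: dict, scenes: list, top_n: int = 5) -> dict:
    """Return a copy of an asset-level catalog record with scene-derived fields.

    Hypothetical input shape: each scene dict has "start" and "end" in seconds
    and an optional "keywords" list. A keyword's weight is the total screen time
    of the scenes it appears in, so keywords that dominate the asset rank above
    ones that appear only briefly.
    """
    weights = Counter()
    for scene in scenes:
        duration = scene["end"] - scene["start"]
        # set() so a keyword repeated within one scene is only counted once
        for keyword in set(scene.get("keywords", [])):
            weights[keyword] += duration

    enriched = dict(record)  # shallow copy: leave the caller's record untouched
    enriched["sceneCount"] = len(scenes)  # hypothetical output field
    enriched["sceneKeywords"] = [kw for kw, _ in weights.most_common(top_n)]  # hypothetical
    return enriched
```

Weighting by duration is just one option; a CMS could equally store every keyword, or rank by the number of scenes a keyword appears in.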

Applying Scene Intelligence to Playback, Personalization, and Monetization

AI Scene Analysis analyzes each video to detect scene boundaries and classify scenes with structured metadata that enables automation across viewer experiences, discovery, and monetization.

AI Scene Analysis outputs a JSON object containing all of the scene metadata for an asset. Examples include:

- Natural scene transitions that power seamless, smarter ad breaks

- Scene-level keywords, mood tags, and IAB categories for contextual monetization

- Object, character, and location descriptions that provide in-depth context for scene-level search and discovery
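To show how such a JSON object might be consumed, here is a minimal parsing sketch. The payload below is invented for illustration: the field names (`scenes`, `start`, `end`, `keywords`, `mood`, `iabCategories`, `objects`) and the sample IAB codes are assumptions, not Bitmovin’s documented output format, so adjust the keys to match the real response.

```python
import json
from dataclasses import dataclass, field

# Hypothetical payload: every field name here is an illustrative assumption,
# not Bitmovin's documented schema.
SAMPLE = """
{
  "scenes": [
    {"id": 0, "start": 0.0, "end": 42.5,
     "keywords": ["interview", "studio"], "mood": "calm",
     "iabCategories": ["IAB12"], "objects": ["microphone"]},
    {"id": 1, "start": 42.5, "end": 97.0,
     "keywords": ["highlight", "goal"], "mood": "excited",
     "iabCategories": ["IAB17"], "objects": ["ball", "crowd"]}
  ]
}
"""


@dataclass
class Scene:
    id: int
    start: float  # seconds
    end: float    # seconds
    keywords: list[str] = field(default_factory=list)
    mood: str = ""
    iab_categories: list[str] = field(default_factory=list)
    objects: list[str] = field(default_factory=list)

    @property
    def duration(self) -> float:
        return self.end - self.start


def parse_scenes(payload: str) -> list[Scene]:
    """Parse the (assumed) scene-analysis JSON into Scene objects sorted by start time."""
    data = json.loads(payload)
    scenes = [
        Scene(
            id=s["id"],
            start=float(s["start"]),
            end=float(s["end"]),
            keywords=s.get("keywords", []),
            mood=s.get("mood", ""),
            iab_categories=s.get("iabCategories", []),
            objects=s.get("objects", []),
        )
        for s in data.get("scenes", [])
    ]
    return sorted(scenes, key=lambda sc: sc.start)


if __name__ == "__main__":
    for scene in parse_scenes(SAMPLE):
        print(scene.id, scene.start, scene.duration, scene.keywords)
```

Mapping the raw JSON into typed objects once keeps downstream code (chaptering, ad planning, search indexing) independent of the exact key names.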

Workflows currently enabled with Bitmovin’s AI Scene Analysis

Smarter Playback Experiences

Scene metadata helps developers create more intuitive and responsive playback features. When natural content transitions are mapped in the metadata, streaming platforms can use them to improve chaptering, thumbnails, and skip controls so they match how viewers expect to interact with their content. This replaces rigid, manual, or purely time-based logic with experiences that respond to what is happening on screen. Combined with Bitmovin’s Analytics, teams can also measure how viewers engage with these features and refine their playback strategies based on real usage patterns.
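A minimal sketch of turning scene boundaries into chapters, assuming scenes are available as `(start_seconds, end_seconds, label)` tuples. Scenes shorter than a minimum length are folded into the previous chapter (the 30-second threshold is a hypothetical choice), and the result is written as a WebVTT chapter track, the format HTML5 `<track kind="chapters">` elements use.

```python
from dataclasses import dataclass


@dataclass
class Chapter:
    start: float  # seconds
    end: float    # seconds
    title: str


def build_chapters(scenes, min_chapter: float = 30.0) -> list[Chapter]:
    """scenes: iterable of (start_s, end_s, label) tuples, sorted by start.

    A scene shorter than min_chapter extends the previous chapter instead of
    starting a new one. The very first scene always opens a chapter.
    """
    chapters: list[Chapter] = []
    for start, end, label in scenes:
        if chapters and (end - start) < min_chapter:
            chapters[-1].end = end  # fold the short scene into the previous chapter
        else:
            chapters.append(Chapter(start, end, label))
    return chapters


def vtt_time(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.ttt)."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d}.{ms:03d}"


def to_webvtt(chapters: list[Chapter]) -> str:
    """Serialize chapters as a WebVTT file: header, then one cue per chapter."""
    lines = ["WEBVTT", ""]
    for i, ch in enumerate(chapters, start=1):
        lines += [f"chapter-{i}", f"{vtt_time(ch.start)} --> {vtt_time(ch.end)}", ch.title, ""]
    return "\n".join(lines)
```

The same chapter list could drive skip controls (for example, jumping to the end of an intro chapter) without any time-based heuristics.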

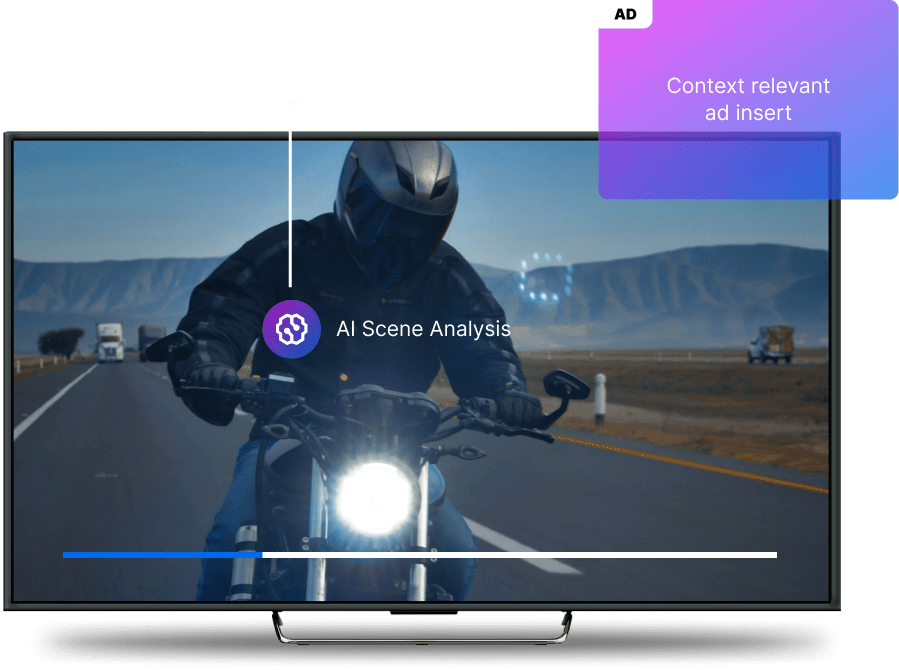

Context-Aware Ad Targeting

Ad experiences improve when they are tailored to the content being viewed. With scene-level metadata, platforms can insert ads at more natural moments, such as after an important conversation or before a major shift in sentiment. Bitmovin’s AI Scene Analysis provides automatic ad scheduling as part of the VOD encoding workflow by placing SCTE markers at detected scene transitions according to customer business logic, helping platforms create a natural rhythm for ad insertion throughout their content. AI Scene Analysis also supports AI Contextual Advertising by outputting IAB taxonomy metadata for each scene, which ad servers can use to enrich ad decisions and keep viewers immersed in the context of what they are watching. When combined with the Bitmovin Player, which handles dynamic ad execution and playback logic, this creates a complete workflow for delivering more relevant and less disruptive ad experiences. Scene-aware advertising can also help maintain brand safety by preventing certain ad topics from appearing after sensitive or inappropriate moments, such as a tragic news story.
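The “customer business logic” for ad scheduling is not spelled out here, so the following is a hypothetical example of such rules: only consider scene transitions, skip the opening minutes, enforce a minimum gap between breaks, and attach a category block list to any break that follows a scene flagged as sensitive. It only computes break times; it does not emit SCTE-35 markers, which the encoder produces in the workflow described above. The `sensitive` flag, the thresholds, and the category labels are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class SceneInfo:
    start: float             # seconds
    end: float               # seconds
    sensitive: bool = False  # hypothetical flag, e.g. derived from mood or IAB tags


@dataclass
class AdBreak:
    time: float  # seconds; where a cue marker would be placed
    blocked_categories: list[str] = field(default_factory=list)


def plan_ad_breaks(
    scenes: list[SceneInfo],
    min_gap: float = 300.0,            # hypothetical: at least 5 minutes between breaks
    first_break_after: float = 120.0,  # hypothetical: no break in the opening 2 minutes
    sensitive_block: tuple[str, ...] = (),
) -> list[AdBreak]:
    """Pick ad break times at scene transitions under simple, illustrative rules."""
    breaks: list[AdBreak] = []
    last_break = None
    # Each transition sits at the start of the next scene.
    for prev, nxt in zip(scenes, scenes[1:]):
        t = nxt.start
        if t < first_break_after:
            continue
        if last_break is not None and t - last_break < min_gap:
            continue
        # Brand safety: after a sensitive scene, carry a category block list
        # that an ad decision server could honor.
        blocked = list(sensitive_block) if prev.sensitive else []
        breaks.append(AdBreak(t, blocked))
        last_break = t
    return breaks
```

Because candidates are restricted to scene transitions, every break lands between scenes rather than mid-dialogue; the minimum gap then controls ad load.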

Improved Discovery and Content Operations

Scene-labeled video makes it easier for streaming services to search, recommend, and repurpose content. Viewers can find similar or relevant scenes based on keywords, objects, brands, or actors across an entire content library, and editorial teams can automate the generation of highlight clips, personalized thumbnails, previews, and other short-form assets. In AVOD and FAST environments, these insights also help automate packaging and scheduling decisions based on real viewer interest, and they can be generated at the same time as the assets are transcoded with Bitmovin’s VOD Encoder, without additional workflows or manual steps. Scene-level metadata also enhances content recommendation by allowing services to promote specific scenes that match user preferences, like a car chase or a dance number, even within titles that wouldn’t traditionally rank on those topics.
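Scene-level search across a library can be sketched as a small inverted index. This assumes, hypothetically, a library mapping asset IDs to lists of scene dicts with a `start` time plus `keywords` and `objects` lists; a production search engine would add ranking and fuzzy matching, but the lookup idea is the same.

```python
from collections import defaultdict


def build_index(library: dict, fields=("keywords", "objects")) -> dict:
    """Map each lowercased tag to the set of (asset_id, scene_index) pairs carrying it."""
    index = defaultdict(set)
    for asset_id, scenes in library.items():
        for i, scene in enumerate(scenes):
            for name in fields:
                for tag in scene.get(name, []):
                    index[tag.lower()].add((asset_id, i))
    return index


def search(index: dict, library: dict, terms) -> list:
    """Return (asset_id, scene_start) for scenes tagged with ALL terms, sorted."""
    wanted = [t.lower() for t in terms]
    if not wanted:
        return []
    # Intersect the posting sets; an unknown term yields an empty set.
    hits = set.intersection(*(index.get(t, set()) for t in wanted))
    return sorted((asset_id, library[asset_id][i]["start"]) for asset_id, i in hits)
```

Returning the scene start time alongside the asset ID lets a player deep-link straight to the matching moment, for example a car chase inside a title that is not tagged as action at the asset level.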

What’s Coming Next

AI Scene Analysis currently supports contextual playback experiences, ad placement and targeting, and discovery, all from metadata generated during the VOD encoding process. This data is available via API and integrates directly with players, CMS platforms, search and recommendation engines, and ad servers. Looking ahead, Bitmovin is expanding these capabilities to unlock new workflows that provide further value to customers and viewers across different content types and experiences. These new workflows will help customers focus on delivering key features, smarter experiences, and deeper personalization. The integration of AI Scene Analysis with Bitmovin VOD Encoding will continue to provide new benefits, going beyond the already industry-leading quality of video asset outputs. Planned capabilities currently in development include:

- Highlight generation workflow for automated clip creation

- Localization support with multi-language output

- Auto-thumbnail generation for scenes and personalized asset images

- Tailored analysis and outputs for sports and news content

- Theme and emotion tagging to detect tone for personalization or ad alignment

- Scene-level analysis for live streams, including auto ad placement and contextual advertising

- More partner integrations for ad and recommendation workflows

These upcoming workflows will follow the same approach as today’s implementation, offering low-friction, API-ready metadata that connects seamlessly with Bitmovin’s infrastructure. As these capabilities are released, AI Scene Analysis will evolve into a foundational tool for automating decisions and enhancing content performance at scale.

Transforming Video Workflows with Scene-Level Intelligence

AI Scene Analysis is designed to work with the Bitmovin VOD Encoder to enrich on-demand workflows with scene-level context. It helps video platforms gain a deeper understanding of their content by automatically identifying scenes, classifying them, and delivering structured metadata that supports smarter decisions across playback, ad delivery, and discovery. These insights require no additional tooling or changes to infrastructure, making it easy for teams to integrate AI into their existing workflows.

To learn more about how scene-level metadata can optimize your video operations, see how STIRR is using it today and explore our dedicated product page.