State of VVC Video Codec

So it’s happening. After their previous work on H.264/AVC and H.265/HEVC, the ITU-T Video Coding Experts Group (VCEG) and the ISO Moving Picture Experts Group (MPEG) have again joined forces to create another video codec named Versatile Video Coding (VVC).

The first goal for the VVC Video Codec was to significantly reduce bitrate expenditure while maintaining the same visual quality compared to HEVC. When considering PSNR as a quality metric, the reference VVC encoder outperforms the reference HEVC encoder by about 40% in BD-rate. However, some subjective tests were also performed which demonstrated overall bit-savings of closer to 50%. The second goal in the standardization was versatility. On this front, VVC facilitates coding and transport for a wide range of applications and content types such as conventional video streaming, optimizations for screen content, 360-degree video, as well as live and ultra-low delay applications.
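To put these percentages into perspective, here is a minimal sketch of what they would mean for a single rendition. The 10,000 kbit/s HEVC bitrate is a made-up example value and the helper name is hypothetical. Keep in mind that BD-rate is an average over a range of quality levels, so savings for an individual stream can differ.

```python
def bitrate_after_savings(reference_kbps: float, savings_percent: float) -> float:
    """Bitrate the newer codec would need for roughly the same quality."""
    if not 0 <= savings_percent < 100:
        raise ValueError("savings_percent must be in [0, 100)")
    return reference_kbps * (1 - savings_percent / 100)


hevc_kbps = 10_000  # hypothetical HEVC rendition, not a measured value
for label, savings in (("PSNR-based BD-rate", 40), ("subjective tests", 50)):
    vvc_kbps = bitrate_after_savings(hevc_kbps, savings)
    print(f"{label}: about {vvc_kbps:.0f} kbit/s instead of {hevc_kbps} kbit/s")
```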

Evolution or Revolution?

Since the development of VVC was started from the basis of HEVC, the first question to ask about VVC is: Is it an evolution based on the technologies that were used in the former coding standards, or is it a really new and revolutionary way of compressing video?

Answer: It’s more or less an evolution of the basic building blocks that were already used in HEVC and various other codecs before.

- It is still a hybrid, block-based video coding standard

- Most technologies are based on HEVC and are further refined and improved

- But there are also a lot of new coding tools that have not been seen before in the context of video coding

But how was this achieved? Like other video coding standards (e.g. AVC, HEVC, or AV1), VVC is based on the hybrid block-based video coding approach with conventional intra and inter prediction. As with former evolutions of video coding standards, these gains cannot be attributed to one single technique that was added in VVC but to smaller improvements in all the building blocks of the coding scheme. So here is a (not even close to complete) list of advancements in VVC:

- The maximum size of the Coding Tree Units (CTUs) that can be processed was increased to 128×128 pixels. The maximum block sizes for intra prediction and transforms were increased as well. This is particularly beneficial because the resolution of the content that is encoded keeps increasing.

- After the initial split, each CTU is further split into Coding Units. This splitting scheme is now much more flexible and allows for a wider variety of block sizes, both square and non-square.

- The number of directions that can be used in directional intra prediction was further increased. Intra prediction can now also be performed for non-square blocks.

- Many aspects of motion-compensated prediction (inter prediction) were improved as well, such as better motion vector prediction, decoder-side motion vector refinement, and affine motion compensation.

- More transform types are available: the encoder can choose separable transforms that combine variants of the Discrete Cosine Transform and the Discrete Sine Transform.

- The in-loop filters were improved as well with the addition of a new filter – Adaptive Loop Filter – for which the encoder can signal optimal parameters on a CTU basis.
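To get a feeling for what the larger CTU size means in practice, the following sketch counts how many CTUs are needed to cover a UHD frame. It compares the 128×128 maximum mentioned above with the 64×64 maximum of HEVC. The function name and the 3840×2160 frame size are chosen for illustration only.

```python
import math


def ctu_grid(width: int, height: int, ctu_size: int) -> tuple[int, int, int]:
    """Return (columns, rows, total) of CTUs needed to cover a frame.

    CTUs at the right and bottom border may only be partially filled,
    which is why the division is rounded up.
    """
    cols = math.ceil(width / ctu_size)
    rows = math.ceil(height / ctu_size)
    return cols, rows, cols * rows


for name, size in (("HEVC, 64x64 CTUs", 64), ("VVC, 128x128 CTUs", 128)):
    cols, rows, total = ctu_grid(3840, 2160, size)
    print(f"{name}: {cols} x {rows} = {total} CTUs per UHD frame")
```

With these numbers a UHD frame needs 2040 CTUs of size 64×64 but only 510 CTUs of size 128×128, so large uniform areas can be described with far fewer top-level blocks.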

As I already mentioned, this list is far from complete, and there are many more new and adapted technologies that make VVC so efficient. If you want to learn more, there is plenty of material out there. A good place to start is this very detailed overview paper.

What’s new in VVC?

So while the standard was finalized in July 2020, there is no widespread use of VVC in the market yet. But this was also not to be expected after such a short time. History has shown that adoption of a new video codec takes a long time and usually follows the same pattern: First, devices must support playback of the new standard. While software decoding on devices with high compute capabilities and no restrictions on power usage can be implemented quickly, most media is consumed on devices that rely on hardware decoders. And while it takes some time to develop and deploy new decoding hardware, it takes even longer until these new devices reach a critical mass of deployment “in the wild”. At the same time, encoding solutions must be developed, tuned, and deployed. Finally, there is the question of royalties that must be paid. Only when all of these issues are resolved does it make sense to deploy actual video streaming using the new video codec.

For VVC, we are now seeing the first practical implementations. There are some software-based encoder and decoder solutions, and the first vendors have released hardware decoders in their System on a Chip (SoC) devices. Furthermore, some vendors (like Bitmovin) have deployed the VVC video codec as an option in their transcoding-as-a-service products. On the patent side, there are a few players moving the codec into production, with MPEG LA introducing the first license in early 2021. While some patent pools are forming, it is still very much unknown how much the usage of VVC will cost. And in the face of the falling cost of bandwidth, this is the biggest problem of VVC. To put it simply: If the bitrate savings are not worth the price, it will not be a thing.

So as I mentioned, Bitmovin has already deployed VVC video encoding in its cloud-based transcoding solution. For this, we teamed up with the Fraunhofer Heinrich-Hertz-Institut (HHI) to integrate their software-based encoder VVenC, which is an open-source VVC encoder and is freely available on GitHub. While this is working great, there was no easy way to create a VVC video and watch it. This is where the vvDecPlayer project comes in.

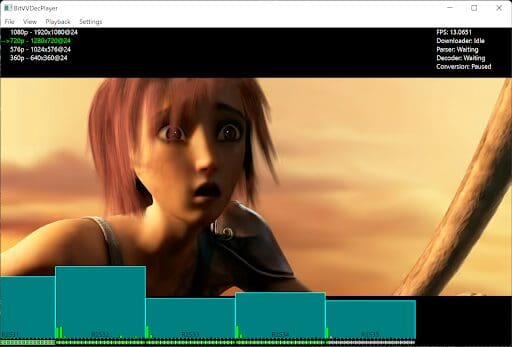

Introducing the VVC Video Player: BitvvDecPlayer

What I wanted to create was a simple demonstration player that was able to stream and decode a VVC video stream in real-time. All parts of the decoder are based on other open-source projects. Many of the rendering routines were copied from the YUView player (another project of mine) which in turn is using the Qt framework.

When opening a playlist in the vvDecPlayer, four threads are launched that build up the decoding pipeline:

- Download: This thread performs the download of the VVC video segments using HTTP. It has an internal buffer of 5 segments that it will try to keep full. The actual download was implemented using the Qt network module.

- Bitstream parsing: After the download is done, we perform parsing of the high-level syntax of the bitstream. This gives us information about the segment like resolution and more importantly the number of frames in the segment.

- Decode: The decode thread decodes the compressed bitstream into raw YUV frames. We are using the Fraunhofer VVdeC software decoder here. The decoder is quite fast and is able to do real-time decoding of UHD content, provided that enough CPU power is available. The decoded frames are all stored in temporary buffers.

- Conversion: While the decoded frames are in the YUV domain, we require RGB pixel data for display. This is done with a native C++ function that was copied from YUView.
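The player does the conversion step in C++. Purely as an illustration of the idea, here is a minimal Python sketch of a per-pixel YUV to RGB conversion. It assumes 8-bit limited-range samples and the BT.709 matrix; which matrix and range the player actually uses depends on the stream and is not covered here. The function names are hypothetical.

```python
def clamp8(value: float) -> int:
    """Round to the nearest integer and clamp to the 8-bit range."""
    return max(0, min(255, round(value)))


def yuv_to_rgb_bt709(y: int, cb: int, cr: int) -> tuple[int, int, int]:
    """Convert one 8-bit limited-range BT.709 YCbCr sample to 8-bit RGB.

    Assumption: luma uses the range 16..235 and chroma 16..240,
    with 128 as the neutral chroma value.
    """
    luma = 1.164383 * (y - 16)  # scale 219 luma steps to 255
    cb_c = cb - 128             # center chroma around zero
    cr_c = cr - 128
    r = luma + 1.792741 * cr_c
    g = luma - 0.213249 * cb_c - 0.532909 * cr_c
    b = luma + 2.112402 * cb_c
    return clamp8(r), clamp8(g), clamp8(b)


print(yuv_to_rgb_bt709(16, 128, 128))   # video black
print(yuv_to_rgb_bt709(235, 128, 128))  # video white
```

For 4:2:0 content, each chroma sample is shared by a 2×2 block of luma samples, so a real implementation also has to upsample the chroma planes (or reuse each chroma value four times) before applying the matrix.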

Finally, there is a timer running in the main thread that is trying to update the screen ‘FPS’ times per second by drawing the next converted RGB pixel buffer to the screen.
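The four-stage pipeline described above follows a classic producer/consumer pattern. The sketch below shows that pattern in Python with one thread per stage and bounded queues in between. It is not the player's actual code (which is written in C++ with Qt): the stage functions are placeholders that only wrap strings, and using a buffer of 5 items between every pair of stages is an assumption borrowed from the download buffer size.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream


def stage(work, inbox, outbox):
    """Apply `work` to every item from `inbox` and pass the result on."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # tell the next stage to stop as well
            return
        outbox.put(work(item))


def feed(items, outbox):
    """Push the input items into the first queue, then signal the end."""
    for item in items:
        outbox.put(item)
    outbox.put(SENTINEL)


def run_pipeline(segment_names, buffer_size=5):
    # Placeholder work functions. A real player would download via HTTP,
    # parse the high-level syntax, decode to YUV, and convert to RGB.
    stages = [
        lambda name: f"bytes({name})",   # download
        lambda data: f"parsed({data})",  # bitstream parsing
        lambda data: f"yuv({data})",     # decode
        lambda data: f"rgb({data})",     # conversion
    ]
    # Bounded queues: a full queue blocks the producer, so a fast stage
    # cannot run arbitrarily far ahead of a slow one.
    queues = [queue.Queue(maxsize=buffer_size) for _ in range(len(stages) + 1)]
    threads = [threading.Thread(target=feed, args=(segment_names, queues[0]))]
    for i, work in enumerate(stages):
        threads.append(
            threading.Thread(target=stage, args=(work, queues[i], queues[i + 1]))
        )
    for thread in threads:
        thread.start()

    frames = []  # stands in for the display timer consuming RGB buffers
    while True:
        item = queues[-1].get()
        if item is SENTINEL:
            break
        frames.append(item)
    for thread in threads:
        thread.join()
    return frames


print(run_pipeline(["segment-0", "segment-1"]))
```

Because every stage runs in a single thread and the queues are first-in, first-out, the items reach the consumer in the same order in which they were fed into the pipeline.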

The screenshot shows the player in action. On the top left, the available renditions are shown. The currently selected rendition is marked with an arrow and the currently visible rendition is highlighted in green. Use the up/down arrows to switch renditions. On the top right are the FPS counter and the status of the threads. The thread status display can be enabled in the menu or by pressing Ctrl+D. On the bottom is the progress graph (Ctrl+P), which shows many values from the decoder pipeline. The dark cyan blocks indicate the compressed data of each segment, where the height corresponds to the bitrate of the segment. Within each block, there is one bar per frame that indicates the bitrate of that frame. Below that, the status of each frame is shown: downloaded (grey), decoded (blue), or converted to RGB and ready for display (green). Playback can be paused using the space bar and switched to full-screen view by double-clicking the video.

If you want to give it a try, check out the project on GitHub. The only prerequisites you need are a compiler, CMake, and the Qt libraries. How to build really depends on the platform that you are compiling for. But it goes something like this:

- Get Qt, either from the Qt page or, if you are on Linux, probably from your distro’s package manager. On macOS, Homebrew is a good option.

- Check out the source code of the player and create a new directory to build in (e.g. ‘build’). Go into the directory and call qmake: ‘qmake ../’. After that, start the compilation. On Linux/macOS this is probably ‘make’, while on Windows it is likely ‘nmake’.

- Next, you also need the VVdeC decoder library. Compiling that is also easy. Get the sources from the GitHub repo and create a build directory. In there, call ‘cmake -DBUILD_SHARED_LIBS=1 ..’ and then ‘cmake --build . --config Release’. This should build the shared decoder library in the ‘bin’ or the ‘lib’ folder.

- Lastly, you can start the player. Go to ‘Settings->Select VVdeC library’ and browse to the shared VVdeC library you just built.

Now everything should be ready to stream some videos. The player comes with some sample streams provided by us and our encoder. Just select a sample from ‘File->Bitmovin Streams’. If you encounter a bug, please feel free to open a bug report on GitHub. To learn more about the BitvvDecPlayer, view our webinar with Fraunhofer HHI.

Conclusion

There is no doubt that VVC has some exciting potential and is already showing interesting results. Two years is a long time, and there are a lot of opportunities to further increase the coding performance and lower the encoding complexity, as well as to perfect some of the new tools that were adopted into the new standard. It will be exciting to check in again in 6 to 12 months to see how the implementations are developing.

If you would like to learn more about how the VVC video codec works, check out our introductory blog: What is VVC and how does it work

Open projects like these are one of the benefits of working as an engineer for Bitmovin. Come join our team to work on your own exciting standard-setting projects.
