This is a complete guide to Container File formats in 2022, created by some of the world’s leading video engineers and experts here at Bitmovin.

We’ve created this guide to give you everything you need to know about container files, from basic terminology to advanced deep-dives into different container file types.

You can go ahead and read through the whole guide or just jump straight to the chapter you’re interested in.

Let’s get started!

Chapters:

1. What is a Media Container?

2. Container File Terminology

3. Container Formats in OTT Media Delivery

4. Handling Container Formats in the Player

5. MP4 Container Formats

6. CMAF Container Formats

7. MPEG Transport Stream (MPEG-TS) Container Formats

8. Matroska (WebM) Container Formats

9. Container Format Resources

Container File Formats: A Note About Terminology

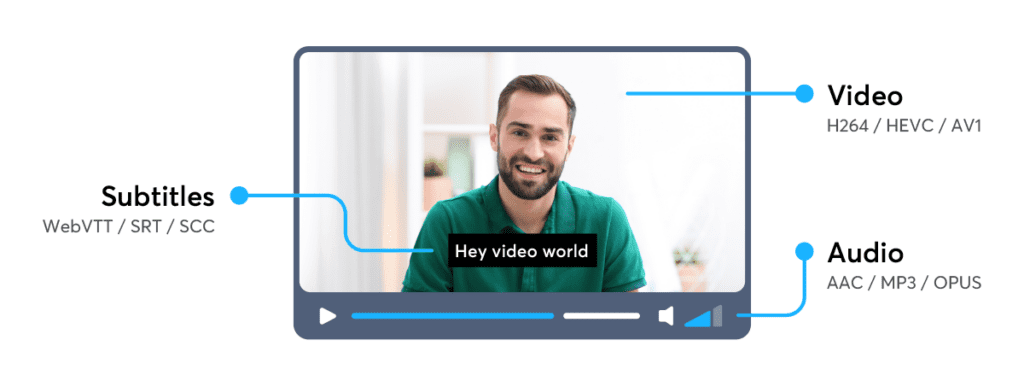

A codec is used to store a media signal in binary form.

Most codecs compress the raw media signal in a lossy way.

The most common media signals are video, audio and subtitles. A movie consists of different media signals. Most movies have audio and subtitles in addition to moving pictures.

Examples of video codecs are H.264, HEVC, VP9 and AV1. Common audio codecs are AAC, MP3 and Opus. There are many different codecs for each type of media signal.

A single media signal is also often called an Elementary Stream or just a Stream. People usually mean the same thing whether they refer to the video as a Video Stream, Codec Stream, Media Stream or H.264 Stream.

1. What is a Media Container?

Container File Format = meta file format specification describing how different multimedia data elements (streams) and metadata coexist in files.

A container file format provides the following:

- Stream Encapsulation

One or more media streams can exist in one single file.

- Timing/Synchronization

The container adds data on how the different streams in the file can be used together, e.g. the correct timestamps for synchronizing lip movement in a video stream with speech in the audio stream.

- Seeking

The container provides information about the points in time a viewer can jump to, e.g. when the viewer wants to watch only a part of a whole movie.

- Metadata

There are many flavours of metadata, and a container format makes it possible to add them to a movie, e.g. the language of an audio stream.

Sometimes subtitles are also considered metadata.

Common container file formats are MP4, MPEG2-TS and Matroska, each of which can carry different video and audio codecs.

Each container file format has its strengths and weaknesses, for instance in compatibility, suitability for streaming and size overhead.

2. Container File Terminology

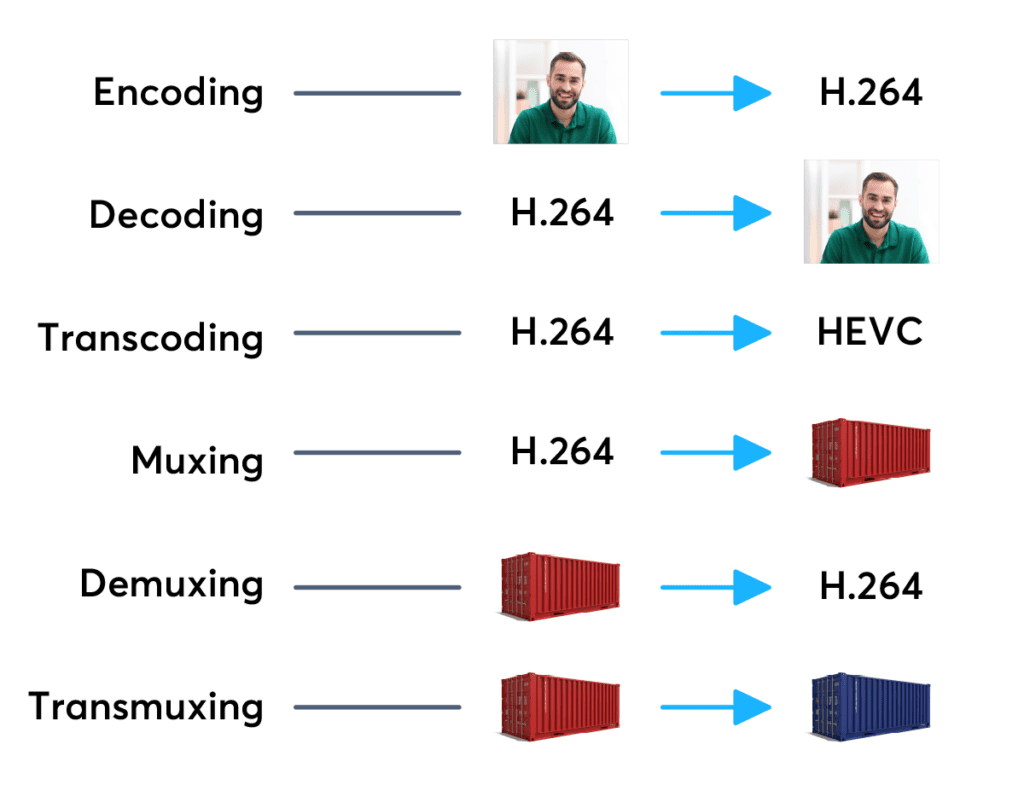

Encoding

The process of converting a raw media signal to a binary file of a codec.

For example encoding a series of raw images to the video codec H.264.

Encoding can also refer to the process of converting a very high quality raw video file into a mezzanine format for simpler sharing & transmission.

For example, take 60 seconds of video at 24 frames/sec, i.e. 1,440 frames. Stored as uncompressed 16-bit RGB frames of 12.4MB each, it totals 17.9GB.

Converted to 8-bit frames of 3.11MB each, the same 60 seconds at 24fps come to about 4.5GB in total.

That reduces the size of the video file by roughly 13.4GB!
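
As a quick sanity check, totals like these can be recomputed by multiplying the per-frame size by the number of frames. The sketch below uses the per-frame sizes quoted above; the decimal unit conversion (1GB = 1000MB) and the function name are our own assumptions.

```python
FPS = 24           # frames per second, as in the example above
DURATION_S = 60    # clip length in seconds
MB_PER_GB = 1000   # assumption: decimal units


def total_gb(frame_mb: float) -> float:
    """Total size in GB of the clip for a given per-frame size in MB."""
    return frame_mb * FPS * DURATION_S / MB_PER_GB


raw_gb = total_gb(12.4)         # uncompressed 16-bit RGB frames
converted_gb = total_gb(3.11)   # 8-bit frames
saved_gb = raw_gb - converted_gb

print(f"raw: {raw_gb:.1f} GB, converted: {converted_gb:.1f} GB, saved: {saved_gb:.1f} GB")
```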

Decoding

The opposite of encoding; decoding is the process of converting binary files back into raw media signals. For example, decoding an H.264 stream into viewable images.

Transcoding

The process of converting one codec to another (or the same) codec.

Both decoding & encoding are necessary steps to achieving a successful transcode.

Best described as: decoding the source codec stream and then encoding it again to a new target codec stream. Because encoding is typically lossy, each transcode can lose some quality; additional techniques like frame interpolation and upscaling can be applied to improve the perceived quality of the output.

Muxing

The process of adding one or more codec streams into a container format.

Demuxing

Extracting a codec stream from a container file format.

Transmuxing

Extracting streams from one container format and putting them in a different (or the same) container format.

Multiplexing

The process of interweaving audio and video into one data stream. For example, the elementary streams (audio and video) coming from the encoder are turned into Packetized Elementary Streams (PES) and then multiplexed into a Transport Stream (TS).

Demultiplexing

The reverse operation of multiplexing. This means extracting an elementary stream from a media container file. E.g.: Extracting the mp3 audio data from an mp4 music video.
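
To make multiplexing and demultiplexing more tangible, here is a minimal sketch that interleaves two time-ordered streams by timestamp and then separates them again. It ignores real container syntax entirely; the `Sample` type, the stream labels and the timestamps are hypothetical and only serve the illustration.

```python
import heapq
from typing import Iterable, NamedTuple


class Sample(NamedTuple):
    pts: float    # presentation timestamp in seconds
    stream: str   # hypothetical stream label, e.g. "video" or "audio"
    data: bytes   # encoded payload (placeholder bytes here)


def mux(*streams: Iterable[Sample]) -> list[Sample]:
    """Interleave already time-ordered streams into one sequence ordered by timestamp."""
    return list(heapq.merge(*streams, key=lambda sample: sample.pts))


def demux(container: Iterable[Sample], stream: str) -> list[Sample]:
    """Extract the samples of a single stream from the interleaved sequence."""
    return [sample for sample in container if sample.stream == stream]


video = [Sample(0.00, "video", b"frame0"), Sample(0.04, "video", b"frame1")]
audio = [Sample(0.01, "audio", b"a0"), Sample(0.03, "audio", b"a1"), Sample(0.05, "audio", b"a2")]

muxed = mux(video, audio)
```

Demuxing the result with `demux(muxed, "audio")` gives back the original audio samples, which is the round trip a real muxer and demuxer pair performs on actual container data.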

In-Band Events

This refers to metadata events that are associated with a specific timestamp. This usually means that these events are synchronized with video and audio streams. E.g.: These events can be used to trigger dynamic content replacement (ad-insertion) or the presentation of supplemental content.

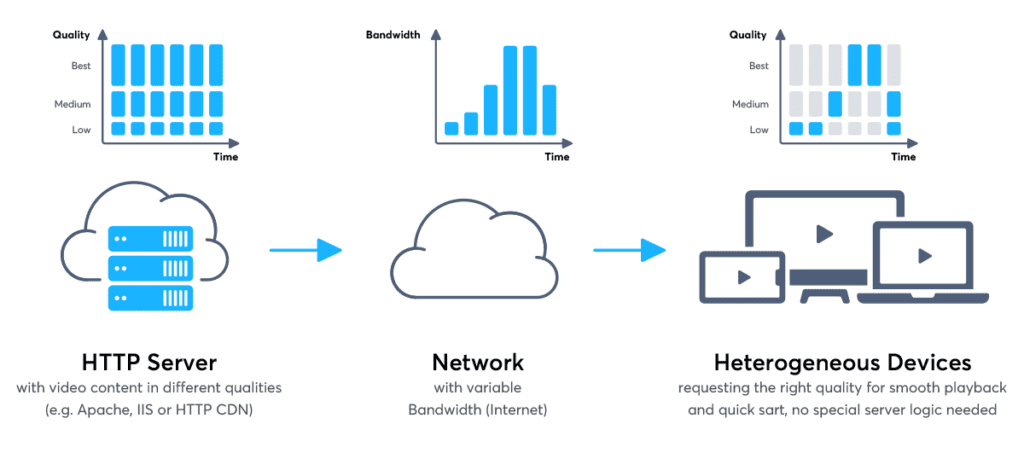

3. Container Formats in OTT Media Delivery

Container files are pretty much present anywhere where there’s digital media. For example, if you record a video using your smartphone the captured audio and video are both stored in one container file, e.g. an MP4 file.

Another example of container files in the wild is media streaming over the internet. From end to end, the main unit of media data being handled is the container.

At the end of content generation, the packager multiplexes the encoded media data into containers, which are then transported over the network as requested by the client device on the other end.

There, the container files are demuxed and the content is decoded and finally presented to the end user.

4. Handling Container Formats in the Player

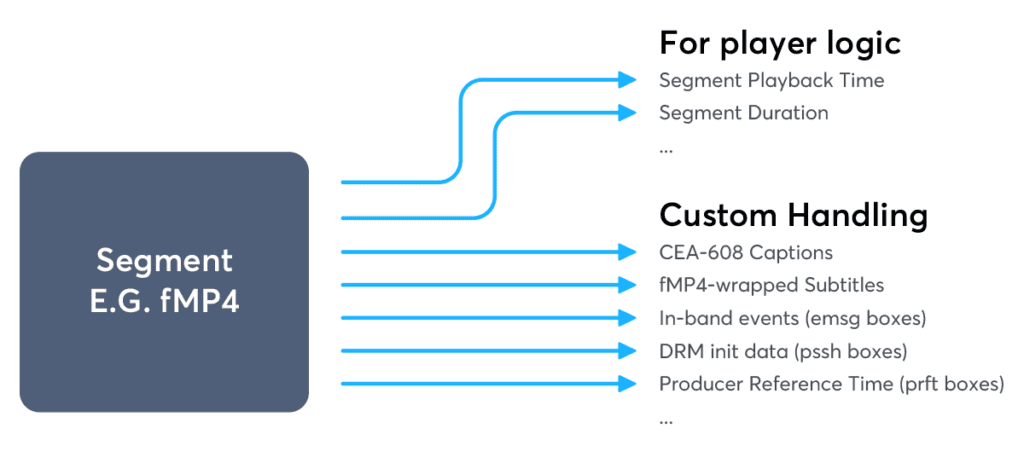

Metadata Extraction

At the client-side the player needs to extract some basic info about the media from the container, for example, the segment’s playback time, duration and codecs.

Additionally, there is often metadata present in the container that most browsers would not extract or handle out of the box.

The player implementation therefore has to handle this metadata itself.

Some examples are CEA-608/708 captions and in-band events (emsg boxes in fMP4), where the player has to parse the relevant data from the media container format, keep track of a timeline and process the data at the correct time (like displaying the right captions at the right time).
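
As an illustration of the parsing step, the following sketch reads the fields of an emsg box payload. The field order for versions 0 and 1 reflects our understanding of the MPEG-DASH event message box and should be checked against the specification before use; the `Emsg` class and the function names are our own, not part of any player API.

```python
import struct
from dataclasses import dataclass


@dataclass
class Emsg:
    scheme_id_uri: str
    value: str
    timescale: int
    time: int            # presentation_time_delta (v0) or presentation_time (v1)
    event_duration: int
    event_id: int
    message_data: bytes


def _read_cstring(buf: bytes, pos: int) -> tuple[str, int]:
    """Read a null-terminated UTF-8 string and return it with the next position."""
    end = buf.index(b"\x00", pos)
    return buf[pos:end].decode("utf-8"), end + 1


def parse_emsg(payload: bytes) -> Emsg:
    """Parse the content of an emsg box (everything after the 8-byte size/type header)."""
    version = payload[0]
    pos = 4  # skip version (1 byte) and flags (3 bytes)
    if version == 0:
        scheme, pos = _read_cstring(payload, pos)
        value, pos = _read_cstring(payload, pos)
        timescale, time, duration, event_id = struct.unpack_from(">IIII", payload, pos)
        pos += 16
    elif version == 1:
        timescale, time, duration, event_id = struct.unpack_from(">IQII", payload, pos)
        pos += 20
        scheme, pos = _read_cstring(payload, pos)
        value, pos = _read_cstring(payload, pos)
    else:
        raise ValueError(f"unsupported emsg version {version}")
    return Emsg(scheme, value, timescale, time, duration, event_id, payload[pos:])
```

Dividing `time` and `event_duration` by `timescale` yields seconds, which the player can then place on its own timeline to fire the event at the right moment.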

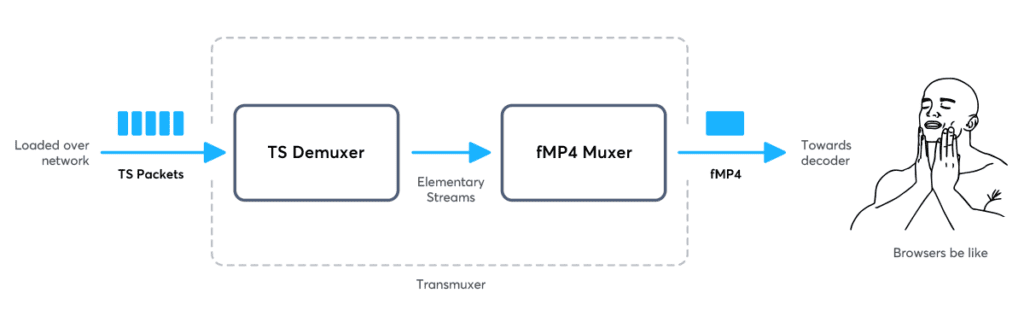

Client-side Transmuxing

Browsers often lack support for certain container formats. One prime example where this becomes a problem is Chrome, Firefox, Edge and IE not (properly) supporting the MPEG-TS container format.

The MPEG (Moving Picture Experts Group, a working group formed by ISO and IEC) Transport Stream format was specifically designed for Digital Video Broadcasting (DVB) applications.

With MPEG-TS still being a commonly used format the only solution is to convert the media from MPEG-TS to a container file format that these browsers do support (i.e. fMP4).

This conversion step can be done at the client right before forwarding the content to the browser’s media stack for demuxing and decoding. It basically includes demultiplexing the MPEG-TS and then re-multiplexing the elementary streams to fMP4.

This process is often referred to as transmuxing.

5. MP4 Container Formats

Overview of Standards

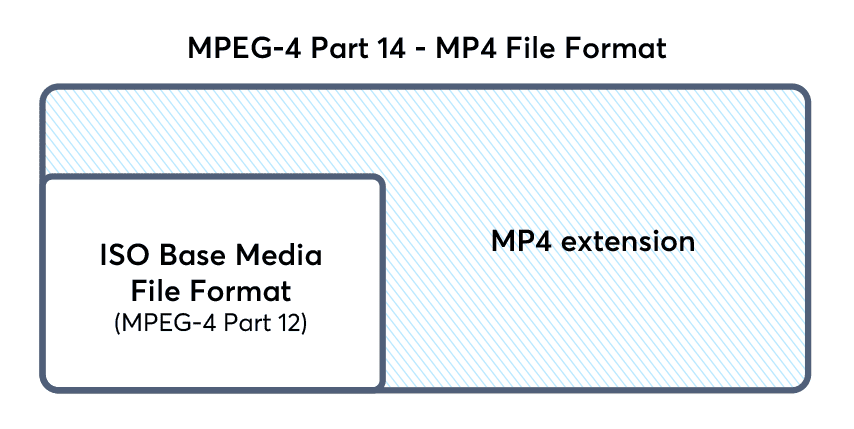

MPEG-4 Part 14 (MP4) is one of the most commonly used container formats and usually has the .mp4 file extension. It is used for Dynamic Adaptive Streaming over HTTP (DASH) and can also be used for Apple’s HLS streaming.

MP4 is based on the ISO Base Media File Format (MPEG-4 Part 12), which is based on the QuickTime File Format.

MPEG stands for Moving Picture Experts Group and is a cooperation of the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). MPEG was formed to set standards for audio and video compression and transmission. MPEG-4 specifies the coding of audio-visual objects.

MP4 supports a wide range of codecs. The most commonly used video codecs are H.264 and HEVC. AAC is the most commonly used audio codec. AAC is the successor of the famous MP3 audio codec.

ISO Base Media File Format

ISO Base Media File Format (ISOBMFF, MPEG-4 Part 12) is the base of the MP4 container format. ISOBMFF is a standard that defines time-based multimedia files.

Time-based multimedia usually refers to audio and video, often delivered as a steady stream. The format is designed to be flexible and easy to extend. It enables interchange, management, editing and presentation of multimedia data.

The basic building blocks of ISOBMFF are boxes, which are also called atoms. The standard defines the boxes using classes and an object-oriented approach.

Using inheritance, all boxes extend a base class Box and can be made specific in their purpose by adding new class properties.

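The base class:

Box defines only the fields every box shares: a 32-bit size and a four-character type code such as ftyp or moov. A size of 1 signals that a 64-bit largesize field follows the type, and a size of 0 means that the box extends to the end of the file.

Example FileTypeBox:

The FileTypeBox (ftyp) extends Box with a major brand, a minor version and a list of compatible brands. It is used to identify the purpose and usage of an ISOBMFF file and is often at the beginning of a file.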

A box can also have children and form a tree of boxes. For example, the MovieBox (moov) can have multiple TrackBoxes (trak). A track in the context of ISOBMFF is a single media stream, e.g. a MovieBox contains one trak box for video and one trak box for audio.

The binary codec data can be stored in a Media Data Box (mdat). A track usually references its binary codec data.

Fragmented MP4 (fMP4)

Using MP4 it is also possible to split a movie into multiple fragments. This has the advantage that, when using DASH or HLS, the player software only needs to download the fragments the viewer wants to watch.

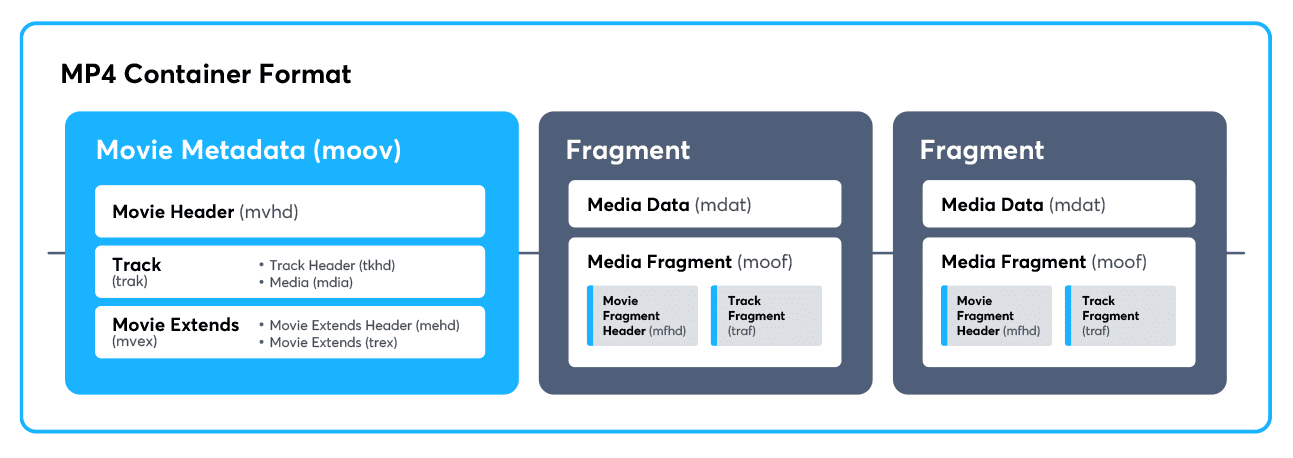

A fragmented MP4 file consists of the usual MovieBox with the TrackBoxes to signal which media streams are available. A Movie Extends Box (mvex) is used to signal that the movie is continued in the fragments.

Another advantage is that fragments can be stored in different files. A fragment consists of a Movie Fragment Box (moof), which is very similar to a Movie Box (moov).

It contains the information about the media streams contained in one single fragment. E.g. it contains the timestamp information for the 10 seconds of video, which are stored in the fragment.

Each fragment has its own Media Data (mdat) box.

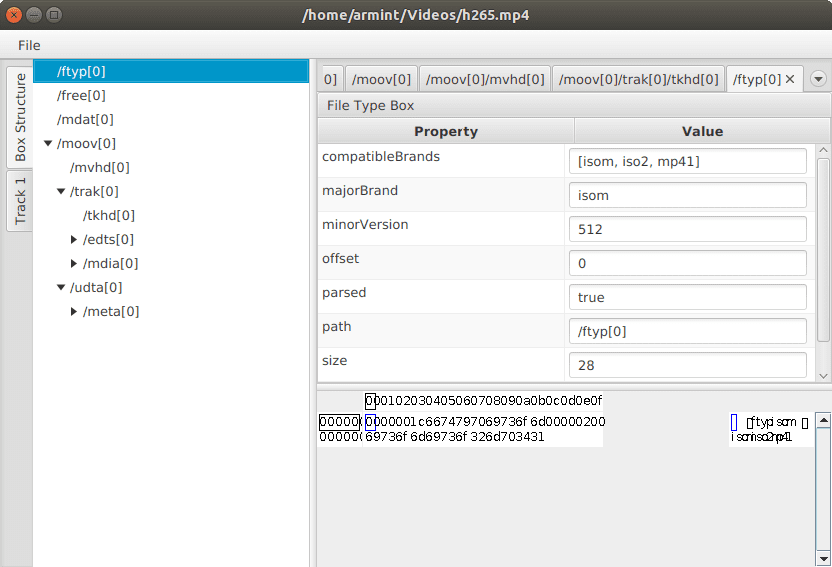

Debugging (f)MP4 files

Viewing the boxes (atoms) of an (f)MP4 file is often necessary to discover bugs and other unwanted configurations of specific boxes. To get a summary of what a media file contains the best tools are:

- MediaInfo (https://mediaarea.net/en/MediaInfo/Download)

- ffprobe, which is part of the ffmpeg binaries (https://ffbinaries.com/downloads)

These tools will however not show you the box structure of an (f)MP4 file. For this you could use the following tools:

- Boxdumper (https://github.com/l-smash/l-smash)

- IsoViewer (https://github.com/sannies/isoviewer)

- MP4Box.js (http://download.tsi.telecom-paristech.fr/gpac/mp4box.js/filereader.html)

- Mp4dump (https://www.bento4.com/)

(Screenshot of isoviewer)

6. CMAF Container Formats

MPEG-CMAF (Common Media Application Format)

Serving every platform as a content distributor can prove to be challenging as some platforms only support certain container formats.

To distribute a certain piece of content it can be necessary to produce and serve copies of the content in different container formats, e.g. MPEG-TS and fMP4. Clearly, this causes additional costs in infrastructure for content creation as well as storage costs for hosting multiple copies of the same content. On top of that, it also makes CDN caching less efficient.

MPEG-CMAF aims to solve these problems, not by creating yet another container format, but by converging to a single already existing container file format for OTT media delivery.

CMAF is closely related to fMP4, which should make the transition from fMP4 to CMAF require very little effort. Further, with Apple being involved in CMAF, the necessity of having content muxed in MPEG-TS to serve Apple devices should hopefully be a thing of the past and CMAF can be used everywhere.

With MPEG-CMAF, there are also improvements in the interoperability of DRM (Digital Rights Management) solutions by the use of MPEG-CENC (Common Encryption). It is theoretically possible to encrypt the content once and still use it with all the different state-of-the-art DRM systems.

However, Common Encryption does not mandate a single encryption mode: it defines several (for instance the AES-CTR based cenc and the AES-CBC based cbcs), and DRM systems such as Widevine and PlayReady have not always supported the same ones. The modes are not compatible with each other, but the DRM industry is slowly converging on a common one.

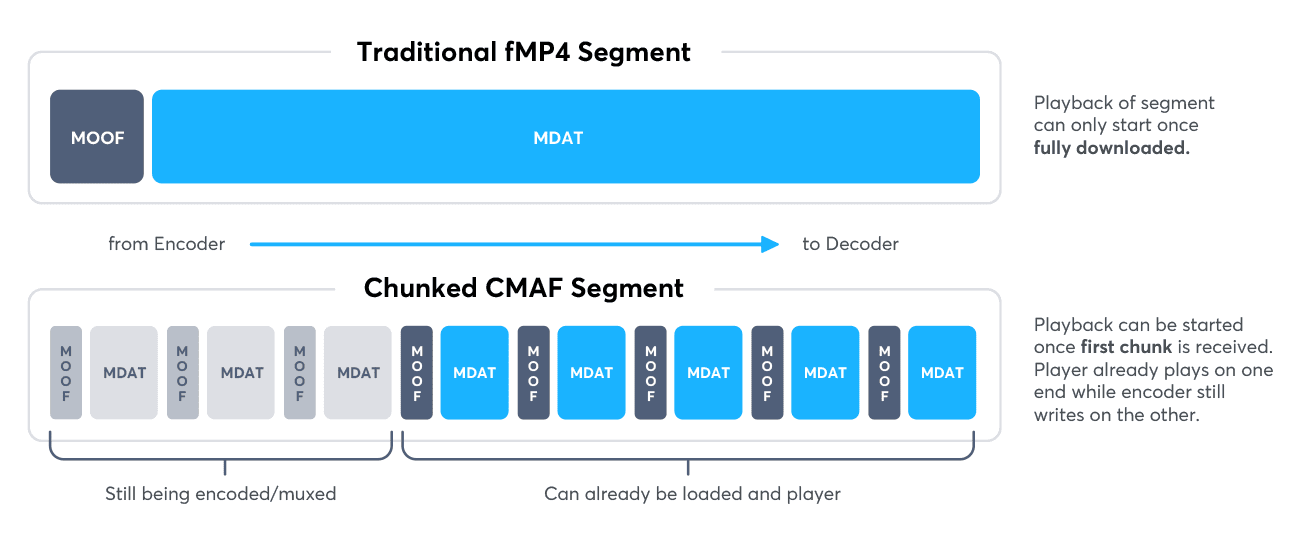

Chunked CMAF

One interesting feature of MPEG-CMAF is the possibility to encode segments in so-called CMAF chunks. Such chunked encoding of the content, in combination with delivering the media files using HTTP chunked transfer encoding, enables lower latencies in live streaming use cases than before.

In traditional fMP4 the whole segment had to be fully downloaded before it could be played out. With chunked encoding, any completely loaded chunks of the segment can already be decoded and played out while the rest of the segment is still loading.

As a result, the achievable live latency no longer depends on the segment duration, since the muxed chunks of an incomplete segment can already be loaded and played out at the client.
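
The idea of playing out complete chunks of a partially loaded segment can be sketched as follows. This assumes, for simplicity, that every chunk ends with an mdat box and that all boxes use plain 32-bit sizes; the function name is hypothetical and real players handle more cases.

```python
import struct


def split_complete_chunks(buffer: bytes) -> tuple[list[bytes], bytes]:
    """Split downloaded bytes into fully received chunks and the unfinished remainder.

    A chunk is taken to be every box up to and including the next complete mdat box.
    """
    chunks = []
    pos = 0
    chunk_start = 0
    while pos + 8 <= len(buffer):
        size, box_type = struct.unpack_from(">I4s", buffer, pos)
        if size < 8:
            raise ValueError("64-bit and open-ended box sizes are not handled in this sketch")
        if pos + size > len(buffer):
            break  # this box has not been fully downloaded yet
        pos += size
        if box_type == b"mdat":
            chunks.append(buffer[chunk_start:pos])
            chunk_start = pos
    return chunks, buffer[chunk_start:]
```

A player would call this every time new bytes arrive, hand the returned chunks to the decoder right away and keep the remainder to prepend to the next piece of downloaded data.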

7. MPEG Transport Stream (MPEG-TS) Container Formats

MPEG Transport Stream was standardized in MPEG-2 Part 1 and specifically designed for Digital Video Broadcasting (DVB) applications.

Compared to its counterpart, the MPEG Program Stream, which was aimed at storing media and found its use in applications like DVD, the MPEG Transport Stream is a more transport-oriented format.

An MPEG Transport Stream consists of small individual packets, which makes the stream more resilient: if a packet is corrupted or lost, only a small amount of data is affected.

Furthermore, the format is meant to be combined with Forward Error Correction (FEC) techniques, which broadcast systems such as DVB add on top of the TS packets to allow correction of transmission errors at the receiver.

The format was clearly designed for use on lossy transport channels.

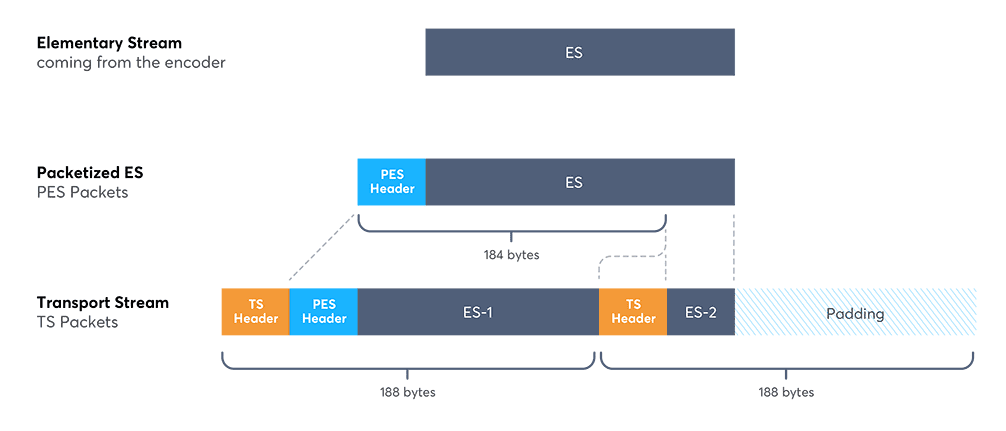

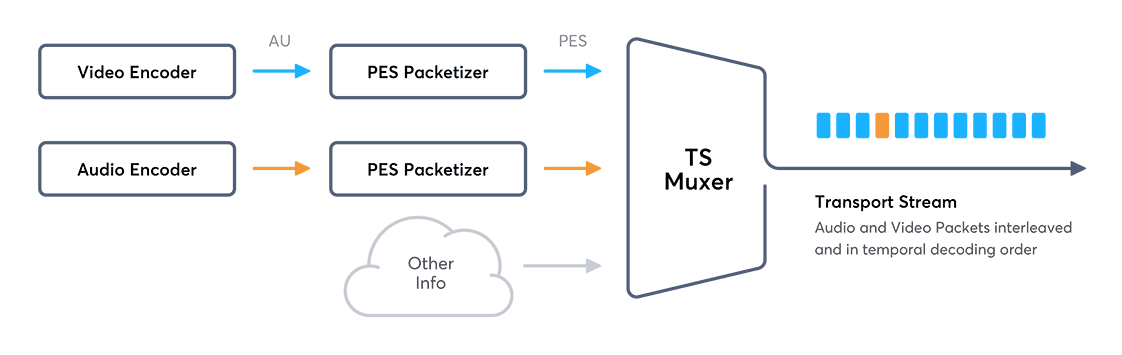

Muxing: ES → PES → TS

The elementary stream coming from the encoder is first turned into a packetized elementary stream (PES).

The PES header added in this step includes a stream identifier, the PES packet length and information about media timestamps, among other things.

In the next step, the PES is split up into 184-byte chunks and turned into the Transport Stream (TS) by adding a 4-byte header to each chunk.

The resulting TS consists of packets with a fixed length of 188 bytes. The header of every TS packet carries a PID (Packet Identifier), which associates the packet with the elementary stream it originated from; all packets of one elementary stream share the same PID.
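
The PES to TS step can be sketched in a few lines. This is a simplified illustration, not a complete muxer: it writes only the basic 4-byte header (sync byte, payload unit start flag, PID and continuity counter) and pads a final short chunk with adaptation field stuffing, a detail not covered in the text above. The function name and the input bytes are hypothetical.

```python
TS_PACKET_SIZE = 188
TS_PAYLOAD_SIZE = 184
SYNC_BYTE = 0x47


def packetize(pes: bytes, pid: int) -> list[bytes]:
    """Split one PES packet into 188-byte TS packets carrying the given PID."""
    packets = []
    continuity_counter = 0
    for offset in range(0, len(pes), TS_PAYLOAD_SIZE):
        chunk = pes[offset:offset + TS_PAYLOAD_SIZE]
        payload_unit_start = 1 if offset == 0 else 0
        stuffing = TS_PAYLOAD_SIZE - len(chunk)
        # 0b01 = payload only, 0b11 = adaptation field followed by payload
        adaptation_field_control = 0b11 if stuffing else 0b01
        header = bytes([
            SYNC_BYTE,
            (payload_unit_start << 6) | ((pid >> 8) & 0x1F),
            pid & 0xFF,
            (adaptation_field_control << 4) | continuity_counter,
        ])
        adaptation = b""
        if stuffing:
            field_length = stuffing - 1  # the length byte itself is not counted
            adaptation = bytes([field_length])
            if field_length > 0:
                # one flags byte (all zero) followed by 0xFF stuffing bytes
                adaptation += b"\x00" + b"\xff" * (field_length - 1)
        packets.append(header + adaptation + chunk)
        continuity_counter = (continuity_counter + 1) & 0x0F
    return packets
```

For a 400-byte PES packet this produces three TS packets: two with a full 184-byte payload and one that carries the remaining 32 bytes behind the stuffing.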

Muxing multiple Elementary Streams

One elementary stream represents either audio or video content. Given a video elementary stream, we would usually also have at least one audio elementary stream.

These related ES would then be muxed into the same transport stream, with a separate PID for each ES and its packets.
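
On the receiving side, the PID is what lets a demuxer tell the streams apart again. A minimal sketch, assuming the input is a byte string of whole, correctly aligned 188-byte packets (function names are our own):

```python
from collections import defaultdict

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def packet_pid(packet: bytes) -> int:
    """Extract the 13-bit PID from the header of a single TS packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not a valid TS packet")
    return ((packet[1] & 0x1F) << 8) | packet[2]


def split_by_pid(ts: bytes) -> dict[int, list[bytes]]:
    """Group the packets of a transport stream by PID, keeping their order."""
    streams: dict[int, list[bytes]] = defaultdict(list)
    for offset in range(0, len(ts), TS_PACKET_SIZE):
        packet = ts[offset:offset + TS_PACKET_SIZE]
        streams[packet_pid(packet)].append(packet)
    return dict(streams)
```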

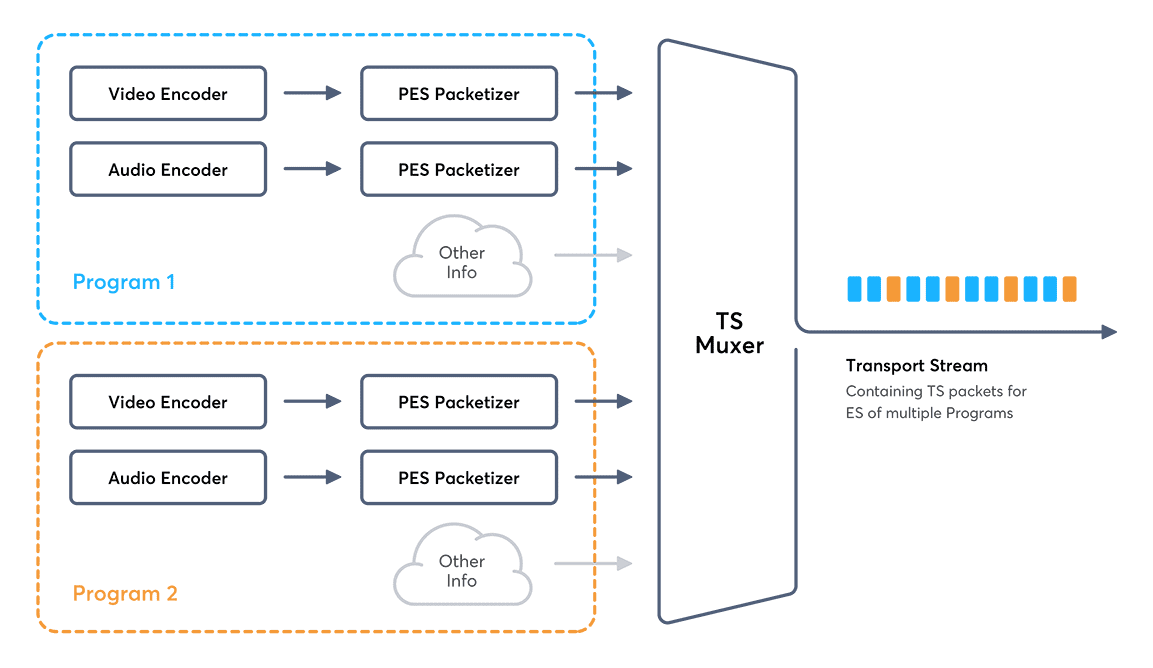

Muxing multiple Programs

MPEG-TS has the notion of programs. A program is basically a set of related elementary streams that belong together, e.g. video and the matching audio.

A single transport stream can carry multiple programs with each being, for example, a different TV channel.

Program Association

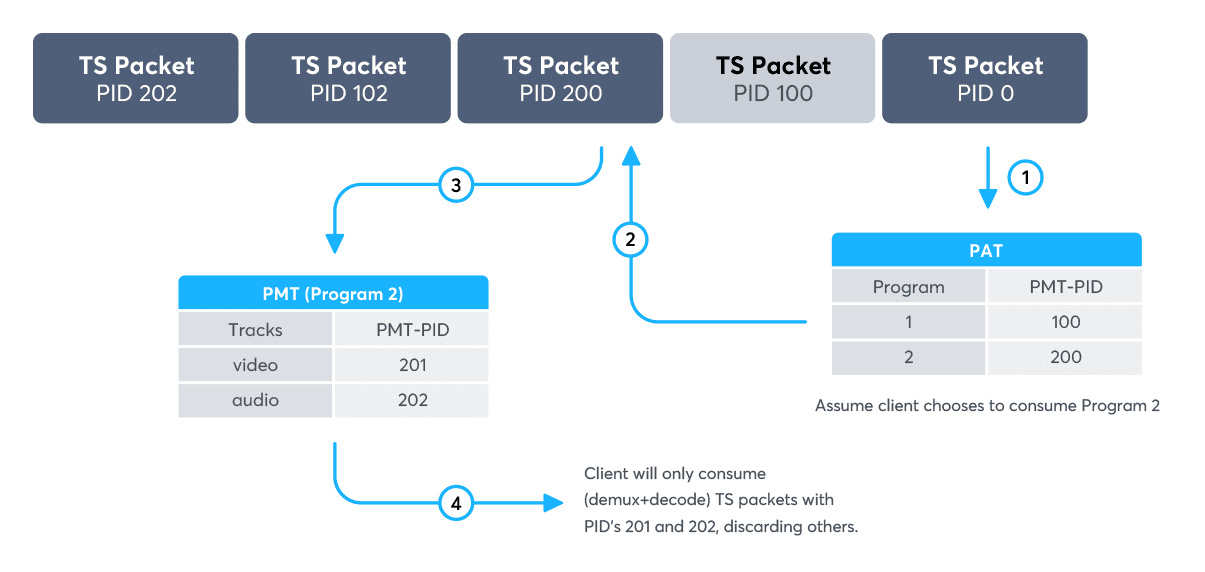

From a low-level perspective a transport stream is just a sequence of 188-byte TS packets.

As previously mentioned, there can be many programs with each having multiple elementary streams, but a client usually is only able to present one program at a time.

Therefore it must somehow know which packets to consume and which to discard upon receiving the transport stream. For this purpose there are two kinds of special packets:

- Program Association Table (PAT)

PAT packets have the reserved PID of 0 and contain the PIDs of the Program Map Tables of all programs in the transport stream.

- Program Map Table (PMT)

The PMT represents a single program and contains the PIDs of all elementary streams of the program.

To find the media tracks of a program, the player follows these steps:

- Inspect the TS Packet with PID 0, which contains the PAT

- Find the PMT-PID of the Program the player should play back in the PAT (in this example: 200)

- Get the TS Packet with the relevant PMT-PID, which contains the PMT (in this example: PID 200)

- The PMT contains the PIDs of all the media tracks that are part of the Program to play

A client receiving the transport stream would first read the first PAT packet it receives and pick the program to be presented depending on the user’s selection.

From the PAT it would get the selected program’s PMT which provides the program’s elementary streams and their PIDs.

Now the client would just filter for these PIDs, each representing a separate ES of the chosen program, and consume them, i.e. demux, decode and present them to the user.
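
The PAT and PMT lookup can be sketched as two small parsers. This is a simplified illustration that assumes each table fits into a single section, that the section bytes have already been extracted from the TS packet payload (starting at the table_id byte) and that the trailing CRC is not verified. The function names are our own.

```python
import struct

PAT_TABLE_ID = 0x00
PMT_TABLE_ID = 0x02


def _section_end(section: bytes) -> int:
    """Offset where the table body ends, i.e. where the 4-byte CRC begins."""
    section_length = struct.unpack_from(">H", section, 1)[0] & 0x0FFF
    return 3 + section_length - 4


def parse_pat(section: bytes) -> dict[int, int]:
    """Return a mapping of program number to PMT PID."""
    if section[0] != PAT_TABLE_ID:
        raise ValueError("not a PAT section")
    programs = {}
    for pos in range(8, _section_end(section), 4):
        program_number, pid = struct.unpack_from(">HH", section, pos)
        if program_number != 0:  # program 0 refers to the network PID, not a PMT
            programs[program_number] = pid & 0x1FFF
    return programs


def parse_pmt(section: bytes) -> dict[int, int]:
    """Return a mapping of elementary stream PID to stream type."""
    if section[0] != PMT_TABLE_ID:
        raise ValueError("not a PMT section")
    end = _section_end(section)
    program_info_length = struct.unpack_from(">H", section, 10)[0] & 0x0FFF
    pos = 12 + program_info_length  # skip program-level descriptors
    streams = {}
    while pos < end:
        stream_type = section[pos]
        pid = struct.unpack_from(">H", section, pos + 1)[0] & 0x1FFF
        es_info_length = struct.unpack_from(">H", section, pos + 3)[0] & 0x0FFF
        streams[pid] = stream_type
        pos += 5 + es_info_length  # skip stream-level descriptors
    return streams
```

With the PMT PID from `parse_pat` and the elementary stream PIDs from `parse_pmt`, the client knows which packets to keep. Stream type values such as 0x1B (H.264 video) and 0x0F (AAC audio in ADTS) tell it which decoder each PID needs.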

OTT-specific aspects and Conclusion

The previous explanations were very broadcast-oriented. In OTT, however, there are special considerations to be made.

OTT clients have network connections that are unstable and do not have a guaranteed bandwidth which requires that only content that will be presented should be loaded.

Given that a client is only able to present a single program at a time, having multiple programs in the same transport stream and loading them would be a waste of bandwidth that could be better used otherwise, e.g. for quality adaptation.

So for OTT we would never have multiple programs in one transport stream. For multi-audio content, similar arguments apply to multiplexing elementary streams, i.e. each audio stream should be multiplexed into its own transport stream.

MPEG-TS is still widely used for OTT, especially when targeting the Apple ecosystem.

A downside of MPEG-TS is that due to the small packet size and all the packet headers the [overhead is higher](https://www.slideshare.net/bitmovin/bitmovin-segmentlength-50056628/11) compared to fMP4. The 4-byte header on every 188-byte packet alone accounts for about 2% of the stream.

MPEG-TS is transport-oriented and includes considerations for lossy communication channels which is not exactly a good fit for HTTP-based media delivery where transport loss is already handled by the network stack.

Debugging and Inspecting MPEG-TS

- http://www.digitalekabeltelevisie.nl/dvb_inspector/ (GUI, open source)

- http://thumb.co.il/ (GUI/Web, open source)

- http://dvbsnoop.sourceforge.net/ (CLI, open source)

- https://github.com/tsduck/tsduck (CLI, open source)

8. Matroska (WebM) Container Formats

Matroska is a free and open-standard container format. It is based on the Extensible Binary Meta Language (EBML), which is basically XML in binary form.

This fact makes the standard easy to extend, so virtually any codec can be supported.
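
To give a feel for EBML, here is a minimal sketch of how an element header is read. Every EBML element consists of an ID, a data size and the data itself, and both the ID and the size are stored as variable-length integers whose length is signalled by the position of the first set bit. The function names and the hand-built sample bytes are our own; unknown-size elements are not handled.

```python
def read_vint(buf: bytes, pos: int, keep_marker: bool = False) -> tuple[int, int]:
    """Read a variable-length integer and return (value, next position).

    Element IDs keep the length marker bit, data sizes have it removed.
    """
    first = buf[pos]
    if first == 0:
        raise ValueError("invalid or unsupported variable-length integer")
    length = 9 - first.bit_length()  # leading zero bits + 1
    if pos + length > len(buf):
        raise ValueError("truncated variable-length integer")
    value = first if keep_marker else first & (0xFF >> length)
    for byte in buf[pos + 1:pos + length]:
        value = (value << 8) | byte
    return value, pos + length


def read_element_header(buf: bytes, pos: int) -> tuple[int, int, int]:
    """Return (element ID, data size, offset of the element data)."""
    element_id, pos = read_vint(buf, pos, keep_marker=True)
    size, pos = read_vint(buf, pos)
    return element_id, size, pos


# Hand-built sample: an EBML header element (ID 0x1A45DFA3) holding one
# DocType element (ID 0x4282) with the value "webm".
sample = bytes.fromhex("1A45DFA3 87 4282 84") + b"webm"

header_id, header_size, header_data = read_element_header(sample, 0)
doctype_id, doctype_size, doctype_data = read_element_header(sample, header_data)
doctype = sample[doctype_data:doctype_data + doctype_size].decode("ascii")
```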

WebM

WebM is based on the Matroska container format.

The development was mainly driven by Google to have a free and open alternative to MP4 and MPEG2-TS to be used on the web.

It was also developed to support open and free codecs such as VP8 and VP9 for video and Opus and Vorbis for audio.

It is also possible to use WebM with DASH to stream VP9 and Opus over the web.

Debugging Matroska/WebM

The best tool to debug and view the contents of a Matroska or WebM file is mkvinfo (https://mkvtoolnix.download/).

9. Container Format Resources:

Video technology guides and articles

- Back to Basics: Guide to the HTML5 Video Tag

- What is a VoD Platform? A comprehensive guide to Video on Demand (VOD)

- Video Technology [2023]: Top 5 video technology trends

- HEVC vs VP9: Modern codecs comparison

- What is the AV1 Codec?

- Video Compression: Encoding Definition and Adaptive Bitrate

- What is adaptive bitrate streaming

- MP4 vs MKV: Battle of the Video Formats

- AVOD vs SVOD; the “fall” of SVOD and Rise of AVOD & TVOD (Video Tech Trends)

- MPEG-DASH (Dynamic Adaptive Streaming over HTTP)

- Quality of Experience (QoE) in Video Technology [2023 Guide]