In this blog post we discuss items from the 115th MPEG meeting that are specifically relevant for adaptive streaming, including SAND, Server Push and WebSocket, CMAF, and more…

The 115th MPEG meeting was held in Geneva, Switzerland and its press release highlights the following aspects:

- MPEG issues Genomic Information Compression and Storage joint Call for Proposals in conjunction with ISO/TC 276/WG 5

- Plug-in free decoding of 3D objects within Web browsers

- MPEG-H 3D Audio AMD 3 reaches FDAM status

- Common Media Application Format for Dynamic Adaptive Streaming Applications

- 4th edition of AVC/HEVC file format

The actual press release can be found here.

What’s in this Post?

In this blog post I want to cover some other topics discussed in the meeting. These topics are specifically relevant for adaptive media streaming, namely:

- recent developments in MPEG-DASH,

- what’s up with the common media application format (CMAF),

- MPEG-VR (virtual reality), and

- the MPEG roadmap/vision for the future.

MPEG-DASH Server and Network Assisted DASH (SAND): ISO/IEC 23009-5

Part 5 of MPEG-DASH, referred to as SAND (server and network-assisted DASH), has reached FDIS. This work item started some time ago at a public MPEG workshop during the 105th MPEG meeting in Vienna. The goal of this part of MPEG-DASH is to enhance the delivery of DASH content by introducing messages between DASH clients and network elements, or between various network elements. These messages are meant to improve the efficiency of streaming sessions by providing information about real-time operational characteristics of networks, servers, proxies, caches, and CDNs, as well as the DASH client’s performance and status.

This specification defines the following:

(a) the SAND architecture, which identifies the SAND network elements and the nature of SAND messages exchanged among them,

(b) the semantics of SAND messages exchanged between the network elements present in the SAND architecture,

(c) a recommended encoding scheme for the SAND messages, and

(d) the minimum required to implement a SAND message delivery protocol.

The way that this information is to be utilized is deliberately not defined within the standard and left open for (industry) competition (or other standards developing organizations). In any case, there’s plenty of room for research activities around the topic of SAND.
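To make the idea of such messages a bit more concrete, here is a minimal sketch in Python of a client reporting its status to a network element. Everything in it is a hypothetical illustration: the class name `ClientStatusMessage`, its fields, the JSON encoding, and the `should_prefetch` rule are assumptions made for this post, not the message set or the recommended encoding defined in ISO/IEC 23009-5.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ClientStatusMessage:
    """Hypothetical status report from a DASH client (not a normative SAND message)."""
    session_id: str
    buffer_level_ms: int
    measured_throughput_kbps: int
    next_segment_url: str


def encode(message: ClientStatusMessage) -> str:
    # JSON is used here for readability only; it is an assumption of this sketch.
    return json.dumps(asdict(message), sort_keys=True)


def decode(payload: str) -> ClientStatusMessage:
    # Rebuild the message from its JSON form.
    return ClientStatusMessage(**json.loads(payload))


def should_prefetch(message: ClientStatusMessage, low_buffer_ms: int = 4000) -> bool:
    # One conceivable use of the information by a cache: prefetch the next
    # segment when the client's buffer runs low. The standard deliberately
    # does not prescribe such behavior; the threshold is made up.
    return message.buffer_level_ms < low_buffer_ms


status = ClientStatusMessage(
    session_id="session-1",
    buffer_level_ms=2500,
    measured_throughput_kbps=3200,
    next_segment_url="https://example.com/video/seg-7.m4s",
)
wire_format = encode(status)
print(wire_format)
print("prefetch next segment:", should_prefetch(decode(wire_format)))
```

The decision rule is exactly the kind of logic that is left to implementers, which is why it lives in a separate function from the message itself.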

MPEG-DASH with Server Push and WebSockets: ISO/IEC 23009-6

Part 6 of MPEG-DASH reached DIS stage and deals with server push and WebSocket, i.e., it specifies the carriage of MPEG-DASH media presentations over full-duplex HTTP-compatible protocols, particularly HTTP/2 and WebSocket. The specification comes with a set of generic definitions for which bindings are defined, allowing its usage in various formats. Currently, bindings are defined for HTTP/2 and WebSocket.

For the former, the push policy has to be defined as an HTTP header extension, whereas the latter requires the definition of a DASH subprotocol. Luckily, these are the preferred extension mechanisms for both HTTP/2 and WebSocket and, thus, interoperability is provided. Whether or not the industry will adopt these extensions cannot be answered right now, but I would recommend keeping an eye on this, and there are certainly multiple research topics worth exploring in the future.
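As a rough illustration of these two extension points, the following Python sketch builds the request headers a client might send in each case. The `Sec-WebSocket-*`, `Upgrade`, and `Connection` headers are the standard WebSocket handshake headers from RFC 6455. The header name `Accept-Push-Policy`, the policy identifier `urn:example:dash:push-next`, and the subprotocol name `dash.example` are placeholders assumed for this example; the normative names and syntax are those given in ISO/IEC 23009-6.

```python
import base64
import os
from typing import Dict, Optional


def push_policy_request_headers(policy: str, parameter: Optional[str] = None) -> Dict[str, str]:
    """Headers asking an HTTP/2 server to apply a push policy (names are assumptions)."""
    value = f'"{policy}"'
    if parameter is not None:
        # Hypothetical policy parameter, e.g. how many segments to push.
        value += f"; {parameter}"
    return {"Accept-Push-Policy": value}


def websocket_handshake_headers(subprotocol: str) -> Dict[str, str]:
    """Client handshake headers (RFC 6455) requesting a given subprotocol."""
    # The key is a base64-encoded random 16-byte nonce, as required by RFC 6455.
    key = base64.b64encode(os.urandom(16)).decode("ascii")
    return {
        "Upgrade": "websocket",
        "Connection": "Upgrade",
        "Sec-WebSocket-Version": "13",
        "Sec-WebSocket-Key": key,
        "Sec-WebSocket-Protocol": subprotocol,
    }


# HTTP/2 case: request a segment and ask for the following ones to be pushed.
print(push_policy_request_headers("urn:example:dash:push-next", "3"))

# WebSocket case: open a connection and negotiate a (placeholder) DASH subprotocol.
print(websocket_handshake_headers("dash.example"))
```

In both cases the extension rides on a mechanism the base protocol already provides (an extra header, a negotiated subprotocol), so a server that does not understand it can simply ignore or decline it.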

To conclude the recent MPEG-DASH developments, the DASH-IF recently established the Excellence in DASH Award at ACM MMSys’16 and the winners are presented here (including some of the recent developments described in this blog post).

Common Media Application Format (CMAF): ISO/IEC 23000-19

The goal of CMAF is to enable application consortia to reference a single MPEG specification (i.e., a “common media format”) that would allow a single media encoding to be used across many applications and devices. Therefore, CMAF defines the encoding and packaging of segmented media objects for delivery and decoding on end-user devices in adaptive multimedia presentations. This sounds very familiar and reminds us a bit of what the DASH-IF is doing with its interoperability points. One of the goals of CMAF is to integrate HLS in MPEG-DASH, which is backed up by this WWDC video where Apple announces the support of fragmented MP4 in HLS. The stream of this announcement is only available in Safari and through the WWDC app, but Bitmovin has shown that it also works on Mac iOS 10 and above, and for PC users on all recent browser versions including Edge, Firefox, Chrome, and (of course) Safari.
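The practical appeal of a common format is that one set of fragmented MP4 segments can be referenced from both an HLS playlist and a DASH manifest. The Python sketch below illustrates this with a minimal HLS media playlist (using `EXT-X-MAP` to point at the initialization segment) and a DASH `SegmentTemplate` element that address the same files. The file names `init.mp4` and `seg-N.m4s`, the 4-second duration, and the helper functions are assumptions for illustration; the output is not a complete or conformance-checked CMAF presentation.

```python
from typing import List
from xml.sax.saxutils import quoteattr


def hls_media_playlist(init_uri: str, segment_uris: List[str], duration_s: int) -> str:
    """Minimal HLS VOD media playlist referencing fragmented MP4 segments."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:7",
        f"#EXT-X-TARGETDURATION:{duration_s}",
        "#EXT-X-PLAYLIST-TYPE:VOD",
        f'#EXT-X-MAP:URI="{init_uri}"',  # initialization segment for fMP4
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{duration_s:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


def dash_segment_template(init_uri: str, media_template: str, duration_s: int) -> str:
    """DASH SegmentTemplate element addressing the same segments by number."""
    return (
        f"<SegmentTemplate initialization={quoteattr(init_uri)} "
        f"media={quoteattr(media_template)} "
        f'duration="{duration_s}" timescale="1" startNumber="1"/>'
    )


# Hypothetical file layout: one init segment plus three media segments.
segments = [f"seg-{n}.m4s" for n in range(1, 4)]

print(hls_media_playlist("init.mp4", segments, 4))
print(dash_segment_template("init.mp4", "seg-$Number$.m4s", 4))
```

Only the manifests differ; the media files are encoded, packaged, and cached once.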

MPEG Virtual Reality

Virtual reality is becoming a hot topic across the industry (and also academia), and it has also reached standards developing organizations like MPEG. Therefore, MPEG established an ad-hoc group (with an email reflector) to develop a roadmap required for MPEG-VR. Others have also started working on this, such as DVB, DASH-IF, and QUALINET (and maybe many others: W3C, 3GPP). In any case, it shows that there’s a massive interest in this topic, and Bitmovin has already shown what can be done in this area within today’s Web environments. Obviously, adaptive streaming is an important aspect of VR applications, with many research questions to be addressed in the (near) future. A first step towards a concrete solution is the Omnidirectional Media Application Format (OMAF), which is currently at working draft stage (details to be provided in a future blog post).

MPEG roadmap/vision

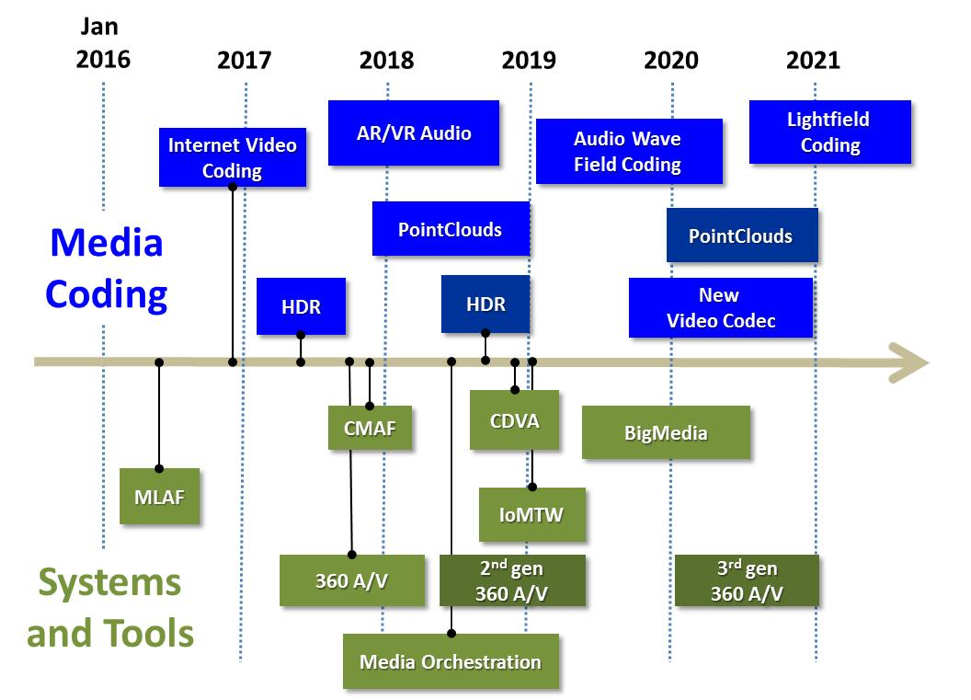

At its 115th meeting, MPEG published a document that lays out its medium-term strategic standardization roadmap. The goal of this document is to collect feedback from anyone in professional and B2B industries dealing with media, specifically but not limited to broadcasting, content and service provision, media equipment manufacturing, and the telecommunication industry. The roadmap is depicted in the figure and further described in the document available here. Please note that “360 AV” in the figure also refers to VR, but unfortunately this is not (yet) reflected in the figure. Nevertheless, the roadmap points out the aspects to be addressed by MPEG in the future, which would be relevant for both industry and academia.

The next MPEG meeting will be held in Chengdu, October 17-21, 2016. Feel free to contact us with any questions or comments.