With the increase in demand for premium content, you’re being pushed to deliver content faster, with increasingly crisp picture quality. To keep up, video industry leaders have to explore ways to take advantage of the luminance, contrast, and color gamut that HDR offers.

You’re probably familiar with HDR (high dynamic range) technology. Its brightness, color accuracy, and contrast, especially compared to standard dynamic range (SDR), mean that HDR is now common in displays from computers to TVs. Put simply, these displays can read and show images built from a wider gamut of color and brightness.

4K might be the current standard for display technology, but not all 4K is created equal, and different standards of HDR encoding produce disparate results. In this guide, we’ll briefly define HDR, talk about the differences between video and photo HDR, and finally discuss the three most common standards used by the industry to encode HDR content:

- HDR10

- HDR10+

- Dolby Vision

Ready? Buckle up!

What is HDR?

To begin, let’s understand what HDR actually is. Each pixel in a display is assigned a value for color and brightness. SDR limits those values to a specific range: for example, very dark shades of gray are simply displayed as black, and brighter grays are shown as white.

With HDR, this limitation is lifted because the display panels are capable of producing darker blacks and brighter whites. HDR also increases the number of steps between the darkest and brightest values, resulting in more shades of gray.

Differences Between Video HDR and Photo HDR

It’s important to know that there’s a difference between how the term is used and applied for videos and photos.

In photography, HDR refers to your camera trying to behave as your eyes do. Your irises can expand and contract as light levels change, something that cameras could not imitate until HDR came to the rescue. High-end cameras utilize HDR by combining several photos taken during a burst. The photos are taken at different exposures (i.e., stops), with the amount of light doubled from one to the next. These images are then combined into one stunningly vivid image.

If that’s not genius, I don’t know what is.

Unlike photo HDR, video HDR is just one single exposure. There are no fancy tricks with the camera merging multiple images together. Instead, the technology takes advantage of new HDR-compatible displays that make the bright parts of the image really bright while keeping the dark parts dark. The difference between the two, the so-called _contrast ratio_, is greater on HDR-capable televisions than on SDR sets. HDR makes your TV brighter only in the areas of the screen that need it.

Defining Contrast and Color

Contrast is the difference between dark and bright. Color is a separate value based on absolute RED, GREEN, and BLUE levels regardless of the video format. Color is produced by how we perceive light, so a greater range of light means we’ll perceive a greater range of color.

HDR provides a huge improvement to the range of both contrast and color, meaning that the brightest parts of the image can be made brighter than normal, which gives the image more apparent depth. The expanded color range means that RED, GREEN, and BLUE primary colors, as well as everything in between, are brighter and much more dynamic (especially between blue and green).

In other words, HDR handles difficult lighting well. Shots with a light background and a dark foreground (where a display’s contrast ratio comes in) are feasible without much effort or equipment.

Under the WCAG definition used for on-screen colors, contrast ratios range from 1 to 21 and are commonly written as 1:1 to 21:1. The ratio is calculated using the formula:

(L1 + 0.05) / (L2 + 0.05)

- L1 is the relative luminance of the lighter colors.

- L2 is the relative luminance of the darker colors.

For a better understanding of this topic, check out a definition of relative luminance.
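
To make the formula concrete, here is a minimal Python sketch. It assumes the WCAG 2.x definition of relative luminance for 8-bit sRGB colors, which the article links to but doesn't spell out; the function names are purely illustrative.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _linearize(channel_8bit: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255.0
    if c <= 0.03928:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance: 0.0 for black, 1.0 for white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(rgb_a: RGB, rgb_b: RGB) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    la, lb = relative_luminance(rgb_a), relative_luminance(rgb_b)
    l1, l2 = max(la, lb), min(la, lb)
    return (l1 + 0.05) / (l2 + 0.05)
```

Pure white against pure black gives the maximum of 21:1, and any color against itself gives 1:1.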

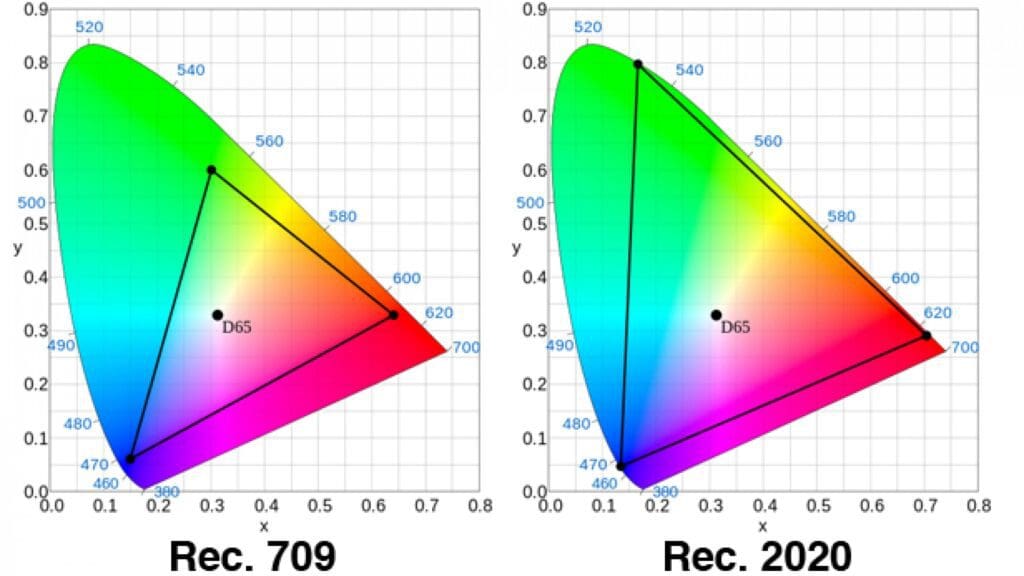

The range of colors that a specific display is capable of showing in an RGB system is known as its color gamut. Put another way, it’s the colors that can be created within the boundaries of three specific RGB color primaries.

Rec.709 is a color gamut that’s been in use for TV since 1990. By contrast, the newer ITU-R Rec.2020 color gamut places its primaries much farther apart than any previous broadcast color space, allowing for more saturated colors.
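
One rough way to see how much wider Rec.2020 is: compare the areas of the two gamut triangles on the CIE 1931 xy chromaticity diagram. The sketch below uses the published primary coordinates for each standard. Treat the result as an illustration only, since area on the xy diagram doesn't correspond to how many colors we actually perceive.

```python
from typing import Tuple

Point = Tuple[float, float]
Primaries = Tuple[Point, Point, Point]

# CIE 1931 xy chromaticity coordinates of the red, green, and blue primaries.
REC709: Primaries = ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060))
REC2020: Primaries = ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046))


def triangle_area(primaries: Primaries) -> float:
    """Area of the gamut triangle, computed with the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


ratio = triangle_area(REC2020) / triangle_area(REC709)
print(f"Rec.2020 covers about {ratio:.2f}x the xy area of Rec.709")
```

By this measure, the Rec.2020 triangle is a little under twice the size of the Rec.709 one.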

HDR Standards

HDR is currently very confusing, with different encoding standards requiring different kinds of hardware and video content. This means that a single standard can’t fit every use case. As mentioned earlier, the three most widely embraced standards are HDR10, HDR10+, and Dolby Vision.

HDR10

HDR10 is a standard that was advanced by the UHD Alliance and adheres to the following conventions:

- Uses the SMPTE ST 2084 Perceptual Quantizer (PQ) curve

- Is typically mastered for a peak luminance of 1,000 nits or more (the PQ curve itself can encode up to 10,000 nits)

- Uses the Rec.2020 color space

- Has 10bpc (bits per channel) encoding

- Is an open standard

- Uses static metadata
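
If you’re curious what the PQ curve looks like in practice, here is a hedged Python sketch of the ST 2084 transfer function using the constants from the standard. The function names and the full-range 10-bit quantization are simplifying assumptions for illustration; real video pipelines usually use narrow-range code values.

```python
# Constants defined by SMPTE ST 2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # absolute luminance represented by a PQ signal of 1.0


def pq_encode(nits: float) -> float:
    """Map absolute luminance in nits to a PQ signal value in [0, 1]."""
    y = min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1 + C3 * y_m1)) ** M2


def pq_decode(signal: float) -> float:
    """Map a PQ signal value in [0, 1] back to luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def to_10bit(signal: float) -> int:
    """Quantize to a 10-bit code value (full range assumed for simplicity)."""
    return round(signal * 1023)


for nits in (100, 1000, 10000):
    print(nits, "nits ->", to_10bit(pq_encode(nits)))
```

Note how 1,000 nits lands at roughly three quarters of the signal range: the curve spends most of its code values on darker tones, where our eyes are more sensitive to small changes.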

How Does HDR10 Handle Contrast Metadata?

HDR10 works by providing static metadata to the display. Metadata can be pictured as the information alongside the signal that tells your display how much of the PQ container will be used.

Static metadata is the same for every display. The peak and average brightness values are calculated across the entire video instead of a specific scene or frame. This means that it tells your display what the brightest and darkest points in the video are, so a single brightness mapping is applied to the entire video.
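
Here is a small sketch of how two such whole-video values could be derived. It assumes the names MaxCLL (maximum content light level) and MaxFALL (maximum frame-average light level) from the CTA-861.3 specification, which the article doesn’t mention by name, and it models each frame as a plain list of per-pixel light levels in nits rather than real video data.

```python
from typing import List, Tuple

Frame = List[float]  # per-pixel light levels in nits (simplified model)


def static_metadata(frames: List[Frame]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) computed over the whole video."""
    # MaxCLL: the brightest single pixel found in any frame.
    max_cll = max(max(frame) for frame in frames)
    # MaxFALL: the highest per-frame average brightness.
    max_fall = max(sum(frame) / len(frame) for frame in frames)
    return max_cll, max_fall


video = [
    [100.0, 200.0, 300.0],   # a moderately lit frame
    [50.0, 1000.0, 150.0],   # mostly dark frame with one bright highlight
    [400.0, 400.0, 400.0],   # evenly bright frame
]
max_cll, max_fall = static_metadata(video)
```

Whatever the content of individual scenes, the display only ever sees this one pair of numbers for the entire video.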

Advantages of HDR10 for Content Distribution

- HDR10 is the most popular HDR standard, thanks to being an open standard.

- Some of the cheapest HDR TV sets support it, which widens affordability and availability.

- It doesn’t cost TV manufacturers and streaming service providers any royalties to include it in their products because it’s an open standard.

- It is the most common and widely used format for content.

HDR10+

HDR10+ offers better picture quality than HDR10. The HDR10 industry standard provides a single luminance guide value that has to apply across the entire video. HDR10+, on the other hand, allows content creators to add an extra layer of data that updates the luminance on a scene-by-scene or even frame-by-frame basis. This helps displays deliver an impressive HDR picture experience.
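
The difference between one value for the whole video and one value per scene can be sketched in a few lines of Python. The scene names and brightness samples below are made up for illustration, and real HDR10+ metadata carries considerably more than a single peak per scene.

```python
from typing import Dict, List

# Hypothetical brightness samples (in nits) for three scenes.
scenes: Dict[str, List[float]] = {
    "night_street": [2.0, 40.0, 180.0],
    "sunrise": [300.0, 1500.0, 3800.0],
    "office": [20.0, 250.0, 600.0],
}


def global_peak(scenes: Dict[str, List[float]]) -> float:
    """Static approach: one peak value covering the entire video."""
    return max(max(levels) for levels in scenes.values())


def per_scene_peaks(scenes: Dict[str, List[float]]) -> Dict[str, float]:
    """Dynamic approach: a separate peak value for every scene."""
    return {name: max(levels) for name, levels in scenes.items()}


print(global_peak(scenes))
print(per_scene_peaks(scenes))
```

With the static approach, the dim night scene is described by the same 3,800-nit peak as the sunrise; with per-scene values, the display learns that it only needs to handle 180 nits there.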

Advantages of HDR10+

- Incorporates dynamic metadata, which provides separate information for each scene.

- Makes tone mapping more effective, since the display can adapt it to each scene.

- Allows luminance value of up to 4000 nits.

- Allows resolutions of up to 8K.

- Delivers HDR quality at a cheaper rate as compared to Dolby Vision.

- Is an open standard. Though most of it was developed by Samsung, any brand can use it or modify it.

- Offers more freedom to manufacturers as it moves away from the locked-in approach offered by Dolby Vision. This gives brands the freedom to bring their own strengths and processes into play.

Dolby Vision

Dolby Vision offers the highest quality but is the most demanding standard currently in the market.

- Allows for luminance values of up to 10,000 nits

- Incorporates dynamic metadata

- Supports up to 12bpc encoding

- Is a proprietary standard, so you have to pay a license fee to create content in it or to incorporate the technology into a device.
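
To put the bits-per-channel figures in perspective, this short sketch counts how many distinct levels each bit depth gives per color channel, and how many RGB combinations that allows. The 8-bit row is included as the usual SDR baseline, which is an assumption on my part rather than something stated above.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct code values one channel can take."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Number of distinct RGB combinations at this bit depth."""
    return levels_per_channel(bits) ** 3


for bits in (8, 10, 12):
    print(f"{bits}bpc: {levels_per_channel(bits)} levels, "
          f"{total_colors(bits):,} colors")
```

Every two extra bits quadruple the number of steps per channel: 256 at 8 bits, 1,024 at 10 bits, and 4,096 at 12 bits.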

How Does Dolby Vision Manage Contrast Metadata?

Dolby Vision incorporates dynamic metadata, which adjusts to the capabilities of your display instead of dealing with absolute values based on how the video was mastered. Dynamic metadata adjusts brightness levels based on each scene or frame, preserving more detail between scenes that are either very bright or very dark.
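
Here is a deliberately simplified sketch of why per-scene information preserves detail. It is not Dolby’s actual algorithm, which is proprietary; it assumes a naive linear scale that only kicks in when the signaled content peak exceeds what the display can show, and all the numbers are hypothetical.

```python
def scale_to_display(pixel_nits: float,
                     content_peak_nits: float,
                     display_peak_nits: float) -> float:
    """Naive tone mapping: shrink everything if the peak exceeds the display."""
    if content_peak_nits <= display_peak_nits:
        return pixel_nits  # the display can show this content as mastered
    return pixel_nits * display_peak_nits / content_peak_nits


DISPLAY_PEAK = 1000.0   # hypothetical TV
VIDEO_PEAK = 4000.0     # brightest highlight anywhere in the video
SCENE_PEAK = 200.0      # brightest highlight in one dark scene

pixel = 200.0  # a highlight inside the dark scene

# Static metadata: the display scales every scene by the whole-video peak.
static_result = scale_to_display(pixel, VIDEO_PEAK, DISPLAY_PEAK)

# Dynamic metadata: the display knows this scene never exceeds 200 nits.
dynamic_result = scale_to_display(pixel, SCENE_PEAK, DISPLAY_PEAK)
```

In this toy model, the static approach dims the 200-nit highlight to 50 nits, while the dynamic approach leaves it at 200 nits because the scene already fits within the display’s range.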

Advantages of Dolby Vision

- Capable of replicating real-life scenes and images. Sunrises, for example, look more realistic than they do in other HDR standards.

- Most closely expresses the creator’s intention.

- Adjusts tone and color on a scene-by-scene basis.

- Produces brighter and more detailed dark scenes.

The Requirements of Common HDR Streaming Services

There’s no shortage of places to find streaming HDR content, though not every company offers the same standards.

- Amazon Prime: Supports 4K UHD. You’ll need a minimum bandwidth of 25 Mbps.

- Netflix: Supports Dolby Vision and HDR10. You’ll need a minimum bandwidth of 25 Mbps.

- iTunes: Supports HDR and Dolby Vision. You’ll need a minimum bandwidth of 25 Mbps.

- YouTube: Supports HDR. You’ll need a minimum bandwidth of 15 Mbps.

- Google Play: Supports 4K UHD. You’ll need a minimum bandwidth of 15 Mbps.

- Vudu: Supports Dolby Vision and HDR10. You’ll need a minimum bandwidth of 11 Mbps.
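
If you want to check which of these services your connection can handle, the list above can be captured in a small lookup. The bandwidth figures are the ones quoted in this article; the function name is just illustrative.

```python
from typing import List

# Minimum bandwidth in Mbps, as listed above.
MIN_BANDWIDTH_MBPS = {
    "Amazon Prime": 25,
    "Netflix": 25,
    "iTunes": 25,
    "YouTube": 15,
    "Google Play": 15,
    "Vudu": 11,
}


def services_within(bandwidth_mbps: float) -> List[str]:
    """Return the services whose minimum bandwidth you meet, sorted by name."""
    return sorted(
        name
        for name, required in MIN_BANDWIDTH_MBPS.items()
        if required <= bandwidth_mbps
    )


print(services_within(15))
```

A 15 Mbps connection, for example, covers Google Play, Vudu, and YouTube, but falls short of the 25 Mbps the other three ask for.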

Conclusion

If you’ve made it this far, you’ve covered a lot of ground: you’ve refined your knowledge of HDR, you understand the differences between photography HDR and video HDR, and you’re familiar with the different HDR standards and their advantages. I’ve also shown you where to go if you want to stream this content.

Quality video is key to engagement with customers who want to stream video, so it’s important to stay up to date about how to produce content that’s HDR-enabled. Consumer HDR TVs are becoming more and more common, and most smartphones being produced today are capable of displaying HDR content.

Keeping up with advancements in video technology can be daunting, and HDR is nothing short of complex. Consider partnering with Bitmovin—they provide video infrastructure for digital media companies around the globe and have offered support for Dolby Vision (and all other HDR standards) since 2020.