Part I – Updating Library Dependencies from x86 to ARM

ARM CPU architectures have been in the news a lot recently:

- Apple announced that they are going to use ARM processors and ditch Intel.

- Cloud computing platforms such as AWS are offering instances with ARM.

It seems like there is a significant shift in the tech industry towards ARM. So is the hype around it justified? If so, how can you port your product to ARM-based machines? As part of my internship with Bitmovin, I was tasked with migrating our encoding service from x86 to ARM. This blog series explains what ARM is and how I got Bitmovin’s Encoding product running on it.

ARM vs Other CPU Architectures

Before diving into how we migrated from x86 to ARM, I want to clarify some terminology and explain what ARM offers and how it differs from other CPU architectures. If you just wish to see my journey porting our encoding services to ARM, skip this part.

Currently, x86_64 (sometimes called AMD64) is more or less the industry standard for (on-demand) cloud computing platforms, such as AWS, and is used in most desktop computers. The x86 architecture originates from the late 1970s, when a clock speed of a few megahertz was blazing fast. It has been consistently updated and extended with new features, such as SSE4, and remains very prominent to this day. Without getting too much into the fundamentals of the architecture, it is important to know that x86_64 is a Complex Instruction Set Computer (CISC) architecture, meaning that a single instruction can carry out a complex, multi-step task. However, such an instruction can take more than one clock cycle, depending on its complexity.

By contrast, ARM is a Reduced Instruction Set Computer (RISC) architecture that only has a small and highly optimized set of instructions, each of which takes far fewer clock cycles. This makes ARM processors far more energy-efficient and cost-effective.

As there are also older versions of ARM that only support 32-bit, this blog series only refers to the 64-bit (AArch64) version of ARM. Below is a quick table of the different names in use:

| CPU architecture | 32-bit name(s) | 64-bit name(s) |
| --- | --- | --- |
| x86 | i386 | x86_64, x64 or AMD64 |
| ARM | AArch32, arm/v6, arm/v7 | AArch64, Arm64(/v8) |

Why care about ARM?

So now you might wonder why somebody who is focusing on cloud computing should care about ARM. These computers do not run out of battery like a phone or a laptop, but you still have to pay the electricity bill or, in the case of AWS, the cost of an instance. ARM instances (which use Amazon’s own Graviton processors) only incur 80% of the cost of comparable Intel x86 instances. So if there is a lot of money to be saved, why not switch to those instances and call it a day? Because ARM uses a reduced and different instruction set compared to x86, every single program needs to be compiled for ARM, and this is exactly where my journey from x86 to ARM begins.

What’s the goal?

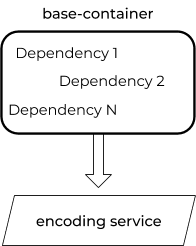

The goal of my project was to port Bitmovin’s main encoding service to ARM. If everything were written in a platform-independent programming language, such as Java, there wouldn’t be so much to do. However, in our case, the encoding service is a highly optimized C++ project that depends on several other C/C++ libraries, so every dependency needs to be compiled individually for ARM. The encoding service is built in a Docker container that houses every required dependency, so the first step was to build this base container for ARM.

The journey of migrating from x86 to ARM

To begin the migration from x86 to ARM, I started up an AWS ARM instance and tried to build the project natively, without the hassle of setting up the correct cross-compiling flags and other optimizations. The first few libraries looked promising, as they compiled successfully without any intervention. However, the build eventually stopped with an error message. The dependencies of the ARM build fall into four categories: distribution packages, Python packages, binaries, and custom/open-source dependencies.

1. Distribution packages

The distribution packages include basic dependencies like CMake, git, Python, make, etc., which are needed to compile the other libraries. If your distribution supports ARM, there is a pretty good chance that most packages are already precompiled and you simply need to install them. In my case, the distribution was Ubuntu 18.04 and very few adjustments were necessary, as the package manager apt automatically installed the required packages for aarch64.

2. Python packages

Even though Python packages typically cause no problems, some packages, such as matplotlib, are precompiled for x86 but not for ARM. This means that on x86 pip simply installs a precompiled version, whereas on ARM it has to compile the library before it can be used. Depending on the number of Python packages, this can drastically increase build time. One way to avoid the compilation would be to install precompiled packages from the distribution. However, in our case the distribution only offered an outdated version of matplotlib, so we could not use it.

3. Binaries

In some build cases, the only libraries available are simple binaries without any source code. This is most often the case when using proprietary technologies or libraries that depend on platform-specific features. As x86 is currently the industry standard for server hardware and ARM is just starting to gain popularity, nearly all binaries are only compiled for x86 and can’t be used on ARM natively. It is possible to emulate x86 instructions on ARM with QEMU, but this approach is awfully slow and would delay the whole encoding process. This is why those dependencies were excluded, at least until native libraries are available or another solution is found.

4. Custom/Open source dependencies

Now to the fun part. The packages and binaries described so far either work with minor adjustments or not at all, and there isn’t much that can be changed about them. When the source code is available, however, there are ways to make your build work. Some libraries are excellent examples that build out of the box on many different CPU architectures. Others need to be updated to a newer version that is (better) optimized for ARM, and some (older) ones require manual intervention.

Some of the custom/open-source dependencies only required a compiler flag to specify whether the CPU was little- or big-endian, whereas others automatically set the -Werror flag. With this flag, if for example a warning for an unused variable is triggered, the compiler treats the warning as an error and stops the build. The problem is that the CMake files in some libraries check whether the flag is already set and add it themselves if it is not, so unfortunately there is no easy way to disable it. This in itself isn’t a problem as long as the code compiles without warnings, as it did on x86, but it becomes one when a warning occurs only on ARM and causes the build to stop. In our case, this particular issue flew under the radar, as the current CI/CD system only builds on x86, so a warning that appeared only on ARM stopped the ARM build. To resolve the issue, we removed the part of the CMake code that adds the flag.
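To illustrate how a warning can appear on only one architecture, here is a minimal sketch. It is not taken from any of the libraries mentioned above; it just shows one well-known difference: on AArch64 Linux plain `char` is unsigned, while on x86_64 Linux it is signed. A comparison such as `c < 0` on a plain `char` can therefore be reported as always false on ARM only (for example by GCC's -Wtype-limits), and with -Werror that stops the build. The function names are hypothetical.

```cpp
#include <cstdint>
#include <cstring>
#include <limits>

// True when plain `char` is signed on the target (typically x86_64 Linux),
// false when it is unsigned (typically AArch64 Linux).
constexpr bool plain_char_is_signed() {
    return std::numeric_limits<char>::is_signed;
}

// Portable replacement for `c < 0`: test the high bit explicitly, so the
// result and the compiler diagnostics are the same on every platform.
bool has_high_bit(char c) {
    return (static_cast<unsigned char>(c) & 0x80u) != 0;
}

// Runtime endianness probe: look at the first byte of the integer 1.
// Both x86_64 and AArch64 Linux are normally little-endian.
bool is_little_endian() {
    const std::uint32_t probe = 1;
    unsigned char first_byte = 0;
    std::memcpy(&first_byte, &probe, 1);
    return first_byte == 1;
}
```

Writing the check as in `has_high_bit` avoids depending on the signedness of `char` at all, which is usually easier than special-casing the warning per platform.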

Another library checks the size of pthread_mutex_t, which is used to protect shared data structures from concurrent modifications, and creates an object of equal size. It’s important to note that pthread_mutex_t is 40 bytes on x86 and 48 bytes on ARM. Therefore, the size of the object must be changed when compiling for AArch64.
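One way to avoid a hardcoded size is to let the compiler derive it. The following is a minimal sketch under that assumption, not the code of the library in question; the class name `OpaqueMutex` and its methods are hypothetical. The storage is sized and aligned from `pthread_mutex_t` itself, so the same source builds on x86_64 and AArch64.

```cpp
#include <cstddef>
#include <new>
#include <pthread.h>

// Hypothetical wrapper that keeps the mutex in a raw byte buffer.
// The buffer size comes from sizeof(pthread_mutex_t), so nothing has to be
// edited when the size differs between platforms.
class OpaqueMutex {
public:
    OpaqueMutex() : mutex_(new (storage_) pthread_mutex_t) {
        pthread_mutex_init(mutex_, nullptr);
    }
    ~OpaqueMutex() { pthread_mutex_destroy(mutex_); }

    OpaqueMutex(const OpaqueMutex&) = delete;
    OpaqueMutex& operator=(const OpaqueMutex&) = delete;

    // Both calls return true on success.
    bool lock() { return pthread_mutex_lock(mutex_) == 0; }
    bool unlock() { return pthread_mutex_unlock(mutex_) == 0; }

    static constexpr std::size_t storage_size() { return sizeof(storage_); }

private:
    alignas(pthread_mutex_t) unsigned char storage_[sizeof(pthread_mutex_t)];
    pthread_mutex_t* mutex_;
};

// If a fixed-size buffer is unavoidable, a compile-time check at least turns
// a silent overflow into a clear build error on the new platform.
static_assert(OpaqueMutex::storage_size() >= sizeof(pthread_mutex_t),
              "mutex storage is too small for this platform");
```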

A final library used inline assembler to make use of specific CPU features. However, the inline assembler (__asm) blocks didn’t build on ARM, and even if they had, the assembler code would need to be rewritten for ARM. Some of the dependencies within a library check the CPUID, which is used to determine the features supported by the CPU. CPUID is an x86 instruction, so although ARM provides its own ways to query CPU features, the check failed nonetheless because the library was expecting the x86 instruction set.
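A feature check that works on both architectures could look like the sketch below. It assumes GCC or Clang on Linux and is not the code of the library described above; the function names are hypothetical. On x86 it uses `__get_cpuid` from `<cpuid.h>` to test for SSE4.1, and on AArch64 it asks the kernel via `getauxval(AT_HWCAP)` whether Advanced SIMD (NEON) is available.

```cpp
#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>       // __get_cpuid (GCC/Clang)
#elif defined(__aarch64__) && defined(__linux__)
#include <sys/auxv.h>    // getauxval, AT_HWCAP
#include <asm/hwcap.h>   // HWCAP_ASIMD
#endif

// Name of the detection mechanism compiled into this build.
const char* detection_backend() {
#if defined(__x86_64__) || defined(__i386__)
    return "cpuid";
#elif defined(__aarch64__) && defined(__linux__)
    return "hwcap";
#else
    return "none";
#endif
}

// Returns true if a 128-bit SIMD extension is reported by the CPU:
// SSE4.1 on x86, Advanced SIMD (NEON) on AArch64.
bool has_simd_support() {
#if defined(__x86_64__) || defined(__i386__)
    unsigned int eax = 0, ebx = 0, ecx = 0, edx = 0;
    if (__get_cpuid(1, &eax, &ebx, &ecx, &edx) == 0) {
        return false;  // CPUID leaf 1 not available
    }
    return (ecx & (1u << 19)) != 0;  // leaf 1, ECX bit 19 = SSE4.1
#elif defined(__aarch64__) && defined(__linux__)
    return (getauxval(AT_HWCAP) & HWCAP_ASIMD) != 0;
#else
    return false;  // unknown platform: assume no SIMD and use scalar code
#endif
}
```

The important part is the final `#else` branch: an unknown platform gets a safe answer instead of a build failure.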

It always depends on the library, but my experience shows that certain things are prone to break on different platforms. Compilers abstract away a lot of platform details when compiling for a new platform, but not everything, and there are different macros to check for the CPU architecture. So always keep in mind which platforms the code should run on and, if possible, use code that works on every platform. Where code has to be platform-specific, guard it with macros and provide implementations for a wider range of platforms.
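As a small illustration of that advice, the sketch below adds two float arrays with SSE intrinsics on x86_64, NEON intrinsics on AArch64, and plain C++ everywhere else. It assumes GCC or Clang, which define `__x86_64__` and `__aarch64__`; the function name `add_arrays` is hypothetical and not part of the encoding service.

```cpp
#include <cstddef>

#if defined(__x86_64__)
#include <immintrin.h>   // SSE intrinsics
#elif defined(__aarch64__)
#include <arm_neon.h>    // NEON intrinsics
#endif

// out[i] = a[i] + b[i] for i in [0, n).
void add_arrays(const float* a, const float* b, float* out, std::size_t n) {
    std::size_t i = 0;
#if defined(__x86_64__)
    // x86_64: process four floats per iteration with SSE.
    for (; i + 4 <= n; i += 4) {
        const __m128 va = _mm_loadu_ps(a + i);
        const __m128 vb = _mm_loadu_ps(b + i);
        _mm_storeu_ps(out + i, _mm_add_ps(va, vb));
    }
#elif defined(__aarch64__)
    // AArch64: process four floats per iteration with NEON.
    for (; i + 4 <= n; i += 4) {
        const float32x4_t va = vld1q_f32(a + i);
        const float32x4_t vb = vld1q_f32(b + i);
        vst1q_f32(out + i, vaddq_f32(va, vb));
    }
#endif
    // Scalar loop: handles the remaining elements, and the whole array on
    // platforms without a specialized path.
    for (; i < n; ++i) {
        out[i] = a[i] + b[i];
    }
}
```

Because the scalar loop always exists, a new architecture compiles and runs correctly from day one, and a specialized path can be added later.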

Compiling everything from x86 to ARM

After working through all dependencies and setting up the environment, it was finally possible to start compiling our main encoding service. If all binaries had been available for ARM, compiling our project would have worked without changing anything. However, some features of our encoding service require specific binaries, and for some of them there was no ARM alternative available. Those features therefore had to be disabled for the ARM build.

CMake was used to control the compilation process: architecture checks were implemented, and when ARM was detected, specific flags were set to disable features that weren’t natively available on that architecture. To summarize, I went through the whole project, and every time a library was used that wasn’t available, I let the code fall back to something that is available. It is important to note that the dependencies aren’t always disabled, only when those flags are set. This way it was possible to maintain a central file where specific libraries can be disabled, without going through the whole source code again to undo any changes. So if a binary for ARM becomes available, we can easily remove the flag without changing everything back.
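On the C++ side, such a flag can be consumed roughly as in the sketch below. Everything here is hypothetical: the macro `ENCODER_DISABLE_X86_ONLY_FILTER` stands for a definition the build system would pass when it detects ARM, and the two filter functions are placeholders for a proprietary x86-only binary and its generic replacement.

```cpp
#include <string>

// Placeholder for a generic implementation that is available everywhere.
std::string generic_filter(const std::string& frame) {
    return "generic:" + frame;
}

#ifndef ENCODER_DISABLE_X86_ONLY_FILTER
// Placeholder for a call into a binary that only exists for x86.
// It is compiled only when the feature has not been disabled.
std::string x86_only_filter(const std::string& frame) {
    return "x86-only:" + frame;
}
#endif

// Single entry point used by the rest of the code. Callers never need to
// know which implementation was selected at build time.
std::string apply_filter(const std::string& frame) {
#ifdef ENCODER_DISABLE_X86_ONLY_FILTER
    return generic_filter(frame);   // fallback when the flag is set
#else
    return x86_only_filter(frame);  // default path
#endif
}
```

Keeping the decision behind one entry point means that removing the flag later re-enables the original path without touching any call site.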

I’ll continue our x86 to ARM journey in two follow-up posts.
