Part II – Applying Docker Tags to ARM

The Journey from x86 to ARM continues

As I covered in the first installment of our Journey from x86 to ARM series, the first major step in migrating to a different CPU architecture is to update all relevant library dependencies that are compiled and optimized for the old architecture so that they work on the new one. In the case of x86 to ARM, nearly all libraries (distribution packages, Python packages, binaries, and custom/open-source code) needed some kind of update. ARM is still relatively new to cloud computing, and much software is not yet optimized for its capabilities. Check out my first blog post to learn about the value of ARM architectures and how we updated our library dependencies to support them.

The next phase of moving our CPU architecture from x86 to ARM was applying Docker tags.

For the rest of the series see

Phase II of implementation: Working with Docker Tags

Now that the encoding service compiles successfully for ARM, we can already use it for customers who don’t need the few optional features that are still missing, along with their specific dependencies. So, what’s the next step?

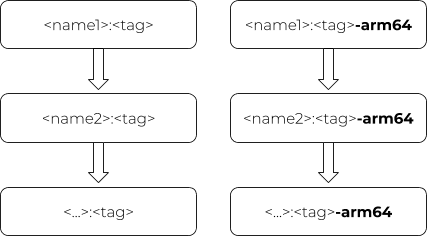

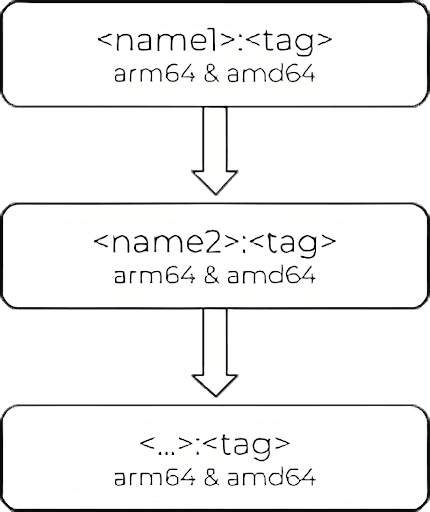

All of our workflows run inside Docker containers that get pushed to Docker Hub. Pushing the ARM images under the existing tags would therefore overwrite the x86 versions. There are two primary ways to prevent this. The first option is to append “-arm64” to each Docker tag.
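For the suffix approach, here is a minimal Python sketch of the tagging rule. The helper `arm64_tag` and the image name `example/encoder` are hypothetical. Digest references (`@sha256:...`) are rejected rather than handled.

```python
def arm64_tag(image_ref: str, suffix: str = "-arm64") -> str:
    """Hypothetical helper: "example/encoder:1.2.3" -> "example/encoder:1.2.3-arm64"."""
    if "@" in image_ref:
        # Digest references pin exact content and cannot simply be re-tagged.
        raise ValueError(f"digest references are not supported: {image_ref}")
    # Only look for a tag after the last "/", so a registry port such as
    # "localhost:5000/" is not mistaken for a tag separator.
    last_segment = image_ref[image_ref.rfind("/") + 1:]
    if ":" in last_segment:
        repo, tag = image_ref.rsplit(":", 1)
    else:
        repo, tag = image_ref, "latest"  # Docker's default when no tag is given
    return f"{repo}:{tag}{suffix}"


if __name__ == "__main__":
    for ref in ["example/encoder:1.2.3", "localhost:5000/encoder"]:
        print(ref, "->", arm64_tag(ref))
```

Every x86 tag keeps its name, and the ARM build is pushed next to it. The downside is that users have to pick the right tag for their platform themselves.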

However, there is a more sophisticated option: buildx, the Swiss Army knife of multi-platform Docker images.

Efficiently pushing information to Docker with buildx

When an image is pushed to Docker Hub (or to any other Docker registry), a manifest is pushed along with it. The manifest gives Docker more information about the image, such as its OS and CPU architecture. With this information, Docker can tell which platform is needed and automatically download the correct version for the system currently in use. It is possible to create this manifest manually, but buildx takes care of it for you. Below, you can see how buildx automatically maps a single tag to the matching CPU architectures, compared to the manual method of appending “-arm64” to each tag.

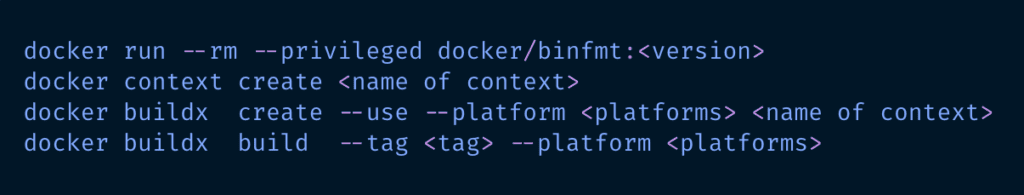
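To illustrate what the manifest adds, here is a simplified Python model of a manifest list, the structure Docker uses for multi-platform tags. It also shows how a client could pick the entry for its own platform. The digests are placeholders. The selection ignores details that Docker’s real logic handles, such as CPU variants and fallbacks.

```python
import platform
from typing import Tuple

# Simplified, hypothetical manifest list for one tag. Real entries also carry
# "mediaType" and "size"; the digests below are placeholders.
MANIFEST_LIST = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.docker.distribution.manifest.list.v2+json",
    "manifests": [
        {"digest": "sha256:" + "a" * 64,
         "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:" + "b" * 64,
         "platform": {"os": "linux", "architecture": "arm64", "variant": "v8"}},
    ],
}

# Map the names Python reports for the local CPU onto Docker's architecture names.
_ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def select_manifest(manifest_list: dict, os_name: str, arch: str) -> str:
    """Return the digest of the entry matching os_name/arch (variants are ignored)."""
    for entry in manifest_list["manifests"]:
        plat = entry["platform"]
        if plat["os"] == os_name and plat["architecture"] == arch:
            return entry["digest"]
    raise LookupError(f"no image for {os_name}/{arch}")


def local_platform() -> Tuple[str, str]:
    """Best-effort (os, architecture) of the current machine in Docker's naming."""
    machine = platform.machine().lower()
    return platform.system().lower(), _ARCH_ALIASES.get(machine, machine)


if __name__ == "__main__":
    os_name, arch = local_platform()
    try:
        print(select_manifest(MANIFEST_LIST, os_name, arch))
    except LookupError as err:
        print(err)
```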

Combined with QEMU emulation, buildx makes it possible to build for a different platform without having that platform’s hardware. This means we can compile for ARM on an x86 machine. With buildx, Docker (any version newer than 18.09) can build for other platforms with only four commands.

The first command sets up QEMU, the open-source machine emulator, which is required for building for a foreign architecture. The next two commands create a normal Docker context and a buildx context on top of it. The last command looks very similar to the normal build command, but there are some key differences under the hood. These include a different storage component and Docker drivers that support multi-platform builds. Other differences that don’t apply to our specific use case are described on the buildx GitHub page.
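The four steps described above can be sketched as follows, wrapped in Python so the commands can be printed or run in sequence. This is an illustration, not our exact pipeline. The context name `multiarch-env` and the image tag are hypothetical. Registering QEMU through the `multiarch/qemu-user-static` image is one common way to set up emulation, assumed here.

```python
import shlex
import subprocess
from typing import List

CONTEXT_NAME = "multiarch-env"  # hypothetical context/builder name


def buildx_commands(image: str, platforms: List[str],
                    context: str = CONTEXT_NAME) -> List[List[str]]:
    """Return the four setup/build commands as argument lists."""
    return [
        # 1. Register QEMU as the handler for foreign-architecture binaries.
        ["docker", "run", "--rm", "--privileged",
         "multiarch/qemu-user-static", "--reset", "-p", "yes"],
        # 2. Create a normal Docker context.
        ["docker", "context", "create", context],
        # 3. Create a buildx builder on that context and make it the default.
        ["docker", "buildx", "create", context, "--use"],
        # 4. Build all platforms at once and push the images plus the manifest list.
        ["docker", "buildx", "build", "--platform", ",".join(platforms),
         "-t", image, "--push", "."],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing command


if __name__ == "__main__":
    run(buildx_commands("example/encoder:1.0", ["linux/amd64", "linux/arm64"]))
```

The dry run is the default so the sequence can be reviewed before anything touches the Docker daemon.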

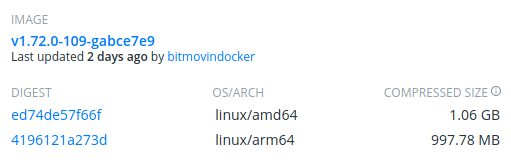

Once all of the commands have completed and the image has been pushed to Docker Hub, all specified platforms are listed under the same tag and ready to be used.

Fantastic, right? Sadly, this solution has some drawbacks. First, at the time of writing, buildx is still an experimental feature that provides early access to a future version of the docker build command. Second, if buildx builds an image without any argument defining where to export or push it, the image isn’t listed by the <docker images> command. It then seems as if the image was deleted or the build didn’t work. This happens because buildx uses a different storage component by default. That storage component can distinguish between CPU architectures, which the default one cannot. It’s also possible to use buildx with the default Docker driver, but then it doesn’t support multiple CPU architectures. This is why the recommended method is to push multi-platform images directly to a registry instead of storing them locally first.

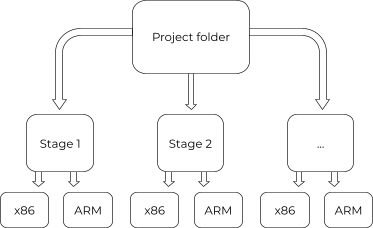
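One way to avoid the “vanished image” surprise is to always tell buildx where the result should go. The sketch below assumes a builder created with `docker buildx create`, which uses the docker-container driver. With such a builder, `--load` copies a single-platform result into the local image store that <docker images> lists, and `--push` sends a multi-platform result straight to the registry. The helper name and image tags are hypothetical.

```python
from typing import List


def buildx_build(image: str, platforms: List[str], context_dir: str = ".") -> List[str]:
    """Build command whose result ends up somewhere visible (hypothetical helper)."""
    cmd = ["docker", "buildx", "build", "--platform", ",".join(platforms), "-t", image]
    if len(platforms) == 1:
        # --load exports into the local image store, which in the buildx
        # versions of the time could only hold one platform per tag.
        cmd.append("--load")
    else:
        # Multi-platform results go straight to the registry.
        cmd.append("--push")
    cmd.append(context_dir)
    return cmd


if __name__ == "__main__":
    print(buildx_build("example/encoder:dev", ["linux/arm64"]))
    print(buildx_build("example/encoder:1.0", ["linux/amd64", "linux/arm64"]))
```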

Using CircleCI to speed up compiling

The next problem I faced during the x86 to ARM migration came up in the integration phase. I wanted to split the ARM and x86 builds across different machines to make use of the parallel building capabilities of our CI/CD solution, CircleCI. However, when exporting the image, the information about the platform would get lost. To fix this, I used the <--cache-to> and <--cache-from> arguments provided by buildx and saved the cache to a different folder depending on the platform. Otherwise, everything would end up in the same folder and CircleCI would not know which cache to use. The following steps then reuse this cache.
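The per-platform cache arguments could look roughly like this. The sketch assumes buildx’s local cache exporter and a hypothetical `/tmp/buildx-cache` root that the CI jobs persist between steps, for example via CircleCI workspaces. Each parallel job would call `platform_build` with its own platform.

```python
from typing import List

CACHE_ROOT = "/tmp/buildx-cache"  # hypothetical root, persisted between CI jobs


def cache_dir(platform: str, root: str = CACHE_ROOT) -> str:
    """One cache folder per platform, e.g. linux/arm64 -> <root>/linux-arm64."""
    return f"{root}/{platform.replace('/', '-')}"


def platform_build(image: str, platform: str, root: str = CACHE_ROOT) -> List[str]:
    """Build a single platform and export its layer cache to that platform's folder."""
    folder = cache_dir(platform, root)
    return [
        "docker", "buildx", "build", "--platform", platform, "-t", image,
        "--cache-from", f"type=local,src={folder}",
        # mode=max also exports the layers of intermediate build stages.
        "--cache-to", f"type=local,dest={folder},mode=max",
        ".",
    ]


if __name__ == "__main__":
    for plat in ["linux/amd64", "linux/arm64"]:  # one CI job per platform
        print(platform_build("example/encoder:1.0", plat))
```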

With this fix, one machine builds x86 while the other builds ARM. However, the two images cannot be pushed separately without one overwriting the other. An additional step is therefore necessary to “sync up” both builds before pushing them to Docker Hub. This step is set up to build both architectures. When it runs, Docker notices that they have already been built and that their contents are in the cache, so it simply reuses them and pushes them to Docker Hub.
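A sketch of that sync step uses the same hypothetical cache layout as the per-platform jobs. It imports every platform’s cache (buildx accepts <--cache-from> more than once), then builds all platforms together and pushes a single multi-platform tag. Layers found in the caches are reused rather than rebuilt.

```python
from typing import List

CACHE_ROOT = "/tmp/buildx-cache"  # hypothetical, same layout as the per-platform jobs


def cache_dir(platform: str, root: str = CACHE_ROOT) -> str:
    """Same per-platform folder naming as the build jobs."""
    return f"{root}/{platform.replace('/', '-')}"


def sync_and_push(image: str, platforms: List[str], root: str = CACHE_ROOT) -> List[str]:
    """Rebuild all platforms from their caches and push one multi-platform tag."""
    cmd = ["docker", "buildx", "build", "--platform", ",".join(platforms), "-t", image]
    for plat in platforms:
        # Import each platform's cache so already-built layers are reused.
        cmd += ["--cache-from", f"type=local,src={cache_dir(plat, root)}"]
    cmd += ["--push", "."]
    return cmd


if __name__ == "__main__":
    print(sync_and_push("example/encoder:1.0", ["linux/amd64", "linux/arm64"]))
```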

Finally, everything is working (nearly) flawlessly, except for one last thing: the build times on ARM. The CircleCI web interface shows that the x86 stage takes 11 minutes, while the same stage for ARM takes nearly three hours, roughly 16x longer. It is important to note that building natively on ARM is much faster. The slowdown comes from the extra overhead of cross-building and emulating ARM with QEMU.
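As a quick check on the slowdown factor, take three hours as an upper bound for the ARM stage:

$$
\frac{t_{\mathrm{ARM}}}{t_{\mathrm{x86}}} < \frac{180\ \mathrm{min}}{11\ \mathrm{min}} \approx 16.4,
\qquad
18 \times 11\ \mathrm{min} = 198\ \mathrm{min} = 3.3\ \mathrm{h}.
$$

“Nearly three hours” therefore corresponds to a factor of about 16. An 18x slowdown would take well over three hours.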

Running the ARM compile step natively on an ARM machine would improve performance, but CircleCI doesn’t currently offer any ARM machines. ARM maintains a list of recommended CI/CD solutions, so switching to one of those systems might improve the build time. Another option would be to distribute compilation across a cluster of machines with a tool like icecream.

What’s left to do?

Although our Docker tag setup is complete, a few additional optimizations could further improve our workflows. As previously mentioned, it might be worth exploring other CI/CD solutions to improve build times. We could also add buildx to the base container. To do so, we would need to rework the cache management and how the build stages interact, because our current setup caches within a single build rather than from one build to the next.

The future of Bitmovin’s encoding in ARM

ARM CPU infrastructure will remain in place for at least the next few years as the associated processes continue to be improved and optimized. However, at the time of writing, ARM is only just gaining popularity in the cloud computing space and still needs significant work. Currently, only AWS offers ARM instances, and most proprietary software isn’t available for ARM yet. As is the case for most cloud-based products and services, a process that “just runs” is not enough; it must also be optimized for performance. The final blog post of this series will cover the encoding performance tests in which my colleague, René Schaar, compared x264 and x265 on ARM against Intel and AMD (x86). Stay tuned to find out the results and the associated costs of running each CPU architecture.
