Now that AV1 has entered its final stage of development and is getting close to finalizing its features, it’s a perfect time to take a closer look at what’s in store for the future of video streaming. With Apple announcing their decision to join the Alliance for Open Media in January, practically all major tech leaders are on board and AV1 looks to be in good shape for becoming a widespread standard in the near future. Learn what video encoding advancements are coming in this new open codec in the upcoming webinar on Thursday March 22.

But what makes AV1 stand out technologically? In this post, we cover five key tools included in AV1 that help reduce bandwidth demands by up to 30% while retaining or even improving picture quality.

A royalty-free solution to match increasing demands in streaming quality and speed

Perhaps AV1’s most important feature is not a technological one: It was designed from the very start to be completely royalty-free, in an effort to provide a truly open video codec capable of providing high quality video streaming at lower bitrates. With the availability of high resolution content constantly increasing and technologies like VR and 360° video on the rise, the need for a suitable, technologically advanced and open codec has become apparent among large-scale content providers. This desire is probably best documented by the fact that virtually all leading industry players and tech companies are contributing members of the Alliance for Open Media, the development foundation behind AV1.

The alliance has set out to finally provide an open standard for internet video streaming, following the path of other open standards like CSS or PNG, which are already shaping our daily digital reality. Bitmovin has been a trailblazer pushing AV1 to become the standard for years to come. Learn more about the development timeline that led to the formation of AV1.

To name an example, Netflix, a major provider and driver of innovation in the industry, has already stated that they expect to be an early adopter of AV1, in addition to contributing to the royalty-free development community. Mozilla is another key supporter, providing a successful browser implementation of AV1 for Firefox Nightly (powered by Bitmovin). With practically all big names on board, AV1 seems poised to become the standard for a world of content that relies on high-resolution video, VR and AR applications.

For now, let’s take a closer look at the five key encoding and decoding techniques which make AV1 an interesting choice to use in video streaming.

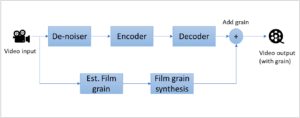

Film grain synthesis

Film grain occurs commonly in photographic film, most noticeably in over-enlarged pictures, but can also be applied digitally for artistic effect. During digital video compression, film grain creates massive problems: an encoder cannot easily recognize it as grain, and because the constant “noise” is random, it compresses poorly and takes up a large share of the bitstream. This leads to high bitrate requirements for transmitting very little information. Since the grain is of little actual value for the perceived quality (after all, the human brain tends to filter out visual noise to some extent), it is desirable not to transfer it with the bitstream at all, but to re-apply it later.

This idea forms the basis for AV1’s film grain synthesis. The goal is to de-noise the initial content before encoding it and then re-add the noise or grain effect during decoding, just before output. This way, the unnecessary information does not have to be transmitted at all and the overall amount of data can be reduced substantially.
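To make the de-noise, transmit, re-synthesize flow more concrete, here is a minimal sketch on a one-dimensional signal. It assumes a simple moving-average denoiser and plain Gaussian grain described by a single strength value and a seed; AV1’s actual grain model is more elaborate, and all function and parameter names below are hypothetical.

```python
import random
import statistics

def box_denoise(samples, radius=2):
    """Very simple denoiser: moving average over a small window."""
    out = []
    for i in range(len(samples)):
        lo = max(0, i - radius)
        hi = min(len(samples), i + radius + 1)
        out.append(sum(samples[lo:hi]) / (hi - lo))
    return out

def encoder_side(noisy, seed=1234):
    """Split the input into a clean signal plus a compact grain description."""
    clean = box_denoise(noisy)
    residual = [n - c for n, c in zip(noisy, clean)]
    # Assumption: the grain is summarized by one strength value and a seed.
    grain_params = {"strength": statistics.pstdev(residual), "seed": seed}
    return clean, grain_params

def decoder_side(clean, grain_params):
    """Regenerate grain from the parameters and add it to the decoded signal."""
    rng = random.Random(grain_params["seed"])
    return [c + rng.gauss(0.0, grain_params["strength"]) for c in clean]

# Synthetic "grainy" input: a flat grey level with random noise on top.
source_rng = random.Random(0)
source = [100.0 + source_rng.gauss(0.0, 5.0) for _ in range(2000)]

clean, grain_params = encoder_side(source)
restored = decoder_side(clean, grain_params)
```

The restored signal is not sample-for-sample identical to the source. It only looks statistically similar, which is the point: the grain itself never has to be coded, only its description.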

The potential bandwidth savings for content providers from using this technology are enormous, especially for very “noisy” content such as old video footage that has been digitized or videos that use film grain for artistic reasons. Either way, this tool can be used to great effect and is a key benefit in AV1’s list of features.

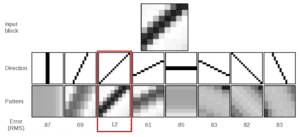

Constrained Directional Enhancement Filter

Filtering is an essential process in every video codec, as it drastically increases the perceived quality of the encoded video. It is mostly applied along the boundaries of the blocks into which each picture is divided during the compression process. AV1 contains various sets of filters, most of which are derived from existing codecs. The Constrained Directional Enhancement Filter (CDEF) is quite possibly the most impactful addition to the range of filters.

This filter essentially merges two existing filters: a directional de-ringing filter as used in the Daala video codec and the constrained low pass filter (CLPF) from the Thor video codec. CLPF filters out artifacts which stem from quantization errors and have not been corrected by the preceding de-blocking filter. The directional de-ringing filter works by recognizing edges within each block and identifying their orientation. It then conditionally applies a directional low-pass filter along those edges, resulting in a smoother picture and an increase in perceived quality.

CDEF merges the two filters and works by analyzing the contents of each block and smoothing out artifacts along edges. The search for the filtering parameters (direction and variance) is carried out on the decoder’s end, on the already reconstructed picture, so these parameters do not have to be transmitted in the bitstream. The encoder performs the same filtering itself in order to obtain the correct reference frames. Since the filtering operation can be run on the consumer’s hardware, required network bandwidth can be reduced and with it the traffic load.
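The two ingredients described above, finding the dominant edge direction of a block and then filtering in a constrained way, can be sketched roughly as follows. This is a simplified illustration, not the codec's implementation: it assumes only four candidate directions instead of the finer set a real codec would test, and the `constrain` helper is modeled on the published CDEF design but should be treated as an approximation. All names are hypothetical.

```python
from collections import defaultdict

# Each direction maps a pixel position to the index of the line it lies on.
DIRECTIONS = {
    "horizontal": lambda r, c: r,
    "vertical": lambda r, c: c,
    "diagonal_down": lambda r, c: r - c,  # top-left to bottom-right
    "diagonal_up": lambda r, c: r + c,    # bottom-left to top-right
}

def find_direction(block):
    """Pick the direction along which pixel values vary the least."""
    best_name, best_error = None, None
    for name, line_of in DIRECTIONS.items():
        lines = defaultdict(list)
        for r, row in enumerate(block):
            for c, value in enumerate(row):
                lines[line_of(r, c)].append(value)
        # Squared error if every line were replaced by its own mean value.
        error = 0.0
        for values in lines.values():
            mean = sum(values) / len(values)
            error += sum((v - mean) ** 2 for v in values)
        if best_error is None or error < best_error:
            best_name, best_error = name, error
    return best_name

def constrain(diff, strength, damping):
    """Limit a neighbor's influence: large differences are treated as real
    edges and contribute nothing, small ones are smoothed away."""
    if strength == 0:
        return 0
    shift = max(0, damping - (strength.bit_length() - 1))
    magnitude = min(abs(diff), max(0, strength - (abs(diff) >> shift)))
    return magnitude if diff >= 0 else -magnitude

stripes = [[100 if r % 2 else 0] * 8 for r in range(8)]
diagonal_edge = [[100 if r > c else 0 for c in range(8)] for r in range(8)]
```

With these inputs, `find_direction(stripes)` selects the horizontal direction and `find_direction(diagonal_edge)` the top-left to bottom-right diagonal. In `constrain`, a small difference such as 2 passes through unchanged at strength 4, while a difference of 100 is suppressed entirely, which is what keeps the filter from blurring genuine edges.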

Warped motion and global motion compensation

Predicting and compensating for motion is an important principle in video compression, as it removes redundant information that would otherwise be part of the bitstream and thus increase the amount of data being transmitted. Motion compensation works by recognizing and anticipating movement patterns within frames and blocks and, in turn, reducing the information that has to be coded to the required minimum.

Warped motion compensation is a particularly interesting technique, as it anticipates movement patterns in three dimensions, predicting spatial movement trajectories within videos. Based on the calculated predictions, redundant information is identified and omitted in the coding process, resulting in a significant reduction to the required load of data.

Global motion compensation predicts motion for an entire frame (e.g. camera movement or zooming sequences) and uses this analysis to limit the amount of information transmitted in the bitstream. Basically, information is condensed to statements like “move all blocks right” or “pan this block”, thus saving data.
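As a rough illustration of why a frame-level motion model saves data, the sketch below derives a motion vector for every 64×64 block of a 720p frame from a single set of six affine parameters. The parameter layout, the example values and the function names are assumptions made for this example and are not taken from the AV1 bitstream syntax.

```python
def apply_affine(params, x, y):
    """Map a position through a six-parameter affine model."""
    a, b, tx, c, d, ty = params
    return (a * x + b * y + tx, c * x + d * y + ty)

def block_motion_vectors(params, width, height, block=64):
    """Derive one motion vector per block from the frame-level model."""
    vectors = {}
    for by in range(0, height, block):
        for bx in range(0, width, block):
            cx, cy = bx + block / 2, by + block / 2  # block center
            px, py = apply_affine(params, cx, cy)
            vectors[(bx, by)] = (px - cx, py - cy)
    return vectors

# "Move all blocks right": identity plus a horizontal shift of 8 pixels.
PAN_RIGHT = (1.0, 0.0, 8.0, 0.0, 1.0, 0.0)
# Zoom by 25% around the center (640, 360) of a 1280x720 frame.
ZOOM = (1.25, 0.0, -160.0, 0.0, 1.25, -90.0)

pan_vectors = block_motion_vectors(PAN_RIGHT, 1280, 720)
zoom_vectors = block_motion_vectors(ZOOM, 1280, 720)
```

For the pan, all 240 blocks end up with the same vector, and for the zoom each block gets a different one, yet in both cases only six numbers describe the motion of the whole frame.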

Motion compensation algorithms have been studied and used for a while, but only on a two-dimensional level. AV1 marks the first time that non-planar motion compensation has been implemented in a video codec. Due to the constant increase in processing power of consumer devices, this technique is now ready to see use in mass-market applications.

These techniques work extremely well for predicting large area movements, like background motion or camera movements. Additionally, they can handle consistent backgrounds and color schemes very effectively, which is one of the reasons why animated videos tend to deliver great encoding results, even with very high levels of compression.

Increased coding unit size (up to 128×128)

As video resolutions keep getting larger, an increase in block size is an effective way to scale the compression process along with high-resolution content. Each frame is partitioned into individual coding units (or blocks), which are then processed individually during the coding procedure. Consequently, small resolutions like 1280×720 (720p) can be divided into blocks with an individual size of 64×64 quite easily, whereas the same block size is less practical for large resolutions like 7680×4320 (8K UHD). This is why AV1 raises the maximum coding unit size to 128×128.
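A quick back-of-the-envelope calculation shows the effect of the larger unit size. The sketch below simply counts how many top-level coding units are needed to cover a frame, rounding up at the frame edges; the function name is made up for this example.

```python
import math

def coding_units_per_frame(width, height, unit_size):
    """Number of unit_size x unit_size blocks needed to cover the frame."""
    return math.ceil(width / unit_size) * math.ceil(height / unit_size)

units_720p_64 = coding_units_per_frame(1280, 720, 64)     # 20 x 12 = 240
units_8k_64 = coding_units_per_frame(7680, 4320, 64)      # 120 x 68 = 8160
units_8k_128 = coding_units_per_frame(7680, 4320, 128)    # 60 x 34 = 2040
```

At 64×64, an 8K frame needs 34 times as many top-level units as a 720p frame. Moving to 128×128 cuts that count to a quarter.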

Non-binary arithmetic coding

This technique marks an interesting change from other current codecs like HEVC or AVC. For those, every symbol which is entered into the arithmetic coding engine has to be binary. With AV1, these symbols can also be non-binary, meaning that they can have up to 16 possible values instead of just two. The symbols are then processed by the arithmetic coding engine, which produces a binary bitstream as output. Both ends, encoder and decoder, operate using probability calculations to estimate how many output bits will be created from a given symbol. Theoretically, any given input symbol could therefore produce multiple bits or even just a fraction of a bit.
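The idea of coding non-binary symbols, and of a symbol costing only a fraction of a bit, can be illustrated with an idealized arithmetic coder. The sketch below uses exact fractions and a fixed, made-up probability model for a four-symbol alphabet. A real codec works with finite-precision integers and adapts its probabilities as it goes, so this shows the principle only.

```python
from fractions import Fraction
import math

# Hypothetical static model for a 4-symbol alphabet (probabilities sum to 1).
MODEL = {
    "A": Fraction(5, 8),
    "B": Fraction(1, 4),
    "C": Fraction(1, 16),
    "D": Fraction(1, 16),
}

def cumulative(model):
    """Assign each symbol a sub-range of [0, 1) proportional to its probability."""
    ranges, low = {}, Fraction(0)
    for sym, p in model.items():
        ranges[sym] = (low, low + p)
        low += p
    return ranges

def encode(symbols, model):
    """Narrow the interval once per symbol; return its start and final width."""
    ranges = cumulative(model)
    low, width = Fraction(0), Fraction(1)
    for s in symbols:
        s_low, s_high = ranges[s]
        low += width * s_low
        width *= s_high - s_low
    return low, width

def decode(value, count, model):
    """Recover `count` symbols from any value inside the final interval."""
    ranges = cumulative(model)
    out = []
    for _ in range(count):
        for sym, (s_low, s_high) in ranges.items():
            if s_low <= value < s_high:
                out.append(sym)
                value = (value - s_low) / (s_high - s_low)
                break
    return "".join(out)

def ideal_bits(probability):
    """Information content of one symbol in bits."""
    return -math.log2(float(probability))

low, width = encode("AABA", MODEL)
decoded = decode(low, 4, MODEL)
```

Each symbol is handled in a single interval-narrowing step, regardless of how many values the alphabet has. A likely symbol such as `A` costs less than one bit (`ideal_bits(MODEL["A"])` is about 0.68), while a rare one such as `C` costs four.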

Although coding a single non-binary symbol is more complex than coding a single binary one, because multiple values are combined into one symbol, it is still less work than coding the same information one bit per symbol. One major benefit lies in the possibility of processing more information per clock cycle. As the coding steps have to be performed serially, non-binary coding achieves improvements by letting each serial step handle a symbol that carries several bits’ worth of information.
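The throughput argument can be put into numbers with a small worked example. It assumes that every binary decision costs one serial coding step and that a non-binary symbol would otherwise be split into a fixed number of binary decisions; real binarization schemes are variable-length, so the figures are only indicative. The function name is made up for this example.

```python
import math

def serial_steps(num_symbols, alphabet_size, multi_symbol):
    """Count serial coding steps needed for a run of symbols."""
    if multi_symbol:
        # One step per symbol, no matter how many values it can take.
        return num_symbols
    # Fixed-length binarization: ceil(log2(alphabet_size)) decisions per symbol.
    return num_symbols * math.ceil(math.log2(alphabet_size))

binary_steps = serial_steps(1000, 8, multi_symbol=False)  # 3 decisions each
multi_steps = serial_steps(1000, 8, multi_symbol=True)    # 1 step each
```

Under these assumptions, 1000 eight-valued symbols take 3000 serial steps when coded as binary decisions, but only 1000 when each symbol is coded in one go.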

Where is AV1 headed?

As the final stages of development are winding down, it seems not too far-fetched to assume that AV1 is going to have a massive impact on the world of video streaming in the near future. User demand for high-quality video streaming is already very real, and the coming generation of high-resolution mobile devices and VR-enabled gadgets is about to push its way into mainstream availability. Seeing new technologies emerge and pave their way into our everyday lives is a fascinating process, and AV1 will likely shape up to be a major factor in structuring our digital realities going forward.

AV1 is the next generation video codec and is on track to deliver a 30% improvement over VP9 & HEVC – Learn about Bitmovin and AV1