For online prediction in live streaming applications, selecting low-complexity features is critical to ensure low-latency video streaming without disruptions. For each frame, video, or video segment, two features are determined: the average texture energy and the average gradient of the texture energy. A DCT-based energy function determines the block-wise texture of each frame, and the spatial and temporal features of the video or video segment are derived from it. The Video Complexity Analyzer (VCA) project was launched in 2022. It aims to provide the most efficient, highest-performance prediction of the spatial and temporal complexity of each frame, video, or video segment, which can be used in applications such as shot/scene detection and online per-title encoding.
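To make the idea of a DCT-based, block-wise texture measure concrete, here is a minimal sketch in plain Python. It is not VCA's implementation: the exact energy function and any coefficient weighting are not given in this article, so the sketch simply sums the absolute AC coefficients of each block. The function names, the 4x4 block size, and the toy frames are all assumptions chosen for illustration.

```python
import math

def dct2(block):
    """Orthonormal 2-D DCT-II of a square block given as a list of rows."""
    n = len(block)
    scale = [math.sqrt(1.0 / n)] + [math.sqrt(2.0 / n)] * (n - 1)
    cos = [[math.cos(math.pi * (2 * x + 1) * u / (2 * n)) for x in range(n)]
           for u in range(n)]
    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += block[x][y] * cos[u][x] * cos[v][y]
            out[u][v] = scale[u] * scale[v] * total
    return out

def block_energy(block):
    """Assumed texture measure: sum of |AC coefficients| (the DC term is skipped)."""
    coeffs = dct2(block)
    n = len(block)
    return sum(abs(coeffs[u][v])
               for u in range(n) for v in range(n) if (u, v) != (0, 0))

def frame_energies(frame, size=4):
    """Energy of every non-overlapping size x size block (partial edge blocks ignored)."""
    rows, cols = len(frame), len(frame[0])
    return [block_energy([row[c:c + size] for row in frame[r:r + size]])
            for r in range(0, rows - size + 1, size)
            for c in range(0, cols - size + 1, size)]

def spatial_complexity(energies):
    """Average block texture energy of one frame."""
    return sum(energies) / len(energies)

def temporal_complexity(current, previous):
    """Average absolute change in block energy between two consecutive frames."""
    return sum(abs(a - b) for a, b in zip(current, previous)) / len(current)

# Toy 8x8 luma frames: a flat grey frame and a checkerboard.
flat = [[128] * 8 for _ in range(8)]
checker = [[255 if (x + y) % 2 else 0 for y in range(8)] for x in range(8)]
e_flat = frame_energies(flat)
e_checker = frame_energies(checker)
print(spatial_complexity(e_flat), spatial_complexity(e_checker))
print(temporal_complexity(e_checker, e_flat))
```

The flat frame has essentially no AC energy, while the checkerboard has a lot, and switching from one to the other produces a large frame-to-frame difference. This is the intuition behind using texture energy for spatial complexity and its change over time for temporal complexity.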

What is the Video Complexity Analyzer

The primary objective of the Video Complexity Analyzer is to become the best spatial and temporal complexity predictor for every frame, video segment, and video, helping to predict encoding parameters for applications such as scene-cut detection and online per-title encoding. VCA uses x86 SIMD and multi-threading optimizations to achieve high performance. While VCA is primarily designed as a video complexity analyzer library, a command-line executable is provided to facilitate testing and development. We expect VCA to be used in many leading video encoding solutions in the coming years.

VCA is available as an open-source library, published under the GPLv3 license. For more details, please see the software's online documentation. The source code is hosted on GitHub at https://github.com/cd-athena/VCA.

Heatmap of spatial complexity (E)

Heatmap of temporal complexity (h)

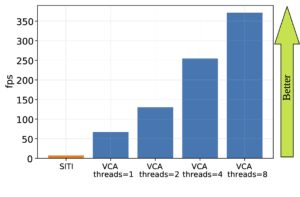

A performance comparison (frames analyzed per second) between VCA, with different levels of threading enabled, and Spatial Information/Temporal Information (SITI) [GitHub] is shown below.

How to Build a Video Complexity Analyzer

The software is tested mostly on Linux and Windows. Some prerequisite software must be installed before compiling. The steps to build the project on Linux and Windows are explained below.

Prerequisites

- CMake version 3.13 or higher.

- Git.

- C++ compiler with C++11 support

- NASM assembly compiler (for x86 SIMD support)

The following C++11 compilers are known to work:

- Visual Studio 2015 or later

- GCC 4.8 or later

- Clang 3.3 or later

Execute Build

The following commands will check out the project source code and create a directory called ‘build’ where the compiler output will be placed. CMake is then used for generating build files and compiling the VCA binaries.

    $ git clone https://github.com/cd-athena/VCA.git
    $ cd VCA
    $ mkdir build
    $ cd build
    $ cmake ../
    $ cmake --build .

This will create VCA binaries in the VCA/build/source/apps/ folder.

Command-Line Options

General

Displaying Help Text:

--help, -h

Displaying version details:

--version, -v

Logging/Statistic Options

--complexity-csv <filename>

Write the spatial complexity (E), temporal complexity (h), epsilon, and brightness (L) statistics to a comma-separated values (CSV) log file. The file is created if it doesn’t already exist. The following statistics are available:

- POC: Picture Order Count, the display order of the frames

- E: Spatial complexity of the frame

- h: Temporal complexity of the frame

- epsilon: Gradient of the temporal complexity of the frame

- L: Brightness of the frame

Unless the --no-chroma option is used, the following chroma statistics are also available:

- avgU: Average U chroma component of the frame

- energyU: Average U chroma texture of the frame

- avgV: Average V chroma component of the frame

- energyV: Average V chroma texture of the frame
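As a rough illustration of how such a log might be consumed, the sketch below parses a complexity CSV with Python's standard csv module. It assumes that the file has a header row whose column names match the statistic names listed above and that fields are separated by commas; neither is confirmed here, so check an actual log first. The rows in SAMPLE are placeholders, not real VCA measurements.

```python
import csv
import io

# Placeholder rows for illustration only; these are NOT real VCA measurements.
# The header names and the comma delimiter are assumptions about the log layout.
SAMPLE = """POC,E,h,epsilon,L
0,50.0,0.0,0.0,100.0
1,52.0,10.0,0.5,101.0
2,48.0,30.0,0.2,99.0
"""

def read_complexity(stream):
    """Parse a complexity log into a list of dicts, one per frame."""
    reader = csv.DictReader(stream, skipinitialspace=True)
    rows = []
    for record in reader:
        row = {}
        for key, value in record.items():
            if key is None or value in (None, ""):
                continue  # ignore stray or empty fields
            name = key.strip()
            # POC is a frame counter; every other statistic is numeric.
            row[name] = int(value) if name == "POC" else float(value)
        rows.append(row)
    return rows

def mean(rows, column):
    """Average of one statistic over all parsed frames."""
    return sum(row[column] for row in rows) / len(rows)

rows = read_complexity(io.StringIO(SAMPLE))
print(len(rows), mean(rows, "E"), mean(rows, "h"))
```

To read a real log, pass an open file instead of the in-memory sample, for example `read_complexity(open("clip.csv", newline=""))`, where `clip.csv` is a hypothetical file name given to --complexity-csv.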

--shot-csv <filename>

Write the shot ID and the first POC of every shot to a comma-separated values (CSV) log file. The file is created if it doesn’t already exist.

--yuvview-stats <filename>

Write the per block results (L, E, h) to a stats file that can be visualized using YUView.

Performance Options

--no-chroma

Disable analysis of chroma planes (which is enabled by default).

--no-simd

By default, the Video Complexity Analyzer uses all detected CPU SIMD architectures. This option disables that detection.

--threads <integer>

Specify the number of threads to use. Default: 0 (autodetect).

Input/Output

--input <filename>

Input filename. Raw YUV and Y4M are supported. Pass stdin as the filename to read from standard input. For example, piping input from ffmpeg works like this:

ffmpeg.exe -i Sintel.2010.1080p.mkv -f yuv4mpegpipe - | vca.exe --input stdin

--y4m

Parse the input stream as YUV4MPEG2 regardless of file extension. Primarily intended for use with stdin. This option is implied if the input filename has a “.y4m” extension.

--input-depth <integer>

Bit-depth of input file or stream. Any value between 8 and 16. Default is 8. For Y4M files, this is read from the Y4M header.

--input-res <wxh>

Source picture size [w x h]. For Y4M files, this is read from the Y4M header.

--input-csp <integer or string>

Chroma Subsampling. 4:0:0(monochrome), 4:2:0, 4:2:2, and 4:4:4 are supported. For Y4M files, this is read from the Y4M header.

--input-fps <double>

The framerate of the input. For Y4M files, this is read from the Y4M header.

--skip <integer>

Number of frames to skip at start of input file. Default 0.

--frames, -f <integer>

Number of frames of input sequence to be analyzed. Default 0 (all).

Analyzer Configuration

--block-size <8/16/32>

Size of the non-overlapping blocks used to determine the E, h features. Default: 32.

--min-thresh <double>

Minimum threshold of epsilon for shot detection.

--max-thresh <double>

Maximum threshold of epsilon for shot detection.
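When VCA is run from a script, it can help to assemble the command line programmatically. The sketch below builds an argument list using only options documented above; it does not run anything. The helper name `build_vca_command`, the binary name `vca`, and the file names are assumptions made for illustration.

```python
import shlex

def build_vca_command(input_path, complexity_csv, *, binary="vca",
                      resolution=None, fps=None, depth=None,
                      block_size=None, threads=None, no_chroma=False):
    """Return an argument list for one VCA run (nothing is executed here)."""
    cmd = [binary, "--input", input_path]
    if resolution is not None:
        width, height = resolution
        cmd += ["--input-res", f"{width}x{height}"]  # documented as <wxh>
    if fps is not None:
        cmd += ["--input-fps", str(fps)]
    if depth is not None:
        if not 8 <= depth <= 16:
            raise ValueError("input depth must be between 8 and 16")
        cmd += ["--input-depth", str(depth)]
    if block_size is not None:
        if block_size not in (8, 16, 32):
            raise ValueError("block size must be 8, 16, or 32")
        cmd += ["--block-size", str(block_size)]
    if threads is not None:
        cmd += ["--threads", str(threads)]
    if no_chroma:
        cmd.append("--no-chroma")
    cmd += ["--complexity-csv", complexity_csv]
    return cmd

# Raw YUV input carries no header, so resolution and frame rate are passed explicitly.
cmd = build_vca_command("clip.yuv", "clip.csv",
                        resolution=(1920, 1080), fps=30, block_size=16)
print(shlex.join(cmd))
```

The returned list can be handed to `subprocess.run(cmd, check=True)` once the binary is on the PATH. For Y4M input the resolution, frame rate, and bit depth arguments can be omitted, since those values are read from the Y4M header.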

Using the VCA API

VCA is written primarily in C++ and x86 assembly language. The API is wholly defined within vcaLib.h in the source/lib/ folder of our source tree. All of the functions, variables, and enumerations meant to be used by the end user are present in this header.

vca_analyzer *vca_analyzer_open(vca_param param)

Create a new analyzer handle. All parameters from vca_param are copied. The returned pointer is then passed to all of the functions pertaining to this analyzer. Since vca_param is copied internally, the user may release their copy after allocating the analyzer. Changes made to their copy of the param structure have no effect on the analyzer after it has been allocated.

vca_result vca_analyzer_push(vca_analyzer *enc, vca_frame *frame)

Push a frame to the analyzer and start the analysis. Note that only the pointers are copied; no ownership of the memory is transferred to the library. The caller must make sure that the pointers remain valid until the frame has been analyzed. Once the result for a frame has been pulled, the library no longer uses the pointers. This call may block until there is a slot available to work on. The number of frames that will be processed in parallel can be set using nrFrameThreads.

bool vca_result_available(vca_analyzer *enc)

Check if a result is available to pull.

vca_result vca_analyzer_pull_frame_result(vca_analyzer *enc, vca_frame_results *result)

Pull a result from the analyzer. This may block until a result is available. Use vca_result_available() if you want to only check if a result is ready.

void vca_analyzer_close(vca_analyzer *enc)

Finally, the analyzer must be closed in order to free all of its resources. An analyzer that has been flushed cannot be restarted and reused. Once vca_analyzer_close() has been called, the analyzer handle must be discarded.

Try out the Video Complexity Analyzer for yourself, along with other exciting innovations, at https://athena.itec.aau.at/ and bitmovin.com.