Welcome back to Bitmovin’s Encoding Excellence series! Our first post covered why video quality metrics matter. The second post provided an “objective” introduction to the top three objective video quality metrics (see what we did there?). The third post in our Encoding Excellence series is an exploration of quality measurement methods (and their limitations) through the eyes of Bitmovin’s solutions architect and “encoding guru”, Richard Fliam, who questioned specific use cases of quality metrics at the 2019 Demuxed conference in San Francisco, CA. In his research and presentation, Richard set out to “break Video Multimethod Assessment Fusion (VMAF)”.

Before we begin, we do have a shameless plug for the next content piece of our Encoding Excellence series – our free-to-attend webinar, Objective Video Quality: Measurements, Methods, and Best Practices.

Human Perception vs A. “Eye”/Machine Learning

The human perception system, our eyes, has around two trillion synapses, about 2000x the number of parameters of today’s largest machine learning models. Because of this vast difference in capability, human perception is incredibly complex (if not impossible) to condense into a fool-proof quality assurance algorithm. As a result, current solutions often fall short of their promise to deliver perceived quality at scale. And while current approaches to objective quality assessment aim to mimic human perception, is the outcome good enough to be a valid substitute, or can scores like VMAF be misleading?

In his talk, Richard “attacks” many methodologies, but also points out why most existing metrics are still valid – as long as they’re reviewed and utilized from an objective point of view.

Richard’s Top 4 Objectionable Quality Checks

Through his keynote Richard identifies four necessary steps (in no defined order) to objectively check the accuracy of an individual quality metric. These steps are defined below:

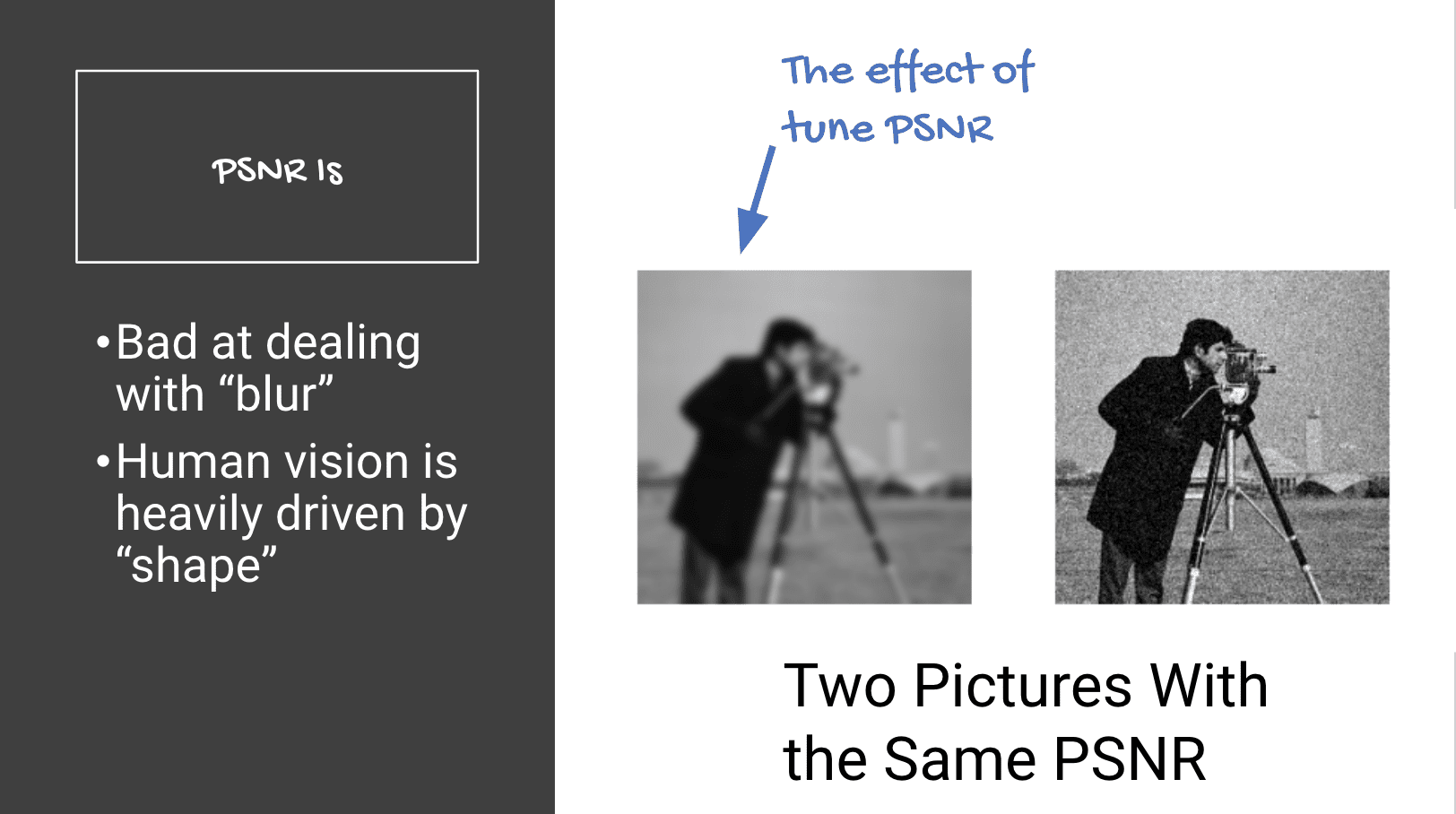

- Check the outputs of your algorithm with real people (at least the very bad ones and suspiciously good ones) – The average human can identify that the stubble on an actor’s cheeks is far more pronounced than dirt on the driveway, but to metrics like the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) they are the same thing.

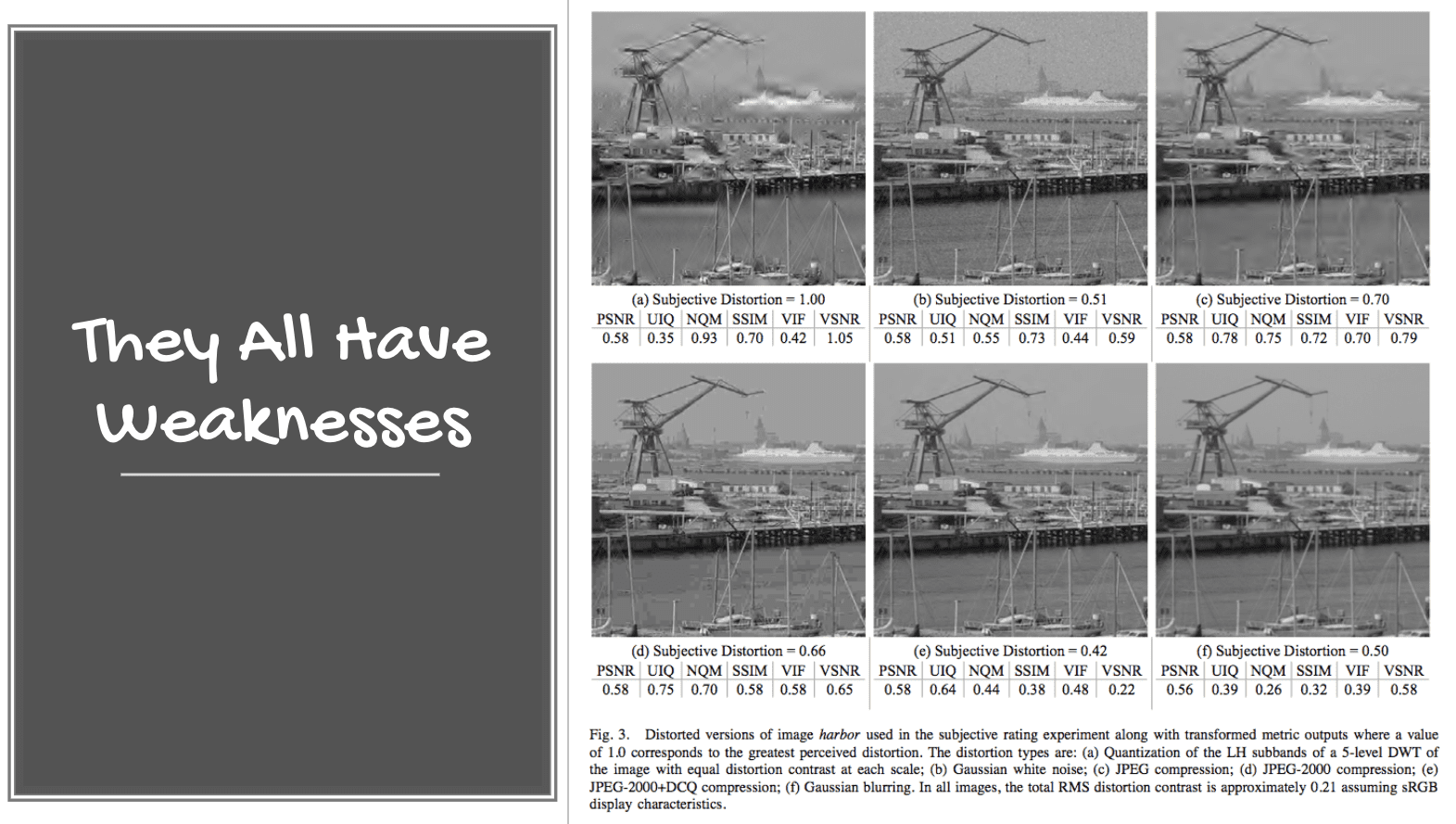

- Know your content and know the weaknesses – where the metric does not perform well.

For example: PSNR deals very poorly with “blurring” and a host of other artifacts

- Ensure you’re comparing the same frames. Account for rate changes, deinterlacing, changes in colorspace or pixel format and filters. Not all encoders do that.

- Last but not least, build better, quicker tools to compute objective video quality metrics.

At the end of the day, it is too time consuming and impractical to have humans watch thousands of possible encodes of a video.
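To make the first check above a little more concrete, here is a minimal sketch of why PSNR cannot tell stubble from driveway dirt. PSNR is computed from the mean squared error alone, so any two distortions with the same squared error get the same score, no matter where the error sits in the picture. The pixel values and the `psnr` helper below are made up for illustration and are not taken from Richard’s talk.

```python
import math

def psnr(reference, distorted, max_value=255):
    """PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(distorted):
        raise ValueError("frames must have the same number of pixels")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames
    return 10 * math.log10(max_value ** 2 / mse)

# Hypothetical 16-pixel "frame" of flat grey.
reference = [100] * 16

# Error spread thinly over every pixel: each one is off by 2.
spread = [102] * 16

# The same total squared error packed into a single pixel: off by 8
# (8^2 = 64 = 16 * 2^2), which a viewer might notice far more easily.
concentrated = [108] + [100] * 15

psnr_spread = psnr(reference, spread)
psnr_concentrated = psnr(reference, concentrated)

print(psnr_spread == psnr_concentrated)  # True: PSNR sees no difference
```

Whether the concentrated error lands on an actor’s face or on the background is exactly the kind of thing a human reviewer catches and this formula does not.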

Image artifacts, blockiness, and blurriness do not make for a good quality of experience, so there is no way around monitoring for these ailments. As we’ve seen, there is a compelling argument to be made for why objective measurements are not perfect replacements for subjective visual testing, and why a holistic approach is preferred when possible. However, with the mass amounts of stream data, countless devices on which to assure quality, and time-sensitive operations that encoding engineers are faced with, it is vital that they are equipped with a clear understanding of objective quality measurements and deploy them strategically.

Breaking VMAF

So, how did Richard attempt to break VMAF? We’ve broken down his steps and take-aways below:

- For PSNR I can blur the image.

- For SSIM I can muck with textures and gradients, but VMAF tried really hard to prevent that. I ran the VMAF scores on some videos, and sure enough, the VMAF was the same between both algorithms. But by watching them, it was pretty clear one was better than the other.

- Now, I looked into how VMAF was computed. And one thing that stood out is that VMAF uses a simple arithmetic mean to express the “average” quality of all the frames in a video or sequence.

- So I plotted violin graphs on top of our RD charts – distribution flipped on its side and mirrored.

- Then I added our new two-pass and three-pass encodes for these videos, and something interesting and clear about these sequences appeared.

- We had the same average, but a far better distribution of frames. The “glut” of frames with very high quality was smaller, and so was the glut of frames with very low quality.

- It’s actually pretty intuitive that people prefer a video sequence where there are more frames at the “average” than split between high and low.
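The point about the arithmetic mean is easy to reproduce with a few numbers. The sketch below uses two invented sets of per-frame scores (not data from the talk) that share the same mean but have very different distributions, and then reports a few alternative summaries. The choice of summaries here (harmonic mean, standard deviation, 10th percentile, minimum) is our own assumption about what might be useful, not a description of how VMAF itself pools frames.

```python
import math
import statistics

def nearest_rank_percentile(values, percent):
    """Nearest-rank percentile: smallest value with at least percent% at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(percent * len(ordered) / 100))
    return ordered[rank - 1]

def summarize(frame_scores):
    """Summaries that expose what a plain arithmetic mean hides."""
    return {
        "mean": statistics.fmean(frame_scores),
        "harmonic_mean": statistics.harmonic_mean(frame_scores),
        "stdev": statistics.pstdev(frame_scores),
        "p10": nearest_rank_percentile(frame_scores, 10),
        "min": min(frame_scores),
    }

# Hypothetical per-frame scores on a 0-100 scale.
steady = [78, 79, 80, 80, 80, 80, 80, 80, 81, 82]  # most frames near the average
swingy = [55, 60, 65, 75, 80, 85, 90, 95, 97, 98]  # split between low and high

steady_summary = summarize(steady)
swingy_summary = summarize(swingy)

for name, summary in (("steady", steady_summary), ("swingy", swingy_summary)):
    print(name, {key: round(value, 2) for key, value in summary.items()})
```

Both sequences average exactly 80, yet the second one spends several frames in the 50s and 60s. The spread, the low percentile, and the minimum all flag that, while the mean alone does not.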

All metrics share a common goal: they want to order videos by perceived quality, what mathematicians call a “total order”. For example, if video sequence A_1 is better than A_2 and A_2 is better than A_3, then A_1 is better than A_3. Most also want to include equality, which is why we talk about “just noticeable differences”.
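As a rough illustration of ordering with equality, the sketch below compares two scores and calls them equal when they differ by less than one just noticeable difference. The `decide` function is hypothetical, and the default threshold of 6 points is only a placeholder assumption. Pick a value that your own subjective testing supports.

```python
def decide(score_a, score_b, jnd=6.0):
    """Return which encode is better, or "equal" if they are within one JND.

    The default jnd of 6.0 is an assumed placeholder, not a measured value.
    """
    difference = score_a - score_b
    if abs(difference) < jnd:
        return "equal"
    return "A" if difference > 0 else "B"

print(decide(93.0, 80.0))  # A
print(decide(84.0, 80.0))  # equal
print(decide(80.0, 88.0))  # B
```

One caveat worth keeping in mind: equality defined by a tolerance does not chain. With the threshold above, 80 and 84 are “equal” and 84 and 88 are “equal”, yet 88 beats 80, so the result is not a strict total order in the mathematical sense.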

Broken VMAF Learnings

So what’s the takeaway? Deciding something is better than something else is, well, a decision. Now sometimes we phrase these as prediction problems (predict on a scale of 0 to 100 what the perceived quality of the video is), but when it comes to encoding it’s a decision problem: which of these two methods is better, or are they equal? You’ll notice that deciding the quality order of two videos relies almost entirely on subjective methods, in addition to the objective metrics.

Click here for the full slide-deck from Richard’s Presentation

Future of Video Quality Metrics

Knowing what we do now, Bitmovin is putting together a webinar featuring thought leaders in video quality assessment to dive deep into the science behind objective quality scoring. The main topics include when quality metrics are necessary and best practices to follow when measuring objective quality.

Watch our webinar discussion on-demand, Objective Video Quality: Measurements, Methods, and Best Practices. The session features Sean McCarthy, Bitmovin’s technical product marketing manager, in a discussion with Carlos Bacquet, Solutions Architect at SSIMWAVE, and Jan Ozer, a leading expert on H.264 encoding for live and on-demand production.

For other great readings check out the following links:

- Jan Ozer’s Per-Title Encoding Analysis [Whitepaper]

- Per-Title Encoding [Whitepaper]

- Get Ready for a Multi-Codec World [Blog]

- Fun with Container Formats [Blog – Series]