This article was co-authored by Dalet’s Solutions Architect, Brett Chambers, and Bitmovin’s Solution Director APAC, Adrian Britton.

Not all content is created equal, especially when you’re a publishing house and your content arrives from multiple sources. But that doesn’t mean that your viewer’s quality of experience (QoE) needs to suffer as a result.

In this blog post, we discuss some of the typical failure modes that we see in mezzanine content, and how the combination of Dalet’s Ooyala Flex Media Platform and Bitmovin’s Encoding joint solution can help mitigate them with technical metadata, black bar removal, deinterlacing, colour correction, and more! We cover some of the top issues that affect a viewer’s quality of experience and how our solutions can help your organization resolve them.

Quality Matters – Factors that affect viewer experience

There are countless factors that can negatively affect your subscribers’ experience. Luckily, most of them can be resolved with a simple combination of accurate metadata and specific dashboard inputs. For your convenience, we’ve organized the six factors whose correction will make the most positive impact on your workflow:

Black Bar removal

Perhaps one of the most noticeable factors affecting a viewer’s Quality of Experience is the pesky black bars that appear at the sides of the picture (pillarboxing) or above and below it (letterboxing) during playback. These bars occur when an asset with a non-conforming aspect ratio is introduced somewhere in the workflow. A mezzanine asset would not typically have them, but the likelihood increases where content has moved through upstream systems. Typically, Bitmovin’s tools initiate black bar removal if either an asset’s technical metadata requires it or the Ooyala Flex ingest path determines it is necessary.

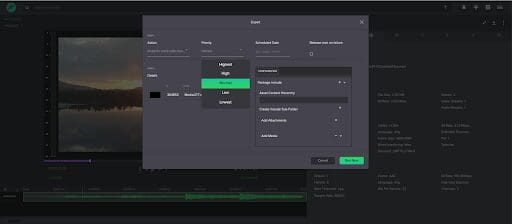

For correction, Bitmovin’s encoder contains a cropping filter, which can be controlled through the Ooyala Flex Media Platform to remove the required number of pixels or percentage of the frame, thus correcting the image. The filter’s parameters are listed in the API reference below:

[bg_collapse view=”button-blue” color=”#f7f5f5″ icon=”eye” expand_text=”Show Bitmovin API Reference” collapse_text=”Hide Bitmovin API Reference” ]

CropFilter{

| id* | string readOnly: true example: cb90b80c-8867-4e3b-8479-174aa2843f62 Id of the resource |

| name | string example: Name of the resource Name of the resource. Can be freely chosen by the user. |

| description | string example: Description of the resource Description of the resource. Can be freely chosen by the user. |

| createdAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Creation timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| modifiedAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Modified timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| customData | string writeOnly: true additionalProperties: OrderedMap { “type”: “object” } User-specific meta data. This can hold anything. |

| left | integer example: 0 Amount of pixels which will be cropped off the input video from the left side. |

| right | integer example: 0 Amount of pixels which will be cropped off the input video from the right side. |

| top | integer example: 0 Amount of pixels which will be cropped off the input video from the top. |

| bottom | integer example: 0 Amount of pixels which will be cropped off the input video from the bottom. |

| unit | PositionUnit string title: PositionUnit default: PIXELS Enum: Array [ 2 ] |

[/bg_collapse]
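
As a rough illustration of how crop values might be derived, the sketch below turns a detected active picture area into the `left`, `right`, `top`, and `bottom` fields from the CropFilter reference. The function name, the assumption that ingest supplies the active area, and the use of a plain dictionary as the payload are all hypothetical; only the field names and the `PIXELS` unit come from the reference.

```python
def crop_settings(frame_w, frame_h, active_x, active_y, active_w, active_h):
    """Build CropFilter-style fields from a detected active picture area.

    (active_x, active_y) is the top-left corner of the picture inside the
    frame. Hypothetical helper for illustration, not a Bitmovin SDK call.
    """
    left = active_x
    top = active_y
    right = frame_w - (active_x + active_w)
    bottom = frame_h - (active_y + active_h)
    if min(left, top, right, bottom) < 0:
        raise ValueError("active area extends outside the frame")
    return {"left": left, "right": right, "top": top, "bottom": bottom,
            "unit": "PIXELS"}


# A 1440x1080 picture pillarboxed inside a 1920x1080 frame.
pillarboxed = crop_settings(1920, 1080, 240, 0, 1440, 1080)
print(pillarboxed)
```

In this example the bars are 240 pixels wide on each side, so only `left` and `right` are non-zero.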

Aspect ratio issues can arise in the Pixel Aspect Ratio (PAR), the Storage Aspect Ratio (SAR), and/or the Display Aspect Ratio (DAR). The three are defined as follows:

- PAR: the aspect ratio of the video pixels themselves. For 4:3 576i, it’s 59:54 or 1.093.

- SAR: the dimensions of the video frame, expressed as a ratio. For a 576i video, this is 5:4 (720×576).

- DAR: the aspect ratio the video should be played back at. For SD video, this is 4:3 or, for anamorphic widescreen content, 16:9.
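
The three ratios are linked by DAR = SAR × PAR, which makes a simple consistency check possible. The sketch below is a minimal illustration; the function names and the 3% tolerance are assumptions, chosen because broadcast-derived pixel aspect ratios such as 59:54 treat only part of the 720-pixel line as active picture, so the product over the full frame lands close to, but not exactly on, the nominal display ratio.

```python
from fractions import Fraction


def display_aspect_ratio(width, height, par):
    """DAR = SAR x PAR, where SAR is the stored frame's width:height."""
    return Fraction(width, height) * par


def looks_squashed(width, height, par, expected_dar, tolerance=0.03):
    """True when the computed DAR is off by more than `tolerance` (relative)."""
    dar = display_aspect_ratio(width, height, par)
    return abs(dar - expected_dar) / expected_dar > tolerance


# 720x576 stored frame with a 64:45 pixel aspect ratio displays at 16:9.
print(display_aspect_ratio(720, 576, Fraction(64, 45)))  # 16/9

# The same frame flagged as square-pixel would play back squashed at 5:4.
print(looks_squashed(720, 576, Fraction(1, 1), Fraction(16, 9)))  # True
```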

Technical metadata detection within Ooyala Flex will, in most cases, correctly determine these aspect ratio characteristics of a video asset, allowing the encoding profile in Bitmovin’s dashboard to be adjusted automatically. Getting any of them wrong, whether in detection, encoding, or playback, results in squashed video playback.

Colour-Correction

Bitmovin’s encoder contains a powerful set of color space, color range, and color primary manipulation logic. While not a full color-grading solution, the encoding workflow can easily be modified to correct for color issues commonly found in mezzanine formats.

[bg_collapse view=”button-blue” color=”#f7f5f5″ icon=”eye” expand_text=”Show Bitmovin API Reference” collapse_text=”Hide Bitmovin API Reference” ]

ColorConfig{


[/bg_collapse]

Deinterlacing

Deinterlacing can drastically improve the visual quality of your fast-moving content. When manipulating this aspect of the encode, it’s important to have a full view of the input asset before deciding to trigger these ‘filters’, whether for content from a particular source, for a particular technical metadata characteristic, or as part of a human-driven QA process.

[bg_collapse view=”button-blue” color=”#f7f5f5″ icon=”eye” expand_text=”Show Bitmovin API Reference” collapse_text=”Hide Bitmovin API Reference” ]

DeinterlaceFilter{

| id* | string readOnly: true example: cb90b80c-8867-4e3b-8479-174aa2843f62 Id of the resource |

| name | string example: Name of the resource Name of the resource. Can be freely chosen by the user. |

| description | string example: Description of the resource Description of the resource. Can be freely chosen by the user. |

| createdAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Creation timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| modifiedAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Modified timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| customData | string writeOnly: true additionalProperties: OrderedMap { “type”: “object” } User-specific meta data. This can hold anything. |

| parity | PictureFieldParity string title: PictureFieldParity default: AUTO Specifies which field of an interlaced frame is assumed to be the first one Enum: Array [ 3 ] |

| mode | DeinterlaceMode string title: DeinterlaceMode default: FRAME Specifies the method how fields are converted to frames Enum: Array [ 4 ] |

| frameSelectionMode | DeinterlaceFrameSelectionMode string title: DeinterlaceFrameSelectionMode default: ALL Specifies which frames to deinterlace Enum: Array [ 2 ] |

| autoEnable | DeinterlaceAutoEnable string title: DeinterlaceAutoEnable default: ALWAYS_ON Specifies if the deinterlace filter should be applied unconditionally or only on demand. Enum: Array [ 3 ] |

[/bg_collapse]
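
One way to picture the metadata-driven trigger is a small decision function like the sketch below. It assumes the technical metadata exposes a scan type as the string `interlaced` or `progressive`, which is a hypothetical convention; the returned fields and values are simply the defaults listed in the DeinterlaceFilter reference, expressed as a plain dictionary rather than an SDK object.

```python
def deinterlace_settings(scan_type):
    """Return DeinterlaceFilter-style fields for interlaced sources, else None.

    `scan_type` is a hypothetical technical-metadata value.
    """
    if scan_type.strip().lower() != "interlaced":
        return None  # progressive content needs no deinterlacing
    return {
        "parity": "AUTO",
        "mode": "FRAME",
        "frameSelectionMode": "ALL",
        "autoEnable": "ALWAYS_ON",
    }


print(deinterlace_settings("Interlaced"))
print(deinterlace_settings("progressive"))
```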

Conformance (FPS)

Source content is unlikely to always be captured at the same frame rate. It can range from the 29.97 FPS typical of US-sourced content to capture rates of 25 or 50 FPS, or even faster! Although the normal role of the encoder is to conform all inputs to a given frame rate, especially when ad insertion is used, there are also use cases where certain content coming through certain workflows needs to maintain a different (and higher) value.

[bg_collapse view=”button-blue” color=”#f7f5f5″ icon=”eye” expand_text=”Show Bitmovin API Reference” collapse_text=”Hide Bitmovin API Reference” ]

ConformFilter{

| id* | string readOnly: true example: cb90b80c-8867-4e3b-8479-174aa2843f62 Id of the resource |

| name | string example: Name of the resource Name of the resource. Can be freely chosen by the user. |

| description | string example: Description of the resource Description of the resource. Can be freely chosen by the user. |

| createdAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Creation timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| modifiedAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Modified timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| customData | string writeOnly: true additionalProperties: OrderedMap { “type”: “object” } User-specific meta data. This can hold anything. |

| targetFps | number($double) example: 25 The FPS the input should be changed to. |

[/bg_collapse]
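
The conform decision can be sketched as follows. The function, its `keep_native` flag for workflows that must preserve the source rate, and the default target of 25 FPS are assumptions for illustration; `targetFps` is the only field taken from the ConformFilter reference.

```python
import math


def conform_settings(source_fps, target_fps=25.0, keep_native=False):
    """Return ConformFilter-style fields, or None when no conform is needed.

    Hypothetical helper: `keep_native` models workflows that must keep the
    source frame rate.
    """
    if keep_native or math.isclose(source_fps, target_fps, rel_tol=1e-3):
        return None
    return {"targetFps": target_fps}


print(conform_settings(29.97))                    # conformed to 25 FPS
print(conform_settings(25.0))                     # already at target
print(conform_settings(50.0, keep_native=True))   # left at its native rate
```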

Post/Precuts

Rarely will content start and end exactly where you want it to, whether because of color bars, lead-in titles, or simply a long-form recording that runs too long. Being able to clip X seconds off the beginning and end the asset at Y seconds can avoid the costly exercise of offlining content for craft editing. The Ooyala Flex-powered workflow can control clip-in and clip-out parameters, automating ingest where required.
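
The arithmetic behind a precut and postcut is small but easy to get wrong, so here is a minimal sketch. It assumes clip points are expressed in seconds from the start of the source; the function and parameter names are hypothetical and do not correspond to a specific Ooyala Flex or Bitmovin API.

```python
def trim_window(duration, clip_in=0.0, clip_out=None):
    """Return (offset, length) in seconds for the part of the asset to keep."""
    end = duration if clip_out is None else clip_out
    if not 0 <= clip_in < end <= duration:
        raise ValueError("need 0 <= clip_in < clip_out <= duration")
    return clip_in, end - clip_in


# Drop 30 s of color bars at the head and 30 s of run-out from a one-hour file.
offset, length = trim_window(3600.0, clip_in=30.0, clip_out=3570.0)
print(offset, length)  # 30.0 3540.0
```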

Audio-Levelling

Some content is loud, some content is quiet. The audio-filter allows all or selected content to have its volume adjusted, thereby creating a uniform viewer experience.

[bg_collapse view=”button-blue” color=”#f7f5f5″ icon=”eye” expand_text=”Show Bitmovin API Reference” collapse_text=”Hide Bitmovin API Reference” ]

AudioVolumeFilter{

| id* | string readOnly: true example: cb90b80c-8867-4e3b-8479-174aa2843f62 Id of the resource |

| name | string example: Name of the resource Name of the resource. Can be freely chosen by the user. |

| description | string example: Description of the resource Description of the resource. Can be freely chosen by the user. |

| createdAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Creation timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| modifiedAt | string($date-time) readOnly: true example: 2016-06-25T20:09:23.69Z Modified timestamp formatted in UTC: YYYY-MM-DDThh:mm:ssZ |

| customData | string writeOnly: true additionalProperties: OrderedMap { “type”: “object” } User-specific meta data. This can hold anything. |

| volume* | number($double) example: 77.7 Audio volume value |

| unit* | AudioVolumeUnit string title: AudioVolumeUnit The unit in which the audio volume should be changed Enum: [ PERCENT, DB ] |

| format | AudioVolumeFormat string title: AudioVolumeFormat Audio volume format Enum: [ U8, S16, S32, U8P, S16P, S32P, S64, S64P, FLT, FLTP, NONE, DBL, DBLP ] |

[/bg_collapse]
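
A levelling rule could look something like the sketch below, which computes the gain needed to move a measured level to a target level. Several things here are assumptions: that a loudness measurement is available from upstream analysis, that a `volume` given in `DB` is interpreted as a relative gain, and the 12 dB safety clamp. Only the `volume` and `unit` field names and the `DB` enum value come from the AudioVolumeFilter reference.

```python
def volume_settings(measured_db, target_db, max_gain_db=12.0):
    """Return AudioVolumeFilter-style fields that move measured_db to target_db.

    Assumes `volume` in DB acts as a relative gain (not confirmed by the
    reference). The gain is clamped to +/- max_gain_db.
    """
    gain = target_db - measured_db
    gain = max(-max_gain_db, min(max_gain_db, gain))
    return {"volume": round(gain, 1), "unit": "DB"}


print(volume_settings(-30.0, -23.0))  # quiet source: boost by 7 dB
print(volume_settings(-10.0, -23.0))  # loud source: cut, clamped to 12 dB
```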

How will you overcome Quality of Experience issues?

To summarize, there are six key factors that often affect a user’s QoE: black bars, poor color, incorrect handling of interlaced content, non-optimized FPS, unwanted lead-in or run-out content, and inappropriate audio volume. So, how do you overcome the most glaring of them, aspect ratio-related issues?

How to detect Aspect Ratio-related Quality of Experience issues

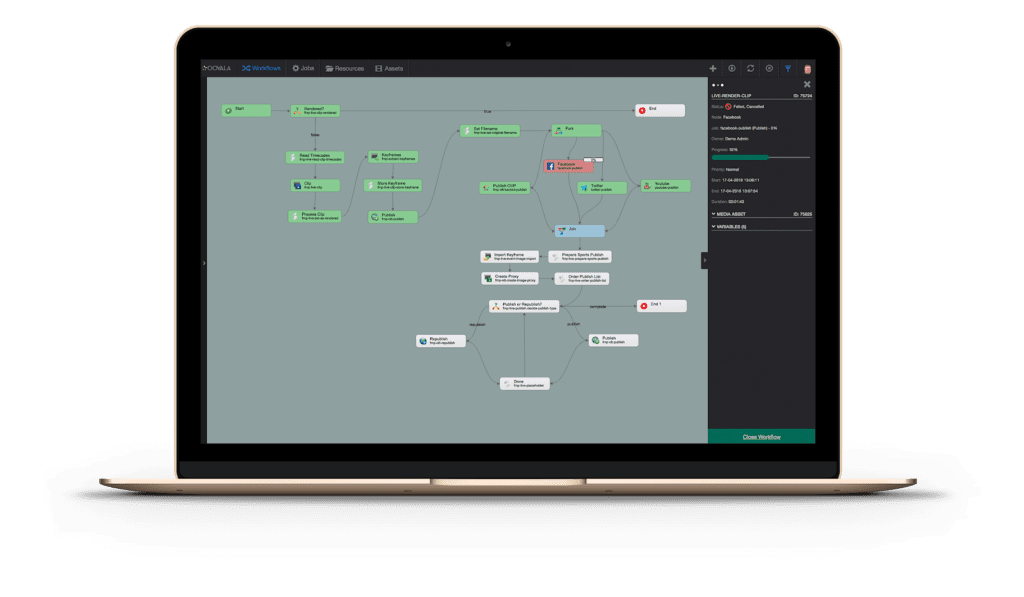

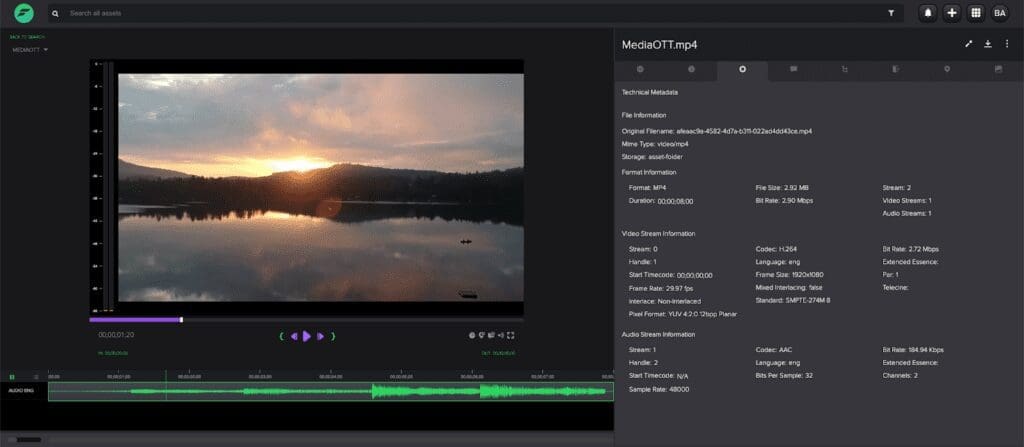

When it comes to content preparation, everything really starts with the Ooyala Flex Media Platform extracting technical metadata from incoming media. This critical step extracts information such as format, frame rate, frame size, colour space, DAR, PAR, codecs, bitrates, codec-specific details (e.g. GOP structures, profiles, audio sample rates and bit depths), audio track counts, timecode start time, and duration.

This wealth of technical information stored as metadata against the media asset can be easily utilised throughout any workflow orchestration process, enabling the construction of bespoke validation criteria, ensuring the media is compliant. If validation happens to fail, workflow orchestration can take steps to rectify any issue automatically.
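
To make the idea of bespoke validation criteria concrete, the sketch below checks an asset’s technical metadata against a set of allowed values per field. The metadata keys, the rule format, and the function itself are hypothetical and are not the Ooyala Flex data model; they only illustrate the pattern of validating and then reporting what needs to be rectified.

```python
def validate(metadata, rules):
    """Return a list of failures; an empty list means the asset is compliant.

    `rules` maps a metadata field name to the set of values it may take.
    """
    failures = []
    for field, allowed in rules.items():
        value = metadata.get(field)
        if value not in allowed:
            failures.append(
                f"{field}: got {value!r}, expected one of {sorted(allowed)}"
            )
    return failures


rules = {"frame_rate": {25.0, 50.0}, "display_aspect_ratio": {"16:9"}}
asset = {"frame_rate": 29.97, "display_aspect_ratio": "16:9"}
problems = validate(asset, rules)
print(problems)
```

A workflow step could then route each failure to the matching corrective filter, for example a frame rate failure to a conform step.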

The evaluation of the technical side of our media is a great start, but what about validation of the media essence?

The Ooyala Flex Media Platform incorporates a number of tools that allow clients to effortlessly integrate with external products. A perfect example is integration with automated quality control products such as Dalet AmberFin. Ooyala Flex can analyse automated QC reports from external products, and workflow orchestration can then take remedial action to correct any QC issues and resubmit the corrected media to automated QC to ensure compliance.

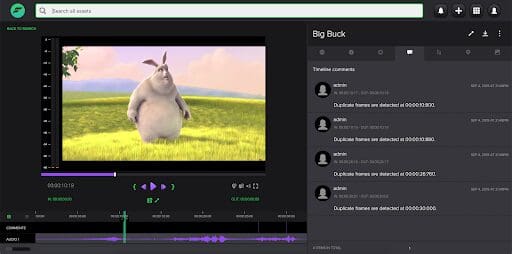

In addition to automated QC, we also have the ability to create tasks for users to perform manual QC. Manual QC tasks can even be augmented from a previous automated QC run by highlighting ‘soft errors’ as temporal metadata annotations in a timeline ‘Review’. This orchestrated usage of human intervention helps ensure that our workflow does not stall, deadlines are met, and most importantly, quality does not suffer.

For more information on the combined Dalet + Bitmovin capabilities, you can watch the recording of our most recent joint webinar here, and if you’d like to further discuss how to best address your Quality of Experience issues, do reach out.