Leveraging microservices and containers to create an advanced, scalable and powerful video encoding service.

Video encoding is a complex, compute-intensive task that is well suited to the cloud, because the cloud can scale compute-intensive operations quickly. In other words, you get massive computing power that scales fast enough to meet the heavy requirements of encoding software.

Until recently, however, most cloud solutions have operated as large, monolithic services that require one or more discrete servers (or an entire virtualized operating system for each task). This leads to relatively high operating costs and large, integrated code bases that can be difficult to maintain. Furthermore, many commercial cloud services only allow access to their product through a single, bulky API, which makes it difficult for customers to use and fine-tune individual services or to manage the cost structure so that the service scales with their needs.

Microservices and containers to the rescue

Enter microservices and containers: these programming patterns and deployment structures allow developers to harness the power of cloud computing more cost-effectively while reducing code base complexity. Further, customers of previously monolithic commercial cloud services can now use containerized versions to take better advantage of their existing computing resources, whether on-premise or in the cloud.

But what exactly are microservices and containers? And how do they help ship high-quality, reliable code?

What’s the difference between microservices and containers?

Let’s start with some definitions. “Microservices” is a software architecture pattern built on fine-grained, portable services and lightweight protocols to ensure a high level of modularity and flexibility. This architecture works well with automated testing and automated deployment, because each microservice can be modified individually, enabling updates and rollouts with virtually no downtime.

Containers, on the other hand, are lightweight, stand-alone, executable packages of software that include everything needed to run it: code, runtime, system tools, system libraries, and settings. Microservices are often packaged and run inside containers. A container provides everything a given service needs to run, but isolates the software from other parts of the operating system and from other services running on the same machine. This enables processing resources to be allocated precisely at the operating-system level, so that processes can be managed efficiently. Docker is a popular software containerization platform, and Bitmovin uses it to implement a “chunk-based” approach to video encoding in a cloud environment.
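To make the “chunk-based” idea more concrete, here is a minimal Python sketch, not Bitmovin's actual implementation. It assumes a video can be cut into fixed-length time ranges that are encoded independently and then returned in timeline order. The names `Chunk`, `split_into_chunks`, `encode_chunk` and `encode_video` are hypothetical, and `encode_chunk` is only a placeholder for the work a containerized encoder would do.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    index: int
    start_s: float  # chunk start time in seconds
    end_s: float    # chunk end time in seconds


def split_into_chunks(duration_s: float, chunk_s: float) -> list[Chunk]:
    """Cut a video timeline into consecutive chunks of at most chunk_s seconds."""
    if duration_s <= 0 or chunk_s <= 0:
        raise ValueError("duration_s and chunk_s must be positive")
    chunks = []
    start = 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)
        chunks.append(Chunk(len(chunks), start, end))
        start = end
    return chunks


def encode_chunk(chunk: Chunk) -> str:
    """Placeholder (hypothetical) for the work one encoder container would do."""
    return f"segment_{chunk.index:04d}[{chunk.start_s:.1f}-{chunk.end_s:.1f}s]"


def encode_video(duration_s: float, chunk_s: float, workers: int) -> list[str]:
    """Encode all chunks in parallel and return the segments in timeline order."""
    chunks = split_into_chunks(duration_s, chunk_s)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so segments come back in timeline order.
        return list(pool.map(encode_chunk, chunks))


if __name__ == "__main__":
    for segment in encode_video(duration_s=10.0, chunk_s=4.0, workers=3):
        print(segment)
```

In this sketch a thread pool stands in for a pool of encoder containers. Because the chunks are independent, adding workers shortens the wall-clock time without changing the result.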

Why are microservices and containers useful?

Compared to monolithic architectures, where code and library dependencies are more static and the whole construct grows with expanding complexity, a software architecture based on microservices offers two major benefits:

- Deployment: Monolithic structures tend to grow too large to keep up with short release cycles. Microservices allow for shorter release cycles because every service can be updated individually. This way, updates can be deployed almost instantly and without service interruptions.

- Engineering culture: Microservices enable development teams to work relatively independently and in different locations. Teams can be organized more loosely, and tasks and responsibilities can be split among them more effectively.

In a software architecture based on microservices, only APIs are exposed externally, which means that the processes behind the API can be organized and arranged freely. For example, at Bitmovin we built our video encoding platform as a service-based architecture from the very beginning. Each encoder is a microservice that can be scaled horizontally and has been designed to provide a high degree of portability and flexibility.

Containers as an alternative to monolithic third-party cloud services

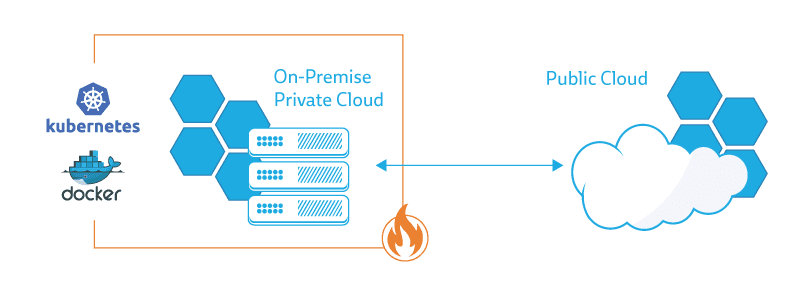

One of the newest trends making use of the container model is to run third-party applications in your own server environment, deployed as neatly packaged containers. Instead of accessing a cloud-based service through a single API and paying a volume-based usage fee, you can pay a fixed software license fee and use your existing server infrastructure, resulting in potentially significant savings.
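Whether the fixed-fee model actually saves money depends on volume. The following sketch shows the break-even arithmetic under simplifying assumptions: the usage fee is linear in encoded minutes, and `fixed_monthly_cost` bundles the license fee with whatever it costs to run your own servers. All names and figures are hypothetical and are not real prices.

```python
def break_even_minutes(fixed_monthly_cost: float, fee_per_minute: float) -> float:
    """Monthly volume above which a fixed fee beats usage-based pricing."""
    if fee_per_minute <= 0:
        raise ValueError("fee_per_minute must be positive")
    return fixed_monthly_cost / fee_per_minute


def cheaper_option(minutes: float, fixed_monthly_cost: float,
                   fee_per_minute: float) -> str:
    """Compare both pricing models for a given monthly encoding volume."""
    usage_cost = minutes * fee_per_minute
    return "fixed license" if fixed_monthly_cost < usage_cost else "usage-based"


if __name__ == "__main__":
    # Hypothetical figures, chosen only to illustrate the calculation.
    fixed, fee = 1000.0, 0.5
    print(break_even_minutes(fixed, fee))
    print(cheaper_option(5000, fixed, fee))
    print(cheaper_option(500, fixed, fee))
```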

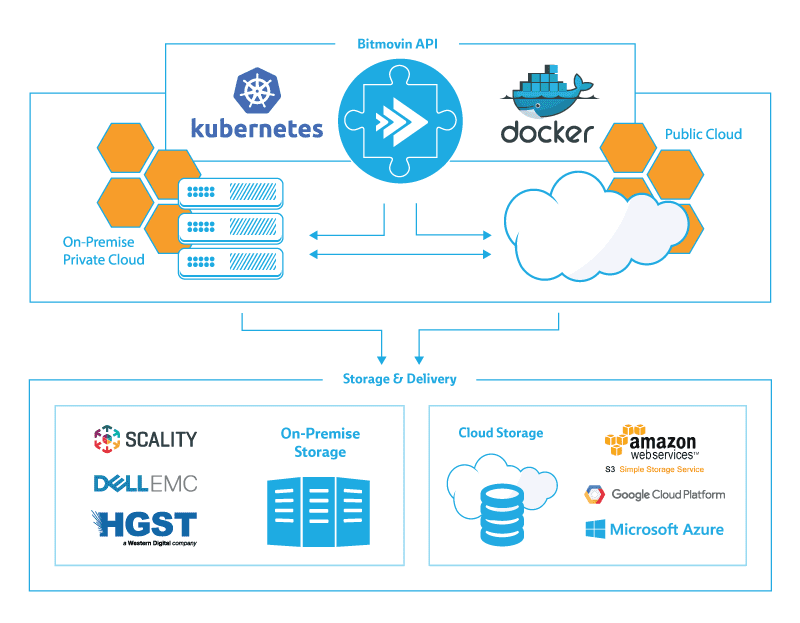

For example, Bitmovin’s encoding services are available both as a cloud-based API and as a downloadable containerized package that can run on your own infrastructure. This allows you to choose the right mix of deployment environments for your needs, ranging from a shared cloud like Amazon Web Services to on-premise with your own hardware, or even a hybrid combination of both.

Another important advantage of containerized deployment of third-party services is that they are by design capable of running virtually anywhere: on commodity hardware, on heterogeneous clusters, on homogeneous clusters, on high-performing instances or on weaker instances.

Using containerized encoding instances with on-premise commodity hardware provides compelling cost savings compared to traditional dedicated broadcast encoding hardware. Further, the ability to instantly scale to the cloud for peak loads and to repurpose local hardware for other uses provides a killer combination of benefits, finally delivering on the promise of a virtualized infrastructure for broadcast video workflows.

Deploying containerized microservices

Whether you’re managing your own custom containerized microservices or using containers from third-party vendors, all of them are managed by an orchestrator, a piece of software that allocates resources and handles scheduling. Almost any Docker orchestrator is sufficient, as all of them are supported. Bitmovin uses Kubernetes as the orchestrator for its own infrastructure, so we are familiar with the system and know it inside and out.
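As an illustration of how an orchestrator is told which resources a containerized encoder needs, the sketch below builds a Kubernetes `batch/v1` Job manifest as a plain Python dictionary and prints it as JSON. The job name, image name and resource figures are hypothetical placeholders, and this is not Bitmovin's own configuration.

```python
import json


def encoding_job_manifest(name: str, image: str, cpu: str, memory: str) -> dict:
    """Build a Kubernetes batch/v1 Job manifest for a single encoding task."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "spec": {
                    # Leave retries to the Job controller, not the container.
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "encoder",
                            "image": image,
                            # Requests guide scheduling; limits cap usage.
                            "resources": {
                                "requests": {"cpu": cpu, "memory": memory},
                                "limits": {"cpu": cpu, "memory": memory},
                            },
                        }
                    ],
                }
            }
        },
    }


if __name__ == "__main__":
    manifest = encoding_job_manifest(
        name="encode-chunk-0001",                  # hypothetical job name
        image="registry.example.com/encoder:1.0",  # hypothetical image
        cpu="4",
        memory="8Gi",
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be saved to a file and submitted with `kubectl apply -f`.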

Prioritizing encoding jobs is another major challenge, one that affects live content distribution as well as video on demand. Again, these requirements create an environment in which cloud-based video encoding using orchestrated containers really shines: encoding jobs can be prioritized between instances, jobs can be queued or stopped, and resources can be shifted. This makes it possible to speed up individual encoding jobs temporarily. For example, if live content has to be processed practically in real time, other encoding jobs can be stopped to free up resources for the higher-priority job.
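The queue, stop and shift behaviour described above can be sketched as a toy scheduler. This is an illustrative model under simple assumptions (a fixed number of encoder slots, lower numbers meaning higher priority, unique job names), not how Kubernetes or Bitmovin's platform schedules work internally. `Job` and `Scheduler` are hypothetical names.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    priority: int  # lower number = more urgent


class Scheduler:
    """Toy scheduler with a fixed number of encoder slots and preemption."""

    def __init__(self, slots: int) -> None:
        if slots < 1:
            raise ValueError("slots must be at least 1")
        self.slots = slots
        self.running: list[Job] = []
        self._queue: list[tuple[int, int, Job]] = []  # min-heap
        self._seq = itertools.count()  # tie-breaker keeps FIFO order

    def _enqueue(self, job: Job) -> None:
        heapq.heappush(self._queue, (job.priority, next(self._seq), job))

    def submit(self, job: Job) -> None:
        if len(self.running) < self.slots:
            self.running.append(job)
            return
        # All slots are busy: find the least urgent running job.
        victim = max(self.running, key=lambda j: j.priority)
        if job.priority < victim.priority:
            # Preempt: stop the victim, requeue it, run the urgent job.
            self.running.remove(victim)
            self._enqueue(victim)
            self.running.append(job)
        else:
            self._enqueue(job)

    def finish(self, job: Job) -> None:
        """Free a slot and start the most urgent queued job, if any."""
        self.running.remove(job)
        if self._queue:
            self.running.append(heapq.heappop(self._queue)[2])

    def queued(self) -> list[str]:
        return [entry[2].name for entry in sorted(self._queue)]


if __name__ == "__main__":
    scheduler = Scheduler(slots=2)
    scheduler.submit(Job("vod-a", priority=5))
    scheduler.submit(Job("vod-b", priority=5))
    live = Job("live-event", priority=0)
    scheduler.submit(live)  # preempts one of the VoD jobs
    print([job.name for job in scheduler.running], scheduler.queued())
    scheduler.finish(live)  # the stopped VoD job resumes
    print([job.name for job in scheduler.running], scheduler.queued())
```

A real orchestrator would also need to checkpoint or restart the stopped job. The sketch only tracks which jobs hold a slot.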

You can configure your orchestration system together with your specific cloud environment to strike a balance between cost efficiency and accelerated encoding. Resources can be freed up as soon as a time-critical encoding job has finished. Big cloud providers are recognizing the need for on-demand resource booking and flexible pricing structures and are adjusting accordingly, for example Amazon Web Services with its Spot Instances and Google with its preemptible VM instances.
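The trade-off between cost and speed can be approximated with a small calculation. The sketch below assumes perfectly parallel work and ignores the time lost when a spot or preemptible instance is reclaimed, so it is an optimistic estimate. The function name and all rates are hypothetical and do not reflect real provider prices.

```python
def encoding_estimate(work_instance_hours: float, instances: int,
                      on_demand_rate: float, spot_rate: float,
                      spot_fraction: float) -> tuple[float, float]:
    """Return (wall_clock_hours, cost) for an encoding workload.

    work_instance_hours: total work, in hours on a single instance.
    spot_fraction: share of the work run on spot/preemptible capacity (0..1).
    """
    if instances < 1:
        raise ValueError("instances must be at least 1")
    if not 0.0 <= spot_fraction <= 1.0:
        raise ValueError("spot_fraction must be between 0 and 1")
    # Assumption: work divides evenly across instances.
    wall_clock_hours = work_instance_hours / instances
    blended_rate = (spot_fraction * spot_rate
                    + (1.0 - spot_fraction) * on_demand_rate)
    cost = work_instance_hours * blended_rate
    return wall_clock_hours, cost


if __name__ == "__main__":
    # Hypothetical rates per instance-hour, for illustration only.
    for fraction in (0.0, 0.5, 1.0):
        hours, cost = encoding_estimate(100.0, 10, 1.00, 0.30, fraction)
        print(f"spot share {fraction:.0%}: {hours:.1f} h, cost {cost:.2f}")
```

Under these assumptions, adding instances shortens the wall-clock time without changing the cost, while a larger spot share lowers the cost at the risk of interruptions that the model does not capture.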

Closing

Containerization of microservices is a truly groundbreaking application delivery mechanism, finally allowing content publishers to realize the long-promised dream of video infrastructure virtualization in a cost-effective and efficient manner. It’s about time you started thinking inside the box: containers are going to be your friend.

We’re here to help as you plan your next generation online video workflow. Use us and abuse us for both our video and cloud infrastructure technical geekery.

Resources:

https://bitmovin.com/cool-new-video-tools-five-encoding-advancements-coming-av1/

http://www.businessinsider.de/amazon-web-services-is-battling-microsoft-azure-and-google-cloud-2017-10?r=UK&IR=T

https://bitmovin.com/containerized-video-encoding-will-change-video-landscape/

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-spot-instances.html

https://cloud.google.com/compute/docs/instances/preemptible