NVIDIA GTC Video Streaming Workflow Highlights

NVIDIA GTC (GPU Technology Conference) is an annual conference with training and exhibitions covering all aspects of accelerated computing with GPUs (graphics processing units). GTC 2024 was held in March with the tagline “The Conference for the Era of AI,” and, as expected, generative AI was a major focus this year. Several other emerging applications of AI were also featured, including advanced robotics, autonomous vehicles, climate modeling, and new drug discovery.

When GPUs were first introduced in the late ‘90s, they were mainly used to render graphics for video games. As NVIDIA’s GPUs became more programmable, they also opened up new possibilities for accelerated video decoding and transcoding workflows. Even though GPUs are now more closely associated with powering AI solutions, they still play an important role in many video applications, and several sessions and announcements at GTC 2024 covered the latest video-related updates. Keep reading to learn more about the highlights.

Video technology updates

In a session titled NVIDIA GPU Video Technologies: New Features, Improvements, and Cloud APIs, Abhijit Patait, Sr. Director of Multimedia and AI at NVIDIA, shared the latest updates and new features for processing video on NVIDIA GPUs. Highlights now available in NVIDIA’s Video Codec SDK 12.2 include:

- 15% quality improvement for HEVC encoding, thanks to several enhancements:
  - UHQ (Ultra High Quality) tuning info for latency-tolerant use cases
  - Increased lookahead analysis
  - Temporal filtering for noise reduction
  - Unidirectional B-frames for latency-sensitive use cases
- Encoding 8-bit content as 10-bit for higher quality (HEVC and AV1)
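Why would encoding 8-bit content as 10-bit help? The usual explanation is that the encoder’s internal prediction and residual arithmetic then runs at higher precision, which reduces rounding error and banding. The minimal Python sketch below shows only the first step, promoting 8-bit samples into the 10-bit range with a left shift by two bits. This is a common way to do bit-depth promotion; it is an illustration, not a description of how NVENC handles this internally. The function name is hypothetical.

```python
def promote_8bit_to_10bit(samples):
    """Map 8-bit sample values (0-255) onto the 10-bit range with a left shift by 2.

    Illustrative only: an encoder performs this step internally when asked to
    encode 8-bit input as 10-bit.
    """
    out = []
    for s in samples:
        if not 0 <= s <= 255:
            raise ValueError(f"not an 8-bit sample: {s}")
        # s << 2 multiplies by 4, so 255 becomes 1020 (not 1023) and the
        # 8-bit limited range 16-235 maps exactly onto the 10-bit range 64-940
        out.append(s << 2)
    return out


print(promote_8bit_to_10bit([0, 16, 128, 235, 255]))  # prints [0, 64, 512, 940, 1020]
```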

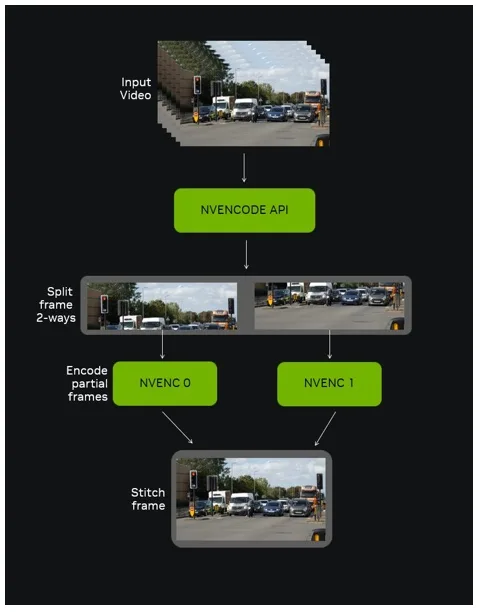

Several “Connect with Experts” sessions were also held, where attendees could meet NVIDIA subject matter experts and ask them questions. In the Building Efficient Video Transcoding Pipelines Enabling 8K session, the experts explained how multiple NVENC instances can be used in parallel for split-frame encoding to speed up 8K transcoding workflows. This topic is also covered in detail on the NVIDIA developer blog.
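To make the split-frame idea more concrete, the Python sketch below partitions a frame into horizontal strips, one per encoder instance, with strip boundaries aligned to coding-block rows. This sketch rests on several assumptions. It assumes horizontal strips and a 32-row block height (the `align` parameter). The function `split_frame_rows` is hypothetical. When split-frame encoding is enabled, NVENC performs the split itself, so this code only illustrates the partitioning concept and is not an SDK API.

```python
def split_frame_rows(height, num_strips, align=32):
    """Split a frame of `height` rows into `num_strips` horizontal strips.

    Boundaries fall on multiples of `align` rows (an assumed coding-block
    height); the last strip is clipped to the frame height. Returns a list
    of (start_row, end_row) tuples with end_row exclusive.
    """
    blocks = -(-height // align)  # number of block rows, rounded up
    if not 1 <= num_strips <= blocks:
        raise ValueError("num_strips must be between 1 and the number of block rows")
    base, extra = divmod(blocks, num_strips)
    strips, start_block = [], 0
    for i in range(num_strips):
        # spread any leftover block rows over the first strips
        count = base + (1 if i < extra else 0)
        end_block = start_block + count
        strips.append((start_block * align, min(end_block * align, height)))
        start_block = end_block
    return strips


# An 8K (7680x4320) frame split across two hypothetical encoder instances
print(split_frame_rows(4320, 2))  # prints [(0, 2176), (2176, 4320)]
```

Keeping strips roughly equal in height balances work across the encoder instances. Aligning boundaries to block rows avoids splitting a coding block between two encoders.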

VMAF-CUDA: Faster video quality analysis

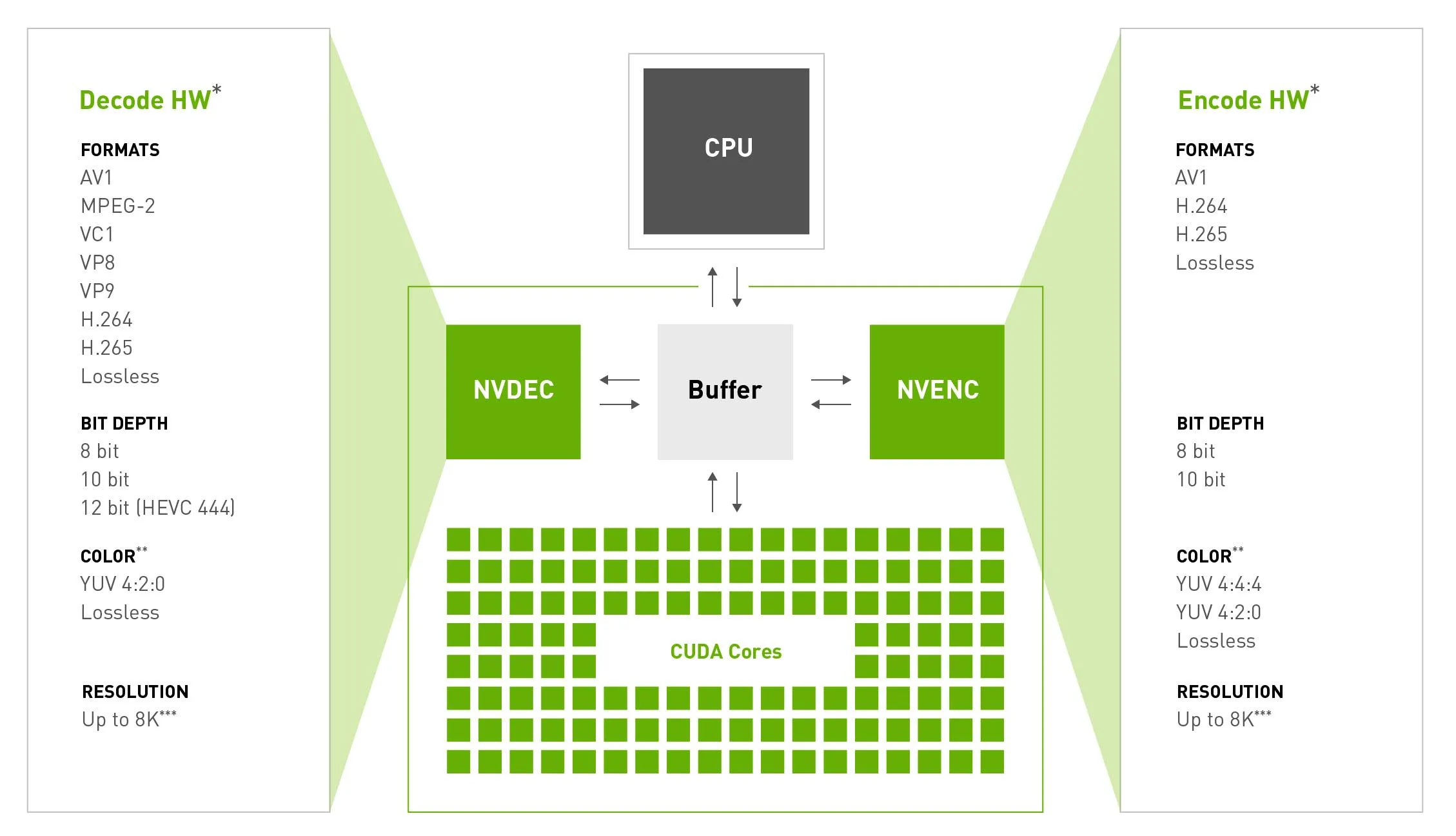

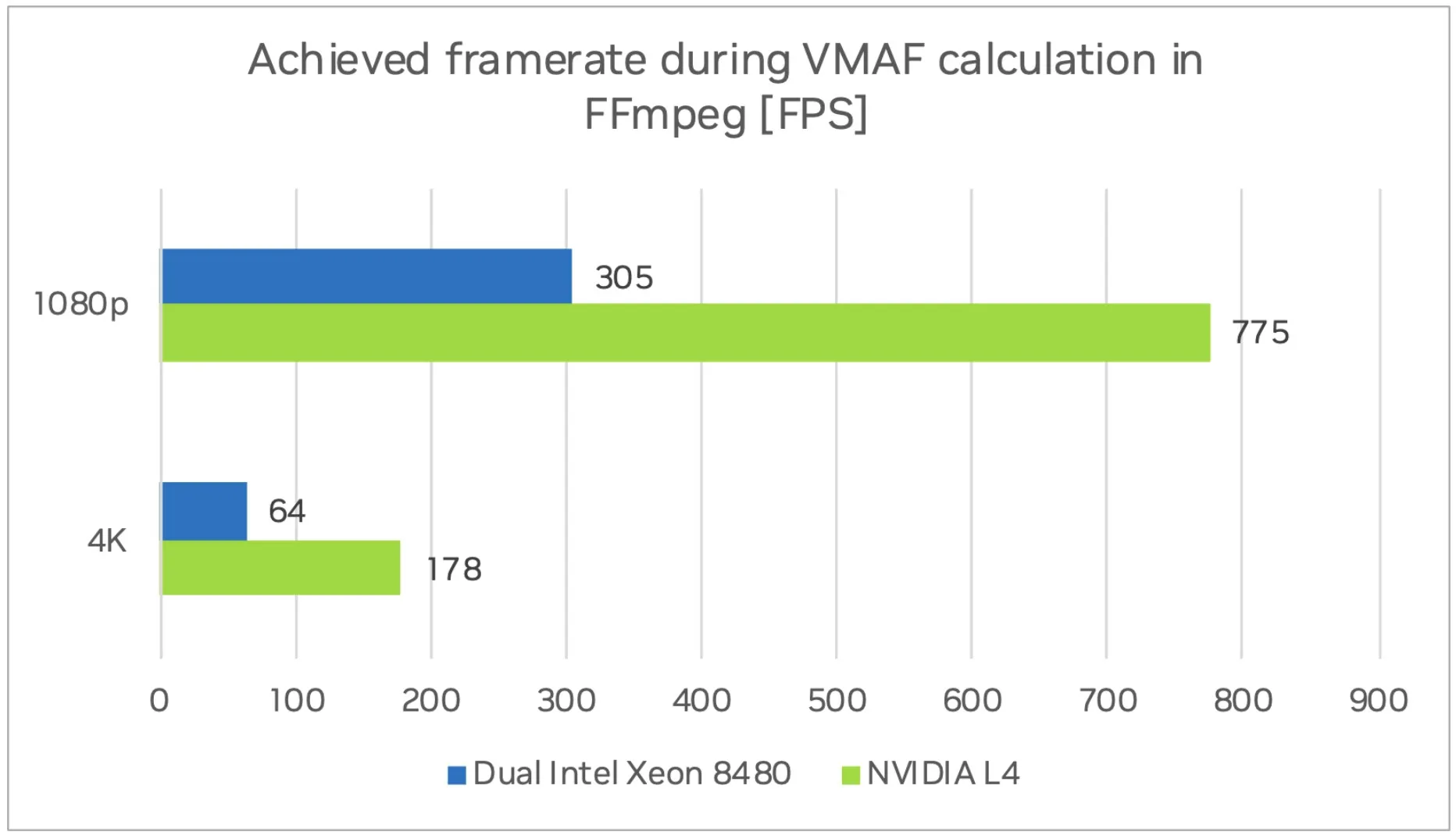

Snap and NVIDIA gave a joint presentation on a collaborative project, which also involved Netflix, to optimize and implement VMAF (Video Multi-Method Assessment Fusion) quality calculations on NVIDIA CUDA cores. CUDA (Compute Unified Device Architecture) cores are the general-purpose parallel processing units on NVIDIA GPUs. They run applications that complement the GPU’s dedicated circuits, such as its video encode and decode engines.
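VMAF produces a score for every frame, and these per-frame scores are then pooled into a single clip-level number. The expensive per-frame feature computation is the part that benefits from CUDA parallelism. Pooling is cheap. The Python sketch below shows pooling only. The function name is hypothetical. The +1 offset in the harmonic mean follows, to the best of our knowledge, the convention used in the VMAF tools, and it keeps a frame scoring 0 from causing a division by zero.

```python
from statistics import mean


def pool_vmaf(frame_scores):
    """Pool per-frame VMAF scores into clip-level summaries (illustrative sketch)."""
    if not frame_scores:
        raise ValueError("need at least one frame score")
    n = len(frame_scores)
    # harmonic mean with a +1 offset; it weights low-scoring frames more heavily
    harmonic = n / sum(1.0 / (s + 1.0) for s in frame_scores) - 1.0
    return {
        "mean": mean(frame_scores),
        "harmonic_mean": harmonic,
        "min": min(frame_scores),
    }


print(pool_vmaf([95.2, 94.8, 40.1, 96.0]))
```

The harmonic mean and minimum are useful alongside the arithmetic mean because a few badly degraded frames can be very visible to viewers even when the average looks fine.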

During the talk, the presenters explained how VMAF-CUDA enabled Snap to run its video quality assessments in parallel with the transcoding being done on NVIDIA GPUs. The new method runs several times faster and more efficiently than running VMAF on CPU instances. It was so successful that Snap now plans to move all VMAF calculations to GPUs, even for transcoding workflows that are CPU-based. The technical details have also been published in a blog post for those interested in learning more.
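The scheduling pattern of scoring each segment as soon as it is transcoded, instead of waiting for the whole asset, can be sketched in Python with two worker pools. `transcode_segment` and `score_segment` are hypothetical stubs that stand in for a GPU transcode and a VMAF-CUDA measurement. The constant score is a placeholder, not real data. This sketch illustrates the overlap only; it does not show how Snap built its pipeline.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def transcode_segment(segment_id):
    # hypothetical stand-in for transcoding one segment on the GPU
    return f"segment-{segment_id}.mp4"


def score_segment(encoded_path):
    # hypothetical stand-in for a VMAF-CUDA measurement; 95.0 is a placeholder
    return encoded_path, 95.0


def transcode_and_score(segment_ids, workers=4):
    """Overlap quality scoring with transcoding, segment by segment."""
    results = {}
    with ThreadPoolExecutor(workers) as transcode_pool, \
            ThreadPoolExecutor(workers) as score_pool:
        transcodes = [transcode_pool.submit(transcode_segment, s) for s in segment_ids]
        # submit a scoring job the moment each transcode finishes, so scoring
        # runs while later segments are still being transcoded
        scores = [score_pool.submit(score_segment, f.result())
                  for f in as_completed(transcodes)]
        for f in as_completed(scores):
            path, score = f.result()
            results[path] = score
    return results


print(transcode_and_score(range(3)))
```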

Netflix Vision AI workflows

In a joint presentation by Netflix and NVIDIA, Streamed Video Processing for Cloud-Scale Vision AI Services, the presenters shared how Netflix uses computer vision and AI at scale throughout its stack. Netflix is unusual not only because of its massive scale, but also because it is vertically integrated, with people working on every part of the chain from content creation through distribution. This creates many opportunities for using AI, along with the challenge of deploying solutions at scale.

They shared examples from:

- Pre-production: Storyboarding, Pre-visualization

- Post-production: QC, compositing and visual effects, video search

- Promotional media: Generating multi-format artwork, posters, and trailers; synopses

- Globalization/localization of content: Multi-language subtitling and dubbing

They also discussed the pros and cons of two approaches for computer vision workflows. The first is an off-the-shelf framework like NVIDIA’s DeepStream SDK, which offers ease of use and quick setup. The second is building your own modular workflow, which offers customization and efficiency in operation, using components like CV-CUDA operators for image pre- and post-processing and TensorRT for deep learning inference.
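The “build your own modular workflow” approach can be pictured as a chain of swappable stages. In the Python sketch below, every stage is a hypothetical stand-in. `preprocess` takes the place of CV-CUDA operators, `infer` takes the place of a TensorRT engine, and `postprocess` is a simple threshold filter. None of these functions use either library’s real API. The point is the structure: each stage can be replaced or tuned independently.

```python
from typing import Any, Callable, List

Stage = Callable[[Any], Any]


def compose(stages: List[Stage]) -> Stage:
    """Chain stages so the output of each one feeds the next."""
    def run(x):
        for stage in stages:
            x = stage(x)
        return x
    return run


def preprocess(frame):
    # hypothetical stand-in for CV-CUDA ops: scale 8-bit pixels to [0, 1]
    return [p / 255.0 for p in frame]


def infer(tensor):
    # hypothetical stand-in for a TensorRT engine: treat each value as a confidence
    return tensor


def postprocess(scores, threshold=0.5):
    # keep the indices whose confidence clears the threshold
    return [i for i, s in enumerate(scores) if s >= threshold]


pipeline = compose([preprocess, infer, postprocess])
print(pipeline([0, 200, 255, 64]))  # prints [1, 2]
```

With an off-the-shelf framework, this wiring is handled for you. With a modular build, you can swap `postprocess` for a custom filter without touching the other stages, which is the customization trade-off described above.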

They also went into some detail on one application of computer vision in the post-production process. They used object detection to identify when the clapperboard appeared in footage, then synced the audio with the moment it closed, with sub-frame precision. This has been a tedious, manual process for editors in the motion picture industry for decades, and now it can be automated with consistent, precise results. While this is really more on the content creation side, it’s not hard to imagine the same method being used to automate some QA/QC processes on the content processing and distribution side.
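The basic arithmetic behind clapperboard sync is to compare the time of the visual close with the time of the audio clap and express the difference in frames. The Python sketch below assumes two things. First, a hypothetical detector has already produced a per-frame “clapper closed” flag. Second, the clap is the loudest audio sample, which is a simplification that breaks whenever a louder sound is present. Because audio samples are much finer-grained than frames, the resulting offset can be fractional. The presenters’ actual sub-frame method was not described, so this is not Netflix’s approach.

```python
def first_closed_frame(closed_flags):
    """Index of the first frame the (hypothetical) detector marks as clapper-closed."""
    for i, closed in enumerate(closed_flags):
        if closed:
            return i
    raise ValueError("clapper never closes in this clip")


def loudest_sample(samples):
    """Index of the largest-magnitude audio sample, assumed to be the clap."""
    return max(range(len(samples)), key=lambda i: abs(samples[i]))


def audio_offset_frames(closed_flags, fps, samples, sample_rate):
    """Audio-minus-video offset of the clap, in (possibly fractional) frames.

    A positive result means the audio clap comes later than the visual close.
    """
    video_t = first_closed_frame(closed_flags) / fps
    audio_t = loudest_sample(samples) / sample_rate
    return (audio_t - video_t) * fps
```

For example, with 24 fps video whose clapper closes at frame 48 (2.0 s) and 48 kHz audio whose clap peaks at sample 100800 (2.1 s), the audio would need to shift by about 2.4 frames.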

Ready to try GPU encoding in the cloud?

Bitmovin VOD Encoding now supports the use of NVIDIA GPUs for accelerated video transcoding. Specifically, we use NVIDIA T4 GPUs on AWS EC2 G4dn instances, which are now available to our customers simply by using our VOD_HARDWARE_SHORTFORM preset. This enables very fast turnaround times with both the H.264 and H.265 codecs, which can make a huge difference for time-critical short-form content like sports highlights and news clips. You can get started today with a Bitmovin trial and see the results for yourself.

Related Links

Blog: GPU Acceleration for cloud video encoding

Guide: How to create an encoding using hardware acceleration

Data Sheet: Raise the bar for short form content