The 123rd MPEG meeting concluded on July 20, 2018 in Ljubljana, SI with the following topics:

- MPEG issues call for evidence on compressed representation of neural networks

- Network-Based Media Processing (NBMP) – MPEG evaluates responses to call for proposals and kicks off its technical work

- MPEG finalizes 1st edition of technical report on architectures for immersive media

- MPEG releases software for MPEG-I visual 3DoF+ objective quality assessment

- MPEG enhances ISO Base Media File Format (ISOBMFF) with new features

The corresponding press release of the 123rd MPEG meeting can be found here: http://mpeg.chiariglione.org/meetings/123

MPEG issues call for evidence on compressed representation of neural networks

Artificial intelligence (AI), including but not limited to artificial neural networks, has been adopted for a broad range of tasks in multimedia analysis, retrieval, and processing, media coding, data analytics, translation services, and many other fields. This recent success rests on the feasibility of processing much larger and more complex neural networks (deep neural networks, DNNs) than in the past, and on the availability of large-scale training data sets. As a consequence, trained neural networks contain a large number of parameters (weights), resulting in a large size (e.g., several hundred MBs). Many applications require the deployment of a particular trained network instance, potentially to a large number of devices, which may have limitations in terms of network bandwidth, processing power, and memory (e.g., mobile devices or smart cameras). Any use case in which a trained neural network (and its updates) needs to be deployed to a number of devices could thus benefit from a standard for the compressed representation of neural networks.

At its 123rd meeting, MPEG issued a Call for Evidence (CfE) for compression technology for neural networks. Submitted technologies will be evaluated in terms of compression efficiency, runtime, and memory consumption, as well as their impact on performance in three use cases: (i) visual object classification, (ii) visual feature extraction (as used in MPEG Compact Descriptors for Visual Analysis), and (iii) filters for video coding. Responses to the CfE will be analyzed on the weekend prior to and during the 124th MPEG meeting in October 2018 (Macau, CN).
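The CfE does not prescribe any compression technique. To make the size argument above concrete, here is a minimal sketch of one generic and widely used approach, uniform 8-bit scalar quantization of weights with a single scale and offset per tensor. The function names are hypothetical, the weights are synthetic, and this is not an MPEG-specified method; it only shows the kind of size/accuracy trade-off the CfE will measure.

```python
import array
import random


def quantize_uniform(weights, num_bits=8):
    """Uniformly quantize float weights to integer codes in [0, 2**num_bits - 1].

    One scale and one offset are kept for the whole tensor (hypothetical helper).
    """
    w_min, w_max = min(weights), max(weights)
    levels = (1 << num_bits) - 1
    # Avoid division by zero for a constant tensor.
    scale = (w_max - w_min) / levels if w_max > w_min else 1.0
    codes = [min(levels, max(0, round((w - w_min) / scale))) for w in weights]
    return codes, scale, w_min


def dequantize_uniform(codes, scale, offset):
    """Reconstruct approximate float weights from integer codes."""
    return [c * scale + offset for c in codes]


# Toy "layer": 10,000 Gaussian-distributed weights (synthetic, not a real network).
random.seed(0)
weights = [random.gauss(0.0, 0.05) for _ in range(10_000)]

codes, scale, offset = quantize_uniform(weights, num_bits=8)
restored = dequantize_uniform(codes, scale, offset)

fp32_bytes = len(array.array("f", weights).tobytes())  # 4 bytes per float32 weight
q_bytes = len(array.array("B", codes).tobytes()) + 2 * 4  # 1 byte per code + float32 scale/offset
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(fp32_bytes, q_bytes)  # 40000 10008
```

The roughly 4x size reduction comes at the cost of a per-weight error of at most half a quantization step; whether that error matters depends on the task, which is why the CfE evaluates performance in concrete use cases rather than compression ratio alone.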

Network-Based Media Processing (NBMP) – MPEG evaluates responses to call for proposals and kicks off its technical work

Recent developments in multimedia have brought significant innovation and disruption to the way multimedia content is created and consumed. At its 123rd meeting, MPEG analyzed the technologies submitted by eight industry leaders in response to the Call for Proposals (CfP) for Network-Based Media Processing (NBMP, MPEG-I Part 8). These technologies address advanced media processing use cases such as network stitching for virtual reality (VR) services, super-resolution for enhanced visual quality, transcoding by a mobile edge cloud, or viewport extraction for 360-degree video within the network environment. NBMP allows service providers and end users to describe media processing operations that are to be performed by entities in the network. NBMP will describe the composition of network-based media processing services out of a set of NBMP functions and make these NBMP services accessible through Application Programming Interfaces (APIs).

NBMP will support existing delivery methods such as streaming, file delivery, push-based progressive download, hybrid delivery, and multipath delivery within heterogeneous network environments. MPEG had issued the Call for Proposals (CfP) seeking technologies that allow end-user devices, which are limited in processing capabilities and power consumption, to offload certain kinds of processing to the network.

After a formal evaluation of submissions, MPEG selected three technologies as starting points for the (i) workflow, (ii) metadata, and (iii) interfaces for static and dynamically acquired NBMP. A key conclusion of the evaluation was that NBMP can significantly improve the performance and efficiency of the cloud infrastructure and media processing services.
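The idea of composing a service out of individual media functions can be pictured as a small directed acyclic graph. The toy sketch below is an assumption for illustration only: the task names, the `WORKFLOW` dictionary, and `run_workflow` are hypothetical and do not reflect the NBMP workflow description format or APIs, whose specification only started at this meeting. It uses Python's standard `graphlib` module (Python 3.9+) to run each function once all of its inputs are available.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task lists the tasks whose output it consumes.
WORKFLOW = {
    "stitch": {"ingest"},
    "super_resolution": {"stitch"},
    "transcode": {"super_resolution"},
    "viewport_extract": {"stitch"},
}

# Hypothetical media functions; real ones would process video, here they only tag strings.
FUNCTIONS = {
    "stitch": lambda media: f"stitched({media})",
    "super_resolution": lambda media: f"upscaled({media})",
    "transcode": lambda media: f"transcoded({media})",
    "viewport_extract": lambda media: f"viewport({media})",
}


def run_workflow(workflow, functions, source):
    """Execute every task in dependency order, feeding it the outputs of its inputs."""
    results = {"ingest": source}
    for task in TopologicalSorter(workflow).static_order():
        if task == "ingest":
            continue  # the source is provided directly, not computed
        inputs = [results[dep] for dep in sorted(workflow[task])]
        results[task] = functions[task](*inputs)
    return results


results = run_workflow(WORKFLOW, FUNCTIONS, "camera_feeds")
print(results["transcode"])  # transcoded(upscaled(stitched(camera_feeds)))
```

Keeping the workflow as data, separate from the functions, is what lets a network entity decide where each step runs; that separation is the general motivation behind describing workflows rather than hard-coding them.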

MPEG finalizes 1st edition of technical report on architectures for immersive media

At its 123rd meeting, MPEG finalized the first edition of its Technical Report (TR) on architectures for immersive media. This report constitutes the first part of the MPEG-I standard for the coded representation of immersive media and introduces the eight MPEG-I parts currently under specification in MPEG. In particular, it addresses three Degrees of Freedom (3DoF; three rotational and unlimited movements around the X, Y and Z axes (respectively pitch, yaw and roll)), 3DoF+ (3DoF with additional limited translational movements (typically, head movements) along the X, Y and Z axes), and 6DoF (3DoF with full translational movements along the X, Y and Z axes) experiences, but it mostly focuses on 3DoF. Future versions are expected to cover aspects beyond 3DoF. The report documents use cases and defines architectural views on elements that contribute to an overall immersive experience. Finally, the report also includes quality considerations for immersive services and introduces minimum requirements as well as objectives for a high-quality immersive media experience.

MPEG releases software for MPEG-I visual 3DoF+ objective quality assessment

MPEG-I visual is an activity that addresses the specific requirements of immersive visual media for six degrees of freedom virtual walkthroughs with correct motion parallax within a bounded volume (3DoF+). MPEG-I visual covers application scenarios from 3DoF+ with slight body and head movements in a sitting position to 6DoF allowing some walking steps from a central position. At the 123rd MPEG meeting, important progress was made in software development. A new Reference View Synthesizer (RVS 2.0) has been released for 3DoF+, which can synthesize virtual viewpoints from an unlimited number of input views. RVS integrates code bases from Université Libre de Bruxelles and Philips, who acted as software coordinator. A Weighted-to-Spherically-uniform PSNR (WS-PSNR) software utility, essential to 3DoF+ and 6DoF activities, has been developed by Zhejiang University. WS-PSNR is a full-reference objective quality metric for all flavors of omnidirectional video. RVS and WS-PSNR are essential software tools for the upcoming Call for Proposals on 3DoF+, expected to be released at the 124th MPEG meeting in October 2018 (Macau, CN).
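In its common formulation for the equirectangular projection (ERP), WS-PSNR weights each pixel's squared error by the area that pixel covers on the sphere, which for ERP is the cosine of the row's latitude: rows near the poles are heavily oversampled and therefore count less. Below is a minimal pure-Python sketch, assuming 8-bit ERP luma planes of equal size passed as lists of rows; the function name is hypothetical and this is not the software released by MPEG.

```python
import math


def ws_psnr_erp(ref, test, max_value=255.0):
    """WS-PSNR between two equally sized ERP planes given as lists of rows."""
    height = len(ref)
    weighted_sq_err = 0.0
    weight_sum = 0.0
    for j in range(height):
        # Weight of row j: cosine of the latitude at the centre of that row.
        w = math.cos((j + 0.5 - height / 2) * math.pi / height)
        for r, t in zip(ref[j], test[j]):
            weighted_sq_err += w * (r - t) ** 2
            weight_sum += w
    ws_mse = weighted_sq_err / weight_sum
    if ws_mse == 0:
        return math.inf  # identical planes
    return 10 * math.log10(max_value ** 2 / ws_mse)


# A uniform error of 1 everywhere gives the same value as plain PSNR.
ref = [[128] * 8 for _ in range(4)]
test = [[129] * 8 for _ in range(4)]
print(round(ws_psnr_erp(ref, test), 2))  # 48.13
```

The same error placed in a polar row instead of an equatorial row yields a higher WS-PSNR, reflecting that it affects a smaller area of the actual viewing sphere.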

MPEG enhances ISO Base Media File Format (ISOBMFF) with new features

At the 123rd MPEG meeting, a couple of new amendments related to ISOBMFF reached the first milestone. Amendment 2 to ISO/IEC 14496-12 6th edition will add the option of relative addressing as an alternative to offset addressing, which can simplify the handling of files in some environments and workflows, and will allow the creation of derived visual tracks using items and samples in other tracks with some transformation, for example rotation. Another amendment that reached its first milestone is the first amendment to ISO/IEC 23001-7 3rd edition. It will allow the use of multiple keys for a single sample and the scrambling of some parts of AVC or HEVC video bitstreams without breaking conformance for existing decoders. That is, the bitstream will remain decodable by existing decoders, but some parts of the video will be scrambled. These amendments are expected to reach the final milestone in Q3 2019.

What else happened at #MPEG123?

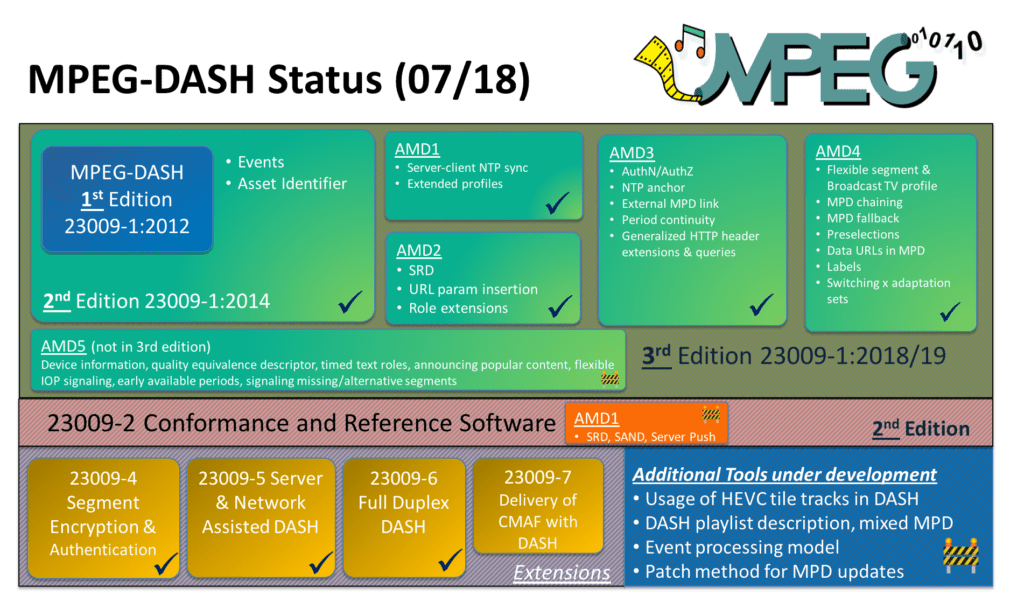

- The MPEG-DASH 3rd edition is finally available as an output document (N17813; only available to MPEG members), combining the 2nd edition, four amendments, and two corrigenda. We expect final publication later this year or early next year.

- A new DASH amendment and new corrigenda items are in the pipeline and should also progress to final stages sometime next year. The status of MPEG-DASH (July 2018) can be seen below.

- MPEG received a rather interesting input document related to “streaming first”, which resulted in a publicly available output document entitled “thoughts on adaptive delivery and access to immersive media”. The key idea here is to focus on streaming (first) rather than on file/encapsulation formats typically used for storage (and streaming second).

- For a couple of meetings now, MPEG has maintained a standardization roadmap highlighting recent/major MPEG standards and documenting the roadmap for the next five years. It is definitely worth keeping this in mind when defining/updating your own roadmap.

- JVET/VVC issued Working Draft 2 of Versatile Video Coding (N17732 | JVET-K1001) and Test Model 2 of Versatile Video Coding (VTM 2) (N17733 | JVET-K1002). Please note that N-documents are MPEG internal, but JVET documents are publicly accessible here: http://phenix.it-sudparis.eu/jvet/. An interesting aspect is that VTM2/WD2 should achieve more than 20% rate reduction compared to HEVC, all with reasonable complexity, and the next benchmark set (BMS) should achieve close to 30% rate reduction vs. HEVC. Further improvements are expected from (a) improved merge, intra prediction, etc., (b) decoder-side estimation with low complexity, (c) multi-hypothesis prediction and OBMC, (d) diagonal and other geometric partitioning, (e) secondary transforms, (f) new approaches to loop filtering, reconstruction, and prediction filtering (de-noising, non-local, diffusion-based, bilateral, etc.), (g) current picture referencing and palette, and (h) neural networks.

- In addition to VVC (a joint activity with VCEG), MPEG is working on two video-related exploration activities, namely (a) an enhanced quality profile of the AVC standard and (b) a low complexity enhancement video codec. Both topics will be further discussed within respective Ad-hoc Groups (AhGs), and further details are available here: http://mpeg.chiariglione.org/meetings/123.

- Finally, MPEG established an Ad-hoc Group (AhG) dedicated to the long-term planning which is also looking into application areas/domains other than media coding/representation.

- The MPEG group celebrated 30 years at the 123rd meeting in Ljubljana with custom hoodies sponsored by Bitmovin. Check it out, modeled here by the MPEG President Leonardo Chiariglione with Bitmovin’s Chief Scientist Christian Timmerer.