Adaptation based on video context, or “Per-Scene Adaptation”, reduces bandwidth consumption by lowering the streamed bitrate to the minimum required to maintain the same perceptual quality for every segment of your video.

Since Netflix began customizing its encoding ladder to optimize each title individually, the idea of “Per-Title Encoding” has become well understood. Put simply, videos with low complexity are encoded at a lower bitrate and those with higher complexity at a higher bitrate. This either saves bandwidth or improves visual quality. But what if this concept could be applied to individual scenes within a single title? In any given video, visual complexity varies from scene to scene, and these variations create an opportunity to optimize based on the content of each scene. For example, many low-complexity scenes can be streamed at a lower bitrate without any noticeable loss of visual quality, which can significantly reduce bandwidth consumption.

A Plea for Quality

Anyone working in video knows that along with fast startup and avoiding stalls (buffering), video quality is a key factor in satisfying your audience. Meeting these three requirements has been a frontline topic in video development for many years and has led to the development of a range of approaches.

A common example that illustrates these challenges is the bitrate ladder, or more specifically the bitrate/resolution combinations used for encoding and how they are defined. Based on various studies and user acceptance tests, encoding profiles are defined to provide “good” quality for every single video. But the question “Is 5800 kbps enough to deliver excellent 1080p encodes?” cannot, and should not, be answered with a simple “Yes” or “No”. Because different content types differ in complexity (e.g., cartoons vs. action movies), the encoding recipe has to be adjusted accordingly; “One-size-fits-all” bitrate ladders are not sufficient. One emerging method to deal with this is called “Per-Title Encoding”. Without going into too much detail, “Per-Title Encoding” adjusts and optimizes the encoding settings depending on the content and its encoding complexity.

Although “Per-Title Encoding” is a step in the right direction and can increase QoE or help publishers save bandwidth, we asked ourselves whether a similar approach could also be applied to every individual scene.

Per-Scene Adaptation

Although the encoding profile can be adapted to the content, even the most action-packed movie contains scenes that are less complex. So in virtually every video there are many low-complexity scenes that can potentially be streamed at a lower bitrate without decreasing quality. To enable a context-based optimization for every scene, we developed an approach where the client is aware of the video content (and its complexity) in addition to its bitrate.

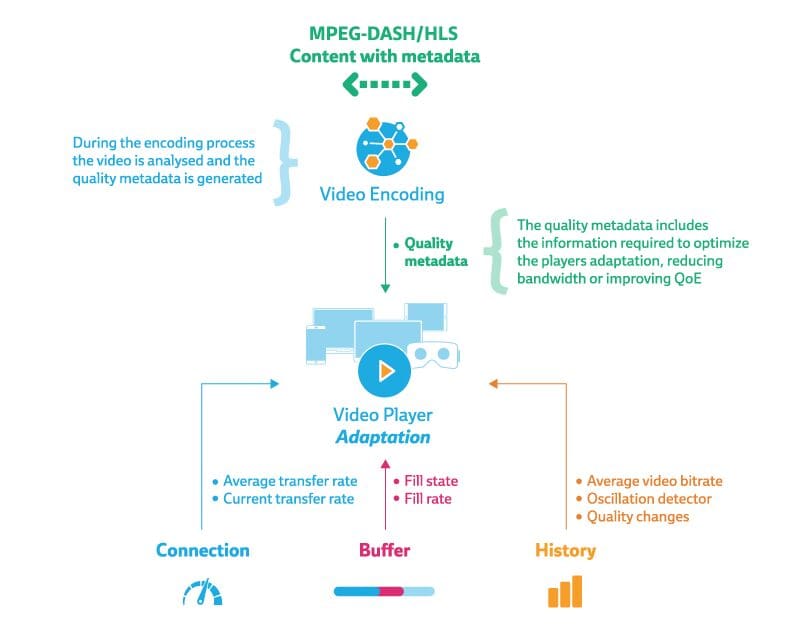

Commonly used adaptive video players utilize various information to make adaptation decisions. This might include (but is not limited to) connection data, like current throughput, buffer fill states, history information, or even user preferences.

As the graphic above shows, in addition to those traditional metrics, Per-Scene Adaptation adds quality metadata that is extracted during the encoding process. Using this information, the client and its adaptation component can make more precise and efficient decisions.

How it Works

To establish a successful Per-Scene Adaptation setup, a few steps need to be completed on the server as well as on the client side. First of all, quality information has to be extracted during encoding on a per-chunk basis. The goal of this information is to represent perceptual quality as accurately as possible. Various metrics exist for this, such as PSNR, SSIM and VMAF. We at Bitmovin use a combination of different metrics to derive a so-called “quality index”, which is encapsulated and delivered with the content itself. For delivery, different options are available, including: i) using a sidecar file, ii) adding the information to the playlist/manifest, or iii) embedding it directly in the audio/video container.
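To make the server-side step more concrete, here is a minimal sketch of how per-chunk metrics could be blended into one score and written to a sidecar file. It is an illustration under stated assumptions, not the actual Bitmovin quality index: the weights, the PSNR clamp at 50 dB, the JSON layout and the names `quality_index`, `SegmentQuality` and `build_sidecar` are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

# Hypothetical weights: how the real quality index combines metrics is not published.
WEIGHTS = {"psnr": 0.2, "ssim": 0.3, "vmaf": 0.5}


@dataclass
class SegmentQuality:
    segment: int          # index of the segment (chunk) within the stream
    bitrate_kbps: int     # rendition the segment belongs to
    quality_index: float  # blended score on a 0-100 scale


def quality_index(psnr_db: float, ssim: float, vmaf: float) -> float:
    """Blend three metrics into a single 0-100 score (illustrative only)."""
    psnr_score = min(psnr_db, 50.0) / 50.0 * 100.0  # clamp PSNR at 50 dB, scale to 0-100
    ssim_score = ssim * 100.0                        # SSIM is 0-1, scale to 0-100
    score = (
        WEIGHTS["psnr"] * psnr_score
        + WEIGHTS["ssim"] * ssim_score
        + WEIGHTS["vmaf"] * vmaf                     # VMAF is already 0-100
    )
    return round(score, 2)


def build_sidecar(entries: List[SegmentQuality]) -> str:
    """Serialize per-segment quality entries as a JSON sidecar document."""
    return json.dumps({"version": 1, "segments": [asdict(e) for e in entries]}, indent=2)


if __name__ == "__main__":
    entry = SegmentQuality(segment=0, bitrate_kbps=1000,
                           quality_index=quality_index(42.0, 0.97, 93.0))
    print(build_sidecar([entry]))
```

The same per-segment records could just as well be carried in the manifest or in the container; the sidecar variant is shown only because it is the easiest to inspect.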

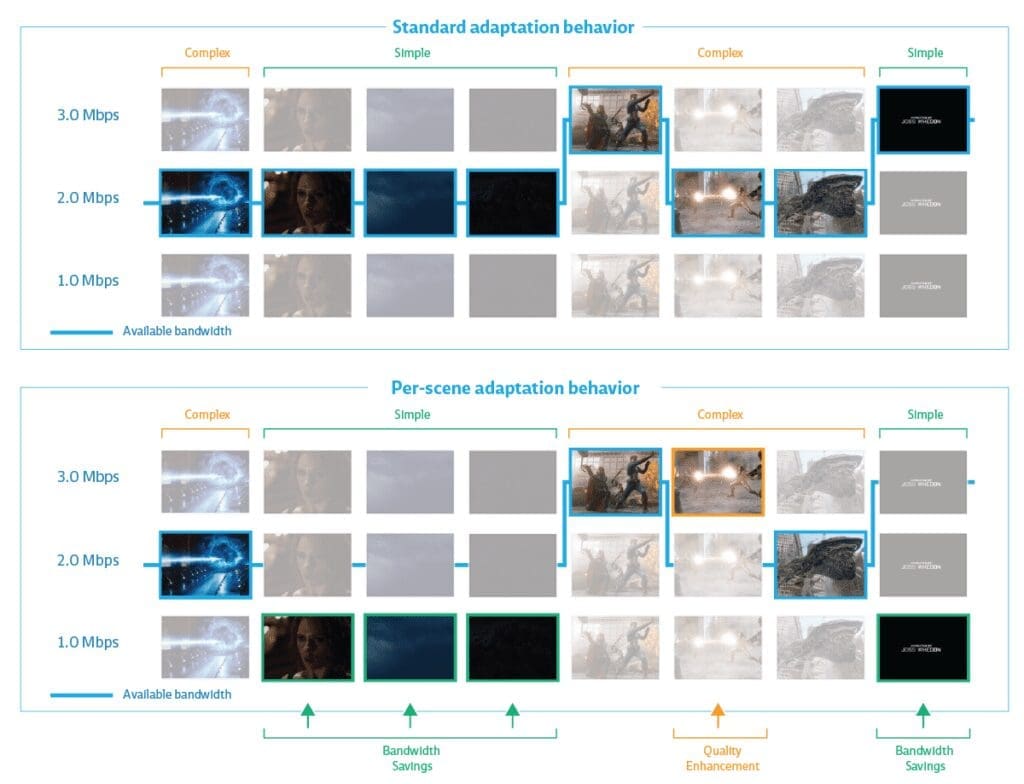

At the streaming client, the quality metadata is extracted, processed and forwarded to the adaptation logic component. With the help of this extra piece of information, the adaptation algorithm is now able to “replace” low-complexity scenes with lower-bitrate segments while maintaining the same perceptual quality. The graph below illustrates this behavior.

While a traditional algorithm (without content quality information) would maintain the 2.0 Mbps quality (see above graphic) even for “simple” scenes, the quality aware algorithm can switch down to 1.0 Mbps. This behavior allows the player to save bandwidth, without decreasing the QoE of viewers.
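The switch-down behavior described above can be sketched as a small selection function. This is a simplified illustration, not the player's actual adaptation logic: the function name `pick_bitrate`, the `quality_tolerance` parameter and the example quality values are assumptions, and a real player would also weigh buffer level, history and user preferences.

```python
from typing import Dict, List


def pick_bitrate(
    ladder_kbps: List[int],
    segment_quality: Dict[int, float],
    throughput_kbps: float,
    quality_tolerance: float = 1.0,
) -> int:
    """Choose the bitrate to request for one segment.

    segment_quality maps each ladder bitrate to the (hypothetical) quality
    index of this particular segment at that bitrate.
    """
    # Traditional choice: the highest rendition the measured throughput sustains.
    affordable = [b for b in sorted(ladder_kbps) if b <= throughput_kbps]
    if not affordable:
        return min(ladder_kbps)
    baseline = affordable[-1]

    # Quality-aware step: take the lowest rendition whose quality index for this
    # segment stays within the tolerance of the baseline rendition.
    target = segment_quality[baseline] - quality_tolerance
    for bitrate in affordable:
        if segment_quality[bitrate] >= target:
            return bitrate
    return baseline


if __name__ == "__main__":
    ladder = [500, 1000, 2000]
    simple_scene = {500: 80.0, 1000: 94.5, 2000: 95.0}   # made-up values
    complex_scene = {500: 60.0, 1000: 78.0, 2000: 92.0}  # made-up values
    print(pick_bitrate(ladder, simple_scene, throughput_kbps=2500))
    print(pick_bitrate(ladder, complex_scene, throughput_kbps=2500))
```

With these made-up values, the simple scene is served at 1000 kbps because it is nearly indistinguishable from the 2000 kbps rendition, while the complex scene stays at 2000 kbps.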

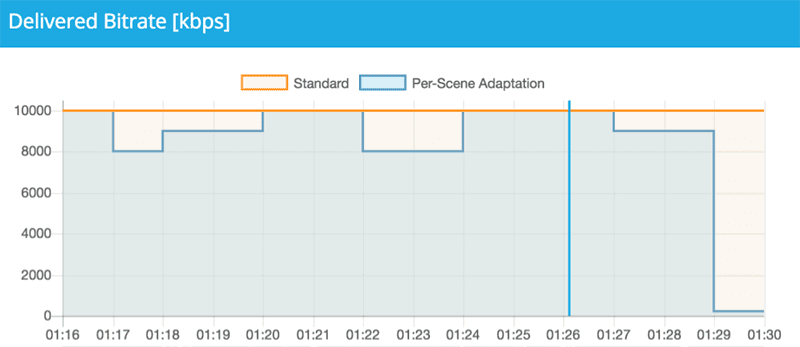

The below bandwidth graph, recorded during playback of high motion sports content, highlights this behavior even further. As you can see, the standard adaptation logic maintains a high quality level during the entire sequence and across all scenes. In contrast, using quality metadata, it is possible to make use of lower bitrate segments for certain scenes and still deliver the same perceptual quality.

Saving Potential

As already stated, the saving potential of such an adaptation approach is highly coupled to the complexity of the scenes of a video and thus the content itself. To get a better understanding of the potential savings, we performed an evaluation with three different content types: sports, nature and animation.

As a representative of the sports category, we used a 3:30-minute clip of a parkour (freerunning) competition. Characteristic of this kind of video are high-motion scenes and frequent cuts, which are complex to encode in general. In contrast, we also used a 2:08-minute shot of an abandoned town in the desert, with less camera movement and only a few cuts. The third clip was an animated video of 2:30 minutes that contains both high- and low-motion scenes.

To ensure a fair comparison, we used the same bitrate ladder for all three content types, containing 8 SD and 3 HD quality levels with bitrates from 240 kbps to 7 Mbps. The average bandwidth consumption was recorded over three consecutive runs, performed in a stable bandwidth environment. It comes as no surprise that the saving potential for the sports content is very limited, at around 3% in total (exactly 3.11%). The animated content shows decent savings of 14.06%, and for the nature video our per-scene adaptation approach saves close to 30% (27.80%) without any perceptual loss of quality.
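For readers who want to reproduce this kind of comparison, the relative saving can be computed as the difference between the two average bandwidth figures divided by the standard one. The sketch below assumes that definition; the function name `relative_saving` and the input numbers are purely illustrative and are not the measurements from our evaluation.

```python
from statistics import mean
from typing import Sequence


def relative_saving(
    standard_runs_kbps: Sequence[float],
    quality_aware_runs_kbps: Sequence[float],
) -> float:
    """Percentage of bandwidth saved by the quality-aware logic, averaged over runs."""
    standard = mean(standard_runs_kbps)
    quality_aware = mean(quality_aware_runs_kbps)
    return round((standard - quality_aware) / standard * 100.0, 2)


if __name__ == "__main__":
    # Made-up average bandwidth per run, three runs each (NOT measured data).
    print(relative_saving([4000, 4000, 4000], [3000, 3100, 2900]))
```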

Additional Benefits

Besides providing the same or better quality while consuming less bandwidth, using quality metadata for ABR decisions helps avoid stalls, as high-bitrate scenes can be predicted well in advance. Clients that are not able to read the quality information can still play back the content, of course without these benefits.
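One way to picture the stall-avoidance benefit is a simple lookahead: if the metadata tells the client how large the upcoming segments are, it can simulate the buffer before committing to a rendition. The sketch below assumes that per-segment sizes are available to the client and that throughput stays constant; `predicts_stall` and its parameters are hypothetical names, not part of any player API.

```python
from typing import List


def predicts_stall(
    upcoming_segment_kbits: List[float],
    segment_duration_s: float,
    throughput_kbps: float,
    buffer_s: float,
) -> bool:
    """Simulate back-to-back downloads and report whether the buffer would run dry."""
    for size_kbits in upcoming_segment_kbits:
        download_s = size_kbits / throughput_kbps
        buffer_s -= download_s           # playback drains the buffer while downloading
        if buffer_s < 0:
            return True                  # buffer empties before the segment arrives
        buffer_s += segment_duration_s   # the finished segment adds playable media
    return False


if __name__ == "__main__":
    # 4-second segments, 2000 kbps throughput, 4 seconds buffered (made-up values).
    calm = [4000.0, 4000.0, 8000.0]
    spike = [4000.0, 4000.0, 24000.0]    # a high-bitrate scene is coming up
    print(predicts_stall(calm, 4.0, 2000.0, 4.0))
    print(predicts_stall(spike, 4.0, 2000.0, 4.0))
```

When the lookahead predicts a stall, the adaptation logic can request a lower rendition for the demanding scene, or start building up buffer earlier, instead of reacting only after throughput measurements drop.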

Our context based approach enables the usage of Per-Scene Adaptation without restrictions on dedicated codecs and/or container formats.

Conclusion

In today’s online video streaming scenarios, we see a shift away from pure “One-size-fits-all” bitrate ladders, which do not distinguish between different types of content. Since Netflix started using “Per-Title Encoding”, the concept of optimizing the encoding recipe based on content and its encoding complexity has been gaining ground. With Per-Scene Adaptation, we go one step further and save bandwidth for every single scene. Our client-based approach takes quality metadata, gathered during the encoding process, into account to make smarter adaptation decisions. While maintaining the same perceptual quality for the viewer, it consumed up to roughly 30% less bandwidth in our tests (27.80% for the nature clip) and helps avoid stalls.
