Preface

Bitmovin is a proud member of and contributor to several organizations working to shape the future of video, none for longer than the Moving Picture Experts Group (MPEG), where several senior Bitmovin developers and I are active members. Personally, I have been a member and attendee of MPEG for 15+ years and have been documenting its progress since early 2010. Today, we’re working hard to further improve the capabilities and efficiency of the industry’s newest standards, such as VVC, LCEVC, and MIV.

The 139th MPEG Meeting – MPEG issues Call for Evidence to drive the future of computer vision and smart transportation

The past few months of research and progress in the world of video standards at MPEG (and at Bitmovin alike) have been quite busy. Although we didn’t publish a quarterly blog post for the 138th MPEG meeting, it’s worth repeating that MPEG was awarded two Technology & Engineering Emmy® Awards for its MPEG-DASH and Open Font Format standards. As expected, the latest developments in the standards space have focused on improvements to VVC and LCEVC; however, there have also been recent updates to CMAF and progress on energy-efficiency standards and immersive media codecs. The official press release of the 139th MPEG meeting can be found here and comprises the following items:

- MPEG Issues Call for Evidence for Video Coding for Machines (VCM)

- MPEG Ratifies the Third Edition of Green Metadata, a Standard for Energy-Efficient Media Consumption

- MPEG Completes the Third Edition of the Common Media Application Format (CMAF) by adding Support for 8K and High Frame Rate for High Efficiency Video Coding

- MPEG Scene Descriptions adds Support for Immersive Media Codecs

- MPEG Starts New Amendment of VSEI containing Technology for Neural Network-based Post Filtering

- MPEG Starts New Edition of Video Coding-Independent Code Points Standard

- MPEG White Paper on the Third Edition of the Common Media Application Format

In this report, I’d like to focus on VCM, Green Metadata, CMAF, VSEI, and a brief update about DASH (as usual).

Video Coding for Machines (VCM)

MPEG’s exploration work on Video Coding for Machines (VCM) aims at compressing features for machine-performed tasks such as video object detection and event analysis. With the rise of machine learning technologies and machine vision applications, the amount of video and images consumed by machines has grown rapidly. As neural networks increase in complexity, architectures such as collaborative intelligence, whereby a network is distributed across an edge device and the cloud, become advantageous. As newer network architectures are deployed across a heterogeneous population of edge devices, such architectures give system implementers flexibility. They also create a need to efficiently compress intermediate feature information for transport over wide area networks (WANs). Because feature information differs substantially from conventional image or video data, coding technologies and solutions for machine usage may need to differ from those designed for human viewing in order to achieve optimal performance.

Typical use cases include intelligent transportation, smart city technology, and intelligent content management, which incorporate machine vision tasks such as object detection, instance segmentation, and object tracking. Due to the large volume of video data, extracting and compressing features from video is essential for efficient transmission and storage. The feature compression technology solicited in this Call for Evidence (CfE) can also help in other regards, such as computational offloading and privacy protection.

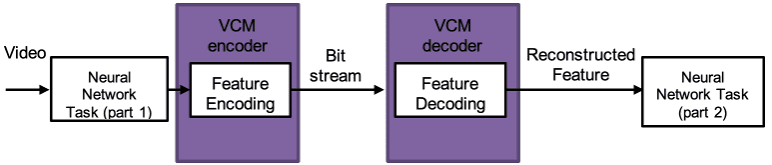

Over the last three years, MPEG has investigated potential technologies for efficiently compressing feature data for machine vision tasks and established an evaluation mechanism that includes feature anchors, rate-distortion-based metrics, and evaluation pipelines. The evaluation framework of VCM depicted below comprises neural network tasks (typically informative) at both ends as well as VCM encoder and VCM decoder, respectively. The normative part of VCM typically includes the bitstream syntax which implicitly defines the decoder whereas other parts are usually left open for industry competition and research.
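To make the split-inference chain a bit more concrete, here is a minimal Python sketch of the pipeline described above: a front-end network on the edge, a "VCM encoder" and "VCM decoder", and a task network in the cloud. Everything in it is an assumption for illustration: `front_end`, `back_end`, `vcm_encode`, and `vcm_decode` are hypothetical names, the networks are toy stand-ins, and plain uniform scalar quantization replaces whatever coding tools responses to the CfE will propose. It reports the rate in bits alongside the feature MSE and whether the task decision survives, loosely mirroring the rate versus task-performance trade-off that the rate-distortion-based metrics capture.

```python
from typing import List, Tuple


def front_end(frame: List[float]) -> List[float]:
    """Hypothetical edge-side half of a split network (a single ReLU layer)."""
    return [max(0.0, 0.5 * p - 10.0) for p in frame]


def back_end(features: List[float]) -> bool:
    """Hypothetical cloud-side task head: reports a 'detection' if the mean
    activation exceeds a fixed threshold."""
    return sum(features) / len(features) > 20.0


def vcm_encode(features: List[float], bits: int) -> Tuple[List[int], float, float]:
    """Stand-in VCM encoder: uniform scalar quantization to `bits` bits."""
    lo, hi = min(features), max(features)
    levels = (1 << bits) - 1
    step = (hi - lo) / levels if hi > lo else 1.0  # avoid a zero step size
    codes = [round((x - lo) / step) for x in features]
    return codes, lo, step


def vcm_decode(codes: List[int], lo: float, step: float) -> List[float]:
    """Stand-in VCM decoder: maps integer codes back to feature values."""
    return [lo + c * step for c in codes]


def mse(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    frame = [float(i % 256) for i in range(1024)]  # synthetic "image" samples
    feats = front_end(frame)
    for bits in (2, 4, 8):
        codes, lo, step = vcm_encode(feats, bits)
        rec = vcm_decode(codes, lo, step)
        # Payload bits plus side info (lo, step), assumed sent as two 32-bit floats.
        rate = bits * len(codes) + 64
        print(bits, rate, round(mse(feats, rec), 4), back_end(rec) == back_end(feats))
```

Lowering `bits` reduces the rate but increases feature distortion. In a real evaluation, distortion would be judged by task performance (e.g., mAP for object detection) rather than by feature MSE.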

Further details about the CfE and how interested parties can respond can be found in the official press release here.

Green Metadata

MPEG Systems has been working on Green Metadata for the last ten years to enable clients to adapt their power consumption to the complexity of the bitstream. Many modern video decoder implementations can adjust their operating voltage or clock speed, and thus their power consumption, to the required computational power. Thus, if the decoder implementation knows how the complexity of the incoming bitstream varies, it can adjust its power consumption level accordingly. This reduces overall energy use and extends video playback time on battery-powered devices.
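The adaptation loop described above can be sketched as a decoder that picks the lowest clock frequency that still meets its real-time deadline for the upcoming period. This is a hypothetical Python illustration, not the Green Metadata syntax: the frequency table, the worst-case cycle count, and the idea of signalling a single `complexity_ratio` per period are assumptions chosen to keep the example small. Running at a lower clock generally also permits a lower supply voltage, and since dynamic power scales roughly with voltage squared times frequency, that is where the energy savings come from.

```python
from typing import Sequence

# Hypothetical platform parameters (not taken from the Green Metadata standard).
AVAILABLE_FREQS_MHZ = (400, 800, 1200, 1600)
WORST_CASE_MCYCLES_PER_FRAME = 40.0  # decoding cost of the most complex frame


def select_frequency(complexity_ratio: float, fps: float,
                     freqs: Sequence[int] = AVAILABLE_FREQS_MHZ) -> int:
    """Pick the lowest clock frequency (MHz) that still decodes in real time.

    complexity_ratio: signalled complexity of the upcoming period relative to
    the worst case, in (0, 1]. This is a simplified, assumed stand-in for the
    decoder-complexity information that Green Metadata conveys.
    """
    if not 0.0 < complexity_ratio <= 1.0:
        raise ValueError("complexity_ratio must be in (0, 1]")
    # Million cycles per second required; 1 MHz == 1 million cycles per second.
    needed = complexity_ratio * WORST_CASE_MCYCLES_PER_FRAME * fps
    for f in sorted(freqs):
        if f >= needed:
            return f
    return max(freqs)  # deadline cannot be met; run at the highest clock


if __name__ == "__main__":
    for ratio in (0.25, 0.5, 1.0):
        print(ratio, select_frequency(ratio, fps=30))
```

For example, with these made-up numbers, a period signalled at a quarter of the worst-case complexity at 30 fps needs 300 million cycles per second, so the sketch selects 400 MHz instead of running at 1600 MHz.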

The third edition enables support for Versatile Video Coding (VVC, ISO/IEC 23090-3, a.k.a. ITU-T H.266) encoded bitstreams and enhances the capability of this standard for real-time communication applications and services. While finalizing the support of VVC, MPEG Systems has also started the development of a new amendment to the Green Metadata standard, adding the support of Essential Video Coding (EVC, ISO/IEC 23094-1) encoded bitstreams.

Making video coding and systems sustainable and environmentally friendly will become a major issue in the years to come, especially as more and more video services become available. However, this requires a holistic approach that considers all entities from production to consumption, and Bitmovin is committed to contributing its share to these efforts.

Third Edition of Common Media Application Format (CMAF)

The third edition of CMAF adds two new media profiles for High Efficiency Video Coding (HEVC, ISO/IEC 23008-2, a.k.a. ITU-T H.265), namely for (i) 8K and (ii) High Frame Rate (HFR). Regarding the former, a media profile supporting 8K resolution video encoded with HEVC (Main 10 profile, Main Tier, with 10 bits per colour component) has been added to the list of CMAF media profiles for HEVC. It is branded as ‘c8k0’ and supports videos with up to 7680×4320 pixels (8K) and up to 60 frames per second. Regarding the latter, another media profile, branded as ‘c8k1’, has been added; it supports HEVC-encoded video with up to 8K resolution and up to 120 frames per second. Finally, support for chroma location indication has been added to the third edition of CMAF.
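As a small worked example of the two new profiles, the following Python sketch picks the more constrained of the two brands whose resolution and frame-rate bounds (as stated above) cover a given stream, and computes the luma sample rate, which shows that ‘c8k1’ must handle twice the sample throughput of ‘c8k0’. It is a simplification: `pick_8k_brand` is a hypothetical helper, and it ignores the HEVC profile/tier/level, the bit depth, and the other CMAF HEVC media profiles that a lower-resolution stream would normally use.

```python
from typing import Optional

# Bounds of the two HEVC media profiles added in the third edition, as described
# above. Profile/tier/level and bit-depth constraints are deliberately omitted.
NEW_HEVC_8K_PROFILES = (
    # (brand, max width, max height, max frames per second)
    ("c8k0", 7680, 4320, 60.0),
    ("c8k1", 7680, 4320, 120.0),
)


def pick_8k_brand(width: int, height: int, fps: float) -> Optional[str]:
    """Return the first (most constrained) new brand whose bounds cover the stream."""
    for brand, max_w, max_h, max_fps in NEW_HEVC_8K_PROFILES:
        if width <= max_w and height <= max_h and fps <= max_fps:
            return brand
    return None  # outside both profiles


def luma_sample_rate(width: int, height: int, fps: float) -> float:
    """Luma samples per second the decoder has to produce."""
    return width * height * fps


if __name__ == "__main__":
    for fps in (50, 60, 100, 120):
        print(fps, pick_8k_brand(7680, 4320, fps), luma_sample_rate(7680, 4320, fps))
```

At 7680×4320, 60 fps corresponds to about 1.99 billion luma samples per second, and 120 fps to about 3.98 billion.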

CMAF is an integral part of video streaming systems and a key enabler for (live) low-latency streaming. Bitmovin and its co-funded research lab ATHENA have contributed significantly to enabling (live) low-latency streaming use cases, through our joint solution with Akamai for chunked CMAF low-latency delivery as well as through research projects exploring the challenges of real-world deployments and the best methods to optimize those implementations.

New Amendment for Versatile Supplemental Enhancement Information (VSEI) containing Technology for Neural Network-based Post Filtering

At the 139th MPEG meeting, the MPEG Joint Video Experts Team with ITU-T SG 16 (WG 5; JVET) issued a Committee Draft Amendment (CDAM) text for the Versatile Supplemental Enhancement Information (VSEI) standard (ISO/IEC 23002-7, a.k.a. ITU-T H.274). In addition to an SEI message for shutter interval indication, which is already known from its specification in Advanced Video Coding (AVC, ISO/IEC 14496-10, a.k.a. ITU-T H.264) and High Efficiency Video Coding (HEVC, ISO/IEC 23008-2, a.k.a. ITU-T H.265), and a new indicator for subsampling phase, which is relevant for variable-resolution video streaming, the new amendment contains two Supplemental Enhancement Information (SEI) messages for describing and activating neural network-based post filters in video bitstreams. Such post filters could be used, for example, to reduce coding noise, to perform upsampling or denoising, or to improve colour. The description of the neural network architecture itself is based on MPEG’s neural network coding standard (ISO/IEC 15938-17). Results from an exploration experiment have shown that neural network-based post filters can deliver better performance than conventional filtering methods. Processes for invoking these new post-processing filters have already been tested in a software framework and will be made available in an upcoming version of the Versatile Video Coding (VVC, ISO/IEC 23090-3, a.k.a. ITU-T H.266) reference software (ISO/IEC 23090-16, a.k.a. ITU-T H.266.2).
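The split between describing a post filter and activating it can be illustrated with a small receiver-side state machine. In this hypothetical Python sketch, `FilterDescription` and `FilterActivation` stand in for the two SEI messages (in the VSEI drafts they are referred to as the neural-network post-filter characteristics and activation SEI messages), but their fields, the rule that an activation persists until replaced, and the 3-tap smoothing used instead of an actual neural network are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Picture = List[float]  # toy "picture": a flat list of sample values


@dataclass
class FilterDescription:
    """Hypothetical stand-in for the SEI message that describes a post filter."""
    filter_id: int
    purpose: str  # e.g. "denoising", "upsampling"
    apply: Callable[[Picture], Picture]  # placeholder for the coded neural network


@dataclass
class FilterActivation:
    """Hypothetical stand-in for the SEI message that activates a described filter."""
    target_id: int


@dataclass
class PostProcessor:
    described: Dict[int, FilterDescription] = field(default_factory=dict)
    active_id: Optional[int] = None  # assumption: activation persists until replaced

    def on_description(self, msg: FilterDescription) -> None:
        # Store (or update) the filter so that it can be activated later.
        self.described[msg.filter_id] = msg

    def on_activation(self, msg: FilterActivation) -> None:
        # Ignore activations that reference a filter that was never described.
        if msg.target_id in self.described:
            self.active_id = msg.target_id

    def output(self, decoded: Picture) -> Picture:
        # Without an active filter, the decoded picture is output unchanged.
        if self.active_id is None:
            return decoded
        return self.described[self.active_id].apply(decoded)


def smooth3(pic: Picture) -> Picture:
    """Toy 3-tap smoothing filter standing in for a denoising network."""
    n = len(pic)
    return [(pic[max(i - 1, 0)] + pic[i] + pic[min(i + 1, n - 1)]) / 3 for i in range(n)]


if __name__ == "__main__":
    pp = PostProcessor()
    pp.on_description(FilterDescription(1, "denoising", smooth3))
    pp.on_activation(FilterActivation(1))
    print(pp.output([0.0, 3.0, 0.0]))  # [1.0, 1.0, 1.0]
```

One plausible benefit of this separation is that a potentially large filter description can be sent once, while a tiny activation message switches it on where it helps.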

Neural network-based video processing (incl. coding) is gaining momentum, and end-user devices are becoming increasingly capable of such complex operations. Bitmovin and its co-funded research lab ATHENA have been researching such options and recently proposed LiDeR, a lightweight dense residual network for video super-resolution on mobile devices that can compete with other state-of-the-art neural networks while executing ~300% faster.

The latest MPEG-DASH Update

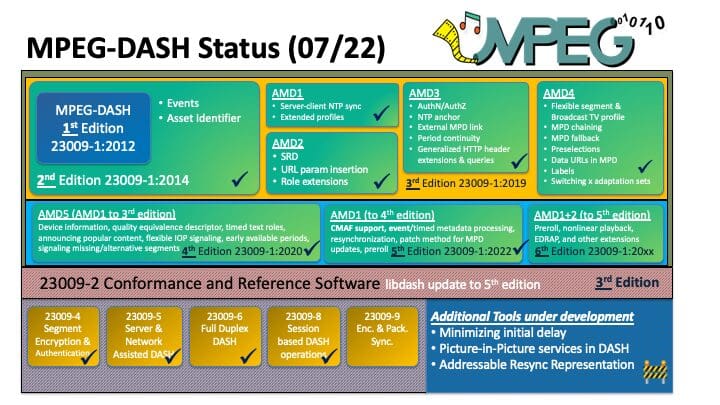

Finally, I’d like to provide a brief update on MPEG-DASH! At the 139th MPEG meeting, MPEG Systems issued a new working draft related to Extended Dependent Random Access Point (EDRAP) streaming and other extensions which it will be further discussed during the Ad-hoc Group (AhG) period (please join the dash email list for further details/announcements). Furthermore, Defects under Investigation (DuI) and Technologies under Consideration (TuC) have been updated. Finally, a new part has been added (ISO/IEC 23009-9) which is called encoder and packager synchronization for which also a working draft has been produced. Publicly available documents (if any) can be found here.

An updated overview of DASH standards/features can be found in the Figure below.

The next meeting will be face-to-face in Mainz, Germany from October 24-28, 2022. Further details can be found here.

Click here for more information about MPEG meetings and their developments.

Have any questions about the formats and standards described above? Do you think MPEG is taking the first step toward enabling Skynet and Terminators by advancing video coding for machines? 🦾 Check out Bitmovin’s Video Developer Community and let us know your thoughts.

Looking for more info on streaming formats and codecs? Here are some useful resources:

- [E-Book] Ultimate Guide to Container Formats

- [Blog] Live Low Latency Streaming Tech Deep Dive

- [Guide] Practical Guide to HDR