Preface

Bitmovin isn’t the only organization whose sole purpose is to shape the future of video: several senior developers at Bitmovin and I are active members of the Moving Picture Experts Group (MPEG). Personally, I have been a member and attendee of MPEG for more than 15 years and have been documenting its progress since early 2010. Today, we’re working hard to further improve the capabilities and efficiency of the industry’s newest standards, such as VVC and MIV.

The 135th MPEG Meeting

The 135th MPEG Meeting was defined by the development of truly “future-like” video experiences. As we gathered for (hopefully) the second-to-last time in a virtual-only setting ahead of our next meeting in late October, this group of video experts came together to improve what was historically considered the future of virtual content: immersive video experiences. As the week came to a close, we had made progress on two primary initiatives:

- Helping bring VVC to market

- Creating a standardized definition for how immersive video experiences (such as multi-view) should be handled during transmission.

As a result, the working group made significant progress in testing to verify the effectiveness of VVC, and MPEG Immersive Video (MIV) was officially moved into the final stage before its approval.

The official press release can be found here and comprises the following items:

- MPEG Video Coding promotes MPEG Immersive Video (MIV) to the FDIS stage

- Verification tests for more application cases of Versatile Video Coding (VVC)

- MPEG Systems reaches the first milestone for Video Decoding Interface for Immersive Media

- MPEG Systems further enhances the extensibility and flexibility of Network-based Media Processing

- MPEG Systems completes support of Versatile Video Coding and Essential Video Coding in High-Efficiency Image File Format

- Two MPEG White Papers:

  - Versatile Video Coding (VVC)

  - MPEG-G and its application of regulation and privacy

In this report, I’d like to focus on MIV and VVC, including systems-related aspects, and give a brief update on DASH (as usual).

MPEG Immersive Video (MIV)

At the 135th MPEG meeting, the MPEG Video Coding subgroup promoted the MPEG Immersive Video (MIV) standard to the Final Draft International Standard (FDIS) stage. MIV was developed to support the compression of immersive video content in which multiple real or virtual cameras capture a real or virtual 3D scene. The standard enables storage and distribution of immersive video content over existing and future networks for playback with six degrees of freedom (6DoF) of view position and orientation.

From a technical point of view, MIV is a flexible standard for multiview video with depth (MVD) that leverages the strong hardware support for commonly used video codecs to code volumetric video. Each view may use one of three projection formats: (i) equirectangular, (ii) perspective, or (iii) orthographic. By pruning redundant view content and packing the remainder into atlases, MIV can achieve bit rates of around 25 Mb/s at a pixel rate equivalent to HEVC Level 5.2.
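To make the pixel-rate comparison concrete, here is a minimal Python sketch that sums the luma sample rate of all coded video components (for example, a texture atlas and a geometry atlas) and compares the total against the HEVC Level 5.2 limit. The limit of 1,069,547,520 luma samples per second is the MaxLumaSr value from the HEVC level table, not a number stated in the MIV text, and the real MIV profile and level constraints are more detailed than a single sum. The class and function names and the atlas sizes are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Maximum luma sample rate (samples/s) for HEVC Level 5.2 (MaxLumaSr in the
# HEVC level limits table). Used here only as a reference budget.
HEVC_LEVEL_5_2_MAX_LUMA_SR = 1_069_547_520


@dataclass
class VideoComponent:
    """One coded video stream, e.g. a texture or geometry (depth) atlas (hypothetical)."""
    name: str
    width: int
    height: int
    fps: float

    def luma_sample_rate(self) -> float:
        # Luma samples per second for this component.
        return self.width * self.height * self.fps


def total_pixel_rate(components: list[VideoComponent]) -> float:
    """Aggregate luma sample rate across all coded components."""
    return sum(c.luma_sample_rate() for c in components)


def fits_level_5_2(components: list[VideoComponent]) -> bool:
    """True if the aggregate pixel rate stays within the Level 5.2 budget."""
    return total_pixel_rate(components) <= HEVC_LEVEL_5_2_MAX_LUMA_SR


if __name__ == "__main__":
    # Hypothetical atlas configuration, for illustration only.
    atlases = [
        VideoComponent("texture", 4096, 2176, 30),
        VideoComponent("geometry", 2048, 1088, 30),
    ]
    rate = total_pixel_rate(atlases)
    print(f"{rate:,.0f} samples/s, fits Level 5.2: {fits_level_5_2(atlases)}")
```

With these example values, the two atlases total 334,233,600 luma samples per second, well inside the budget; a texture atlas of 4096x2176 at 120 fps alone would use the entire Level 5.2 budget.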

The MIV standard is designed as a set of extensions and profile restrictions for the Visual Volumetric Video-based Coding (V3C) standard (ISO/IEC 23090-5). The main body of this standard is shared between MIV and the Video-based Point Cloud Coding (V-PCC) standard (ISO/IEC 23090-5 Annex H), and it may also be used by other MPEG-I volumetric codecs under development. The carriage of MIV is specified in the Carriage of V3C Data standard (ISO/IEC 23090-10).

The test model and objective metrics are publicly available at https://gitlab.com/mpeg-i-visual.

At the same time, MPEG Systems has begun developing the Video Decoding Interface for Immersive Media (VDI) standard (ISO/IEC 23090-13), which specifies the input and output interfaces of video decoders to allow more flexible use of video decoder resources in such applications. At the 135th MPEG meeting, MPEG Systems reached the first formal milestone for ISO/IEC 23090-13 by promoting the text to Committee Draft ballot status. The VDI standard allows video bitstreams to be adapted dynamically so that the decoded output pictures can be produced by fewer actual video decoders than there are elementary video streams to decode. In other cases, virtual instances of video decoders can be associated with only those portions of elementary streams that need to be decoded. With this standard, the resource requirements of a platform running multiple virtual video decoder instances can be further optimized by considering which decoded video regions will actually be presented to users, rather than only the number of video elementary streams in use.
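The idea of serving many elementary streams with fewer decoders can be illustrated with a small Python sketch. It is not the VDI interface, which is still at Committee Draft stage and is not reproduced here. Instead, it assumes hypothetical `Region` objects, each an independently decodable part of a stream with a known decoding load and a visibility flag, and it uses a greedy first-fit-decreasing bin-packing heuristic (our own choice) to assign only the visible regions to as few decoder instances as a per-decoder capacity allows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Hypothetical independently decodable region of an elementary stream."""
    stream_id: str
    region_id: int
    sample_rate: int  # decoding load, e.g. luma samples per second
    visible: bool     # whether the region is presented to the user


def assign_to_decoders(regions: list[Region], decoder_capacity: int) -> list[list[Region]]:
    """Greedy first-fit-decreasing packing of visible regions into decoder instances.

    Each inner list is one (virtual) decoder instance whose summed load does
    not exceed decoder_capacity. Regions that are not visible are skipped.
    """
    needed = sorted((r for r in regions if r.visible),
                    key=lambda r: r.sample_rate, reverse=True)
    decoders: list[list[Region]] = []
    loads: list[int] = []
    for region in needed:
        if region.sample_rate > decoder_capacity:
            raise ValueError(f"{region} exceeds a single decoder's capacity")
        # Place the region in the first decoder that still has room.
        for i, load in enumerate(loads):
            if load + region.sample_rate <= decoder_capacity:
                decoders[i].append(region)
                loads[i] += region.sample_rate
                break
        else:
            # No existing decoder has room: open a new instance.
            decoders.append([region])
            loads.append(region.sample_rate)
    return decoders


if __name__ == "__main__":
    capacity = 400  # arbitrary units, hypothetical
    regions = [
        Region("A", 0, 100, True), Region("A", 1, 100, True),
        Region("B", 0, 100, True), Region("B", 1, 100, False),
        Region("C", 0, 100, False), Region("C", 1, 100, True),
        Region("D", 0, 100, False), Region("D", 1, 100, False),
    ]
    decoders = assign_to_decoders(regions, capacity)
    streams = {r.stream_id for r in regions}
    print(f"{len(streams)} elementary streams -> {len(decoders)} decoder instance(s)")
```

In the example, only four of the eight regions are visible, so a single decoder instance covers four elementary streams. First-fit-decreasing is simple and usually close to optimal, but it is only a heuristic; a real platform would also have to respect whatever constraints VDI itself defines.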

Immersive media applications and services offering various degrees of freedom are becoming more and more important. The Quality of Experience (QoE) for such applications is defined in a QUALINET white paper. Bitmovin actively supports application-oriented basic research in the context of the Christian Doppler Laboratory ATHENA, e.g., Objective and Subjective QoE Evaluation for Adaptive Point Cloud Streaming, From Capturing to Rendering: Volumetric Media Delivery With Six Degrees of Freedom, and SLFC: Scalable Light Field Coding.

Versatile Video Coding (VVC) updates

The third round of verification testing for Versatile Video Coding (VVC) has been completed. This includes the testing of High Dynamic Range (HDR) content of 4K ultra-high-definition (UHD) resolution using the Hybrid Log-Gamma (HLG) and Perceptual Quantization (PQ) video formats. The test was conducted using state-of-the-art high-quality consumer displays, emulating an internet streaming-type scenario.

On average, VVC achieved a bit rate reduction of approximately 50% compared to High Efficiency Video Coding (HEVC).

Additionally, the ISO/IEC 23008-12 Image File Format has been amended to support images coded using Versatile Video Coding (VVC) and Essential Video Coding (EVC).

The VVC verification tests confirm a bit rate reduction of approximately 50% compared to its predecessor, HEVC, which makes VVC a promising candidate for future deployments. At Bitmovin, we have successfully integrated current implementations of the VVC standard, and in terms of licensing, there seems to be light at the end of the tunnel thanks to the recent announcement by Access Advance.
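As a back-of-the-envelope illustration of what an average 50% reduction could mean for a streaming service, the following Python sketch scales a hypothetical HEVC bitrate ladder and estimates the saving in delivered traffic. It assumes that the average saving from the verification tests applies uniformly to every rung, which is a simplification: actual savings depend on content, encoder implementation, and quality target. The ladder values, viewing hours, and function names are made up for illustration.

```python
# Average bit rate reduction of VVC vs. HEVC reported by the MPEG verification
# tests (about 50%). Assumption: applied uniformly, although real savings vary.
AVG_VVC_SAVING = 0.50


def estimate_vvc_ladder(hevc_kbps: list[int], saving: float = AVG_VVC_SAVING) -> list[int]:
    """Scale each HEVC rung by (1 - saving) to get a rough VVC target bitrate."""
    if not 0.0 <= saving < 1.0:
        raise ValueError("saving must be in [0, 1)")
    return [round(b * (1.0 - saving)) for b in hevc_kbps]


def egress_saving_tb(avg_hevc_kbps: float, viewing_hours: float,
                     saving: float = AVG_VVC_SAVING) -> float:
    """Delivered-traffic saving in terabytes for the given viewing hours."""
    bits = avg_hevc_kbps * 1_000 * 3_600 * viewing_hours
    return bits * saving / 8 / 1e12


if __name__ == "__main__":
    hevc_ladder = [16_000, 8_000, 4_500, 2_500]  # hypothetical rungs in kbps
    print("VVC ladder (kbps):", estimate_vvc_ladder(hevc_ladder))
    # Hypothetical: average 6 Mb/s HEVC stream, one million viewing hours.
    print(f"Estimated saving: {egress_saving_tb(6_000, 1_000_000):.0f} TB")
```

With these inputs, the ladder becomes 8,000 / 4,000 / 2,250 / 1,250 kbps and the estimated saving is about 1,350 TB; in practice, per-title encoding and content-dependent results would shift these numbers.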

The latest MPEG-DASH Update

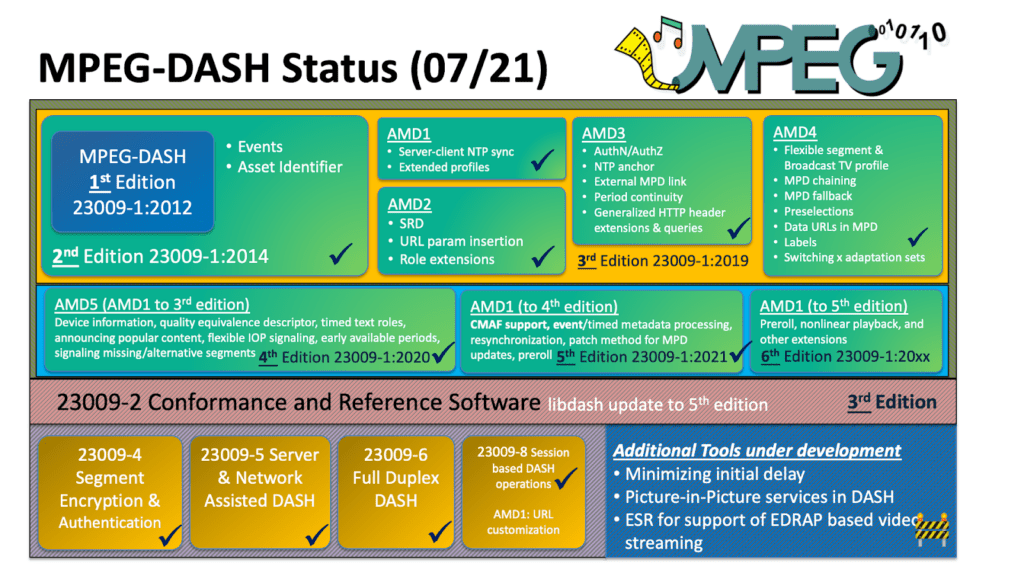

Finally, I’d like to provide a brief update on MPEG-DASH! At the 135th MPEG meeting, MPEG Systems issued a draft amendment to the core MPEG-DASH specification (i.e., ISO/IEC 23009-1) that further improves Preroll, which has been renamed Preperiod; this feature will be discussed further during the Ad-hoc Group (AhG) period (please join the DASH email list for further details and announcements). Additionally, this amendment includes some minor improvements for nonlinear playback. The so-called Technologies under Consideration (TuC) document comprises new proposals that have not yet reached consensus for promotion to any official standards documents (e.g., amendments to existing DASH standards or new parts). Among others, proposals for minimizing initial delay are currently under discussion. Finally, libdash has been updated to support the MPEG-DASH schema of the 5th edition.

An updated overview of DASH standards and features can be found in the figure above.

The next meeting will again be an online meeting in October 2021, but MPEG is aiming to meet in person again in January 2022 (if possible).
