Introduction

At a recent internal hackathon, two of Bitmovin’s software engineers, Myriam Gantner and Thomas Sablattnig, explored whether AI could be used to process the large volume of data captured by Bitmovin Analytics into concise summaries and recommendations. The project was a success and is now being developed into a feature that will be available to Bitmovin customers. Keep reading to learn more about the new Analytics AI Session Interpreter.

Background and motivation

Bitmovin Analytics allows video developers and technicians to track, monitor and analyze their video streams in real time. It provides insights into user behavior, video player performance and much more. While it’s a valuable companion for Bitmovin’s Encoding and Player products, it can also stand alone and be used with several open source and commercial video players. It has a dedicated dashboard for visual interpretation, but can also export data for your own custom dashboards in products like Grafana or Looker Studio.

Bitmovin Analytics collects a ton of data about the behavior and experience your customers have when watching videos, from simple metrics like play and pause duration to more technical information like video bitrate, DRM license exchange, adaptive bitrate switching and detailed logs around errors. There is a lot of information provided for both individual viewers and overall performance, so analysis can be time-consuming and sometimes overwhelming, especially if you don’t have a lot of technical or background knowledge about the entire video streaming workflow.

What if we could use AI to process all the data and cut through the noise? Could we make it easier to get to the source of streaming problems sooner? Could we make the insights more accessible to a wider audience without technical expertise or streaming experience? Is it possible to build an analytics AI session interpreter with existing cloud resources? These are the questions we hoped to answer with this project. Keep reading to learn more about the process and results.

Using Google’s Vertex AI + PaLM 2 models

Google’s Vertex AI

Vertex AI is a managed machine learning (ML) platform that leverages Google Cloud’s infrastructure and tools for building and training ML models. It has AutoML capabilities for quick and easy deployment, but also supports custom training for more advanced users and workflows. It integrates with other Google Cloud services like BigQuery and Pub/Sub for end-to-end intelligent data processing and analytics AI workflows.

PaLM 2

PaLM 2 is Google’s next-generation large language model, announced in May 2023. It excels at reasoning tasks and can decompose a complex task into simpler subtasks. It understands nuances of human language better than previous models and can perform multilingual translations, even when idioms or ambiguous and figurative language are used.

Initial results

Once the initial workflow and connections were established, the next step was to see how smart it was out of the box. We began by feeding it the analytics data from a streaming session.

The question: “Can you please analyze this video streaming session?”

The answer:

The first event is a play event, which indicates that the user has started playing the video.

The second event is a buffering event, which indicates that the video is buffering.

The third event is a play event, which indicates that the video has finished playing.

Not so insightful. Clearly, more work was needed to get the results we were hoping for, so we began refining the prompt/question and tuning the model’s parameters.
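To make the idea of tuning model parameters a little more concrete, here is a minimal sketch of sending a prompt to a PaLM 2 text model through the Vertex AI Python SDK. The parameter values, the `ask` helper and the choice of the `text-bison` model name are assumptions for illustration; the post does not say which settings or model variant were actually used. The model call is injected as a function so the sketch can be exercised without cloud credentials.

```python
from typing import Callable

# Hypothetical starting values; the post does not state the real settings.
GENERATION_PARAMS = {
    "temperature": 0.2,         # lower values give more deterministic answers
    "max_output_tokens": 1024,  # upper bound on the length of the response
    "top_k": 40,
    "top_p": 0.8,
}


def vertex_predict(prompt: str, **params) -> str:
    """Send a prompt to a PaLM 2 text model via the Vertex AI SDK.

    Assumes google-cloud-aiplatform is installed and vertexai.init(...)
    has already been called with a project and location.
    """
    from vertexai.language_models import TextGenerationModel

    model = TextGenerationModel.from_pretrained("text-bison")
    return model.predict(prompt, **params).text


def ask(prompt: str, predict: Callable[..., str] = vertex_predict, **overrides) -> str:
    """Call the model with the default parameters plus any per-call overrides."""
    params = {**GENERATION_PARAMS, **overrides}
    return predict(prompt, **params)
```

Keeping the parameters in one place makes it easy to compare runs, for example `ask(prompt, temperature=0.7)` against the default.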

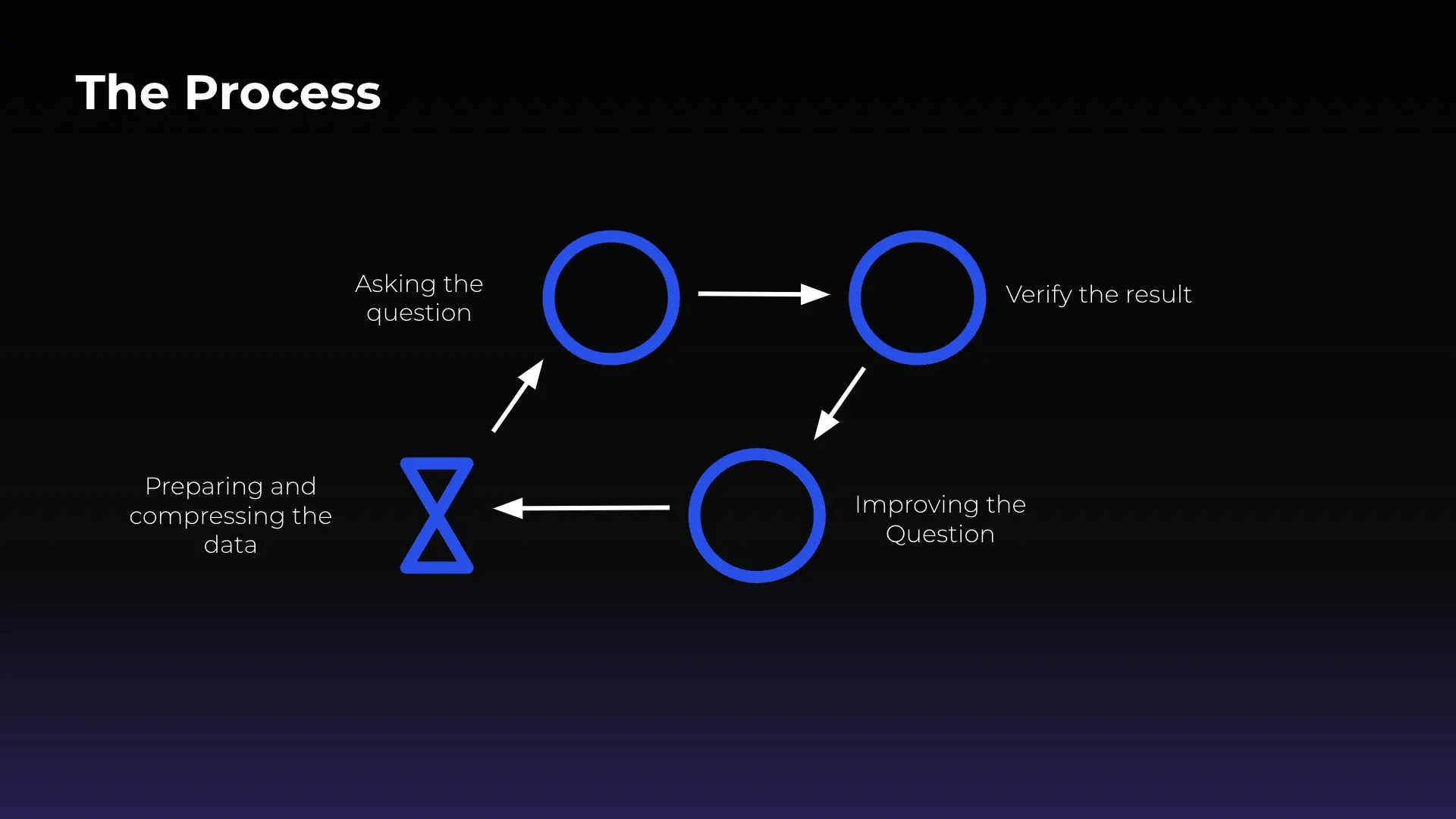

Crafting the “perfect” question

Improving the prompt to get more insightful responses was a multi-step iterative process. We asked questions and verified the accuracy of the results, leading us toward better phrasing of questions for the best outputs. This involved more clearly defining what aspects we wanted the AI to summarize and also asking it to provide recommendations for improvements. We also provided explanations of the properties that were part of the analytics session data and added context about certain metrics, including thresholds and ideal values and ranges for specific metrics (e.g. what is considered a good video startup time). Part of this included descriptions of how these various metrics would impact the viewer experience. We also learned it was better to structure the analytics data in JSON format to make it easier for the AI to interpret.

In the end, our “perfect” question grew to ~150 lines long!
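The real prompt is not shown here, but its general shape can be sketched: metric definitions with thresholds, a list of tasks, and the session data as JSON. Everything in the example below is an assumption for illustration, including the metric names, the threshold values and the wording of the tasks.

```python
import json

# Hypothetical metric names and thresholds, for illustration only.
METRIC_CONTEXT = {
    "videoStartupTimeMs": (
        "Time until the first frame is shown. "
        "Assume under 1000 ms is good and over 3000 ms is poor."
    ),
    "bufferingEventCount": (
        "Number of rebuffering interruptions. Each one pauses playback for the viewer."
    ),
    "qualityChangeCount": (
        "Number of bitrate switches. Frequent switches can point to an "
        "unstable network or ABR issues."
    ),
}

TASKS = [
    "Summarize what happened during the session.",
    "Analyze how the metrics affected the viewer experience.",
    "Recommend concrete improvements.",
]

SESSION_MARKER = "Session data (JSON):"


def build_prompt(session: dict) -> str:
    """Combine metric context, tasks and JSON session data into one prompt."""
    parts = ["You are analyzing a single video streaming session.", "", "Metric definitions:"]
    parts += [f"- {name}: {text}" for name, text in METRIC_CONTEXT.items()]
    parts += ["", "Tasks:"]
    parts += [f"{i}. {task}" for i, task in enumerate(TASKS, start=1)]
    parts += ["", SESSION_MARKER, json.dumps(session, indent=2)]
    return "\n".join(parts)
```

Putting the session data last, behind a fixed marker, keeps the instructions stable while the data changes from session to session.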

Summary, analysis, and recommended solutions

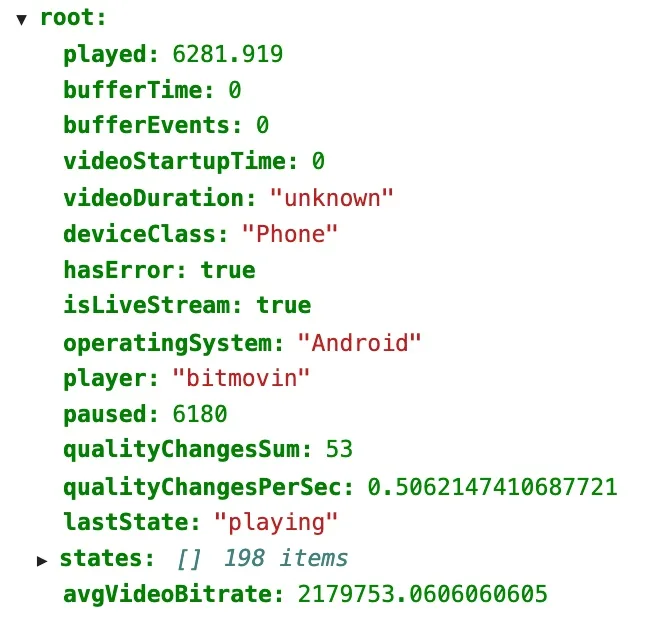

Here is an example of Analytics session data input and the more useful output we were seeing after refining the question and contextual data:

Session Data

Summarization

The user watched a live stream for 6281 seconds (1.7 hours).

The duration of the live event is unknown.

The video was not paused and there were no buffering events. However, there were 53 quality changes during the session. This could indicate that the network connection was unstable or that the ABR logic was not working properly. The average bitrate of the video was 2179753 bps. This is a relatively high bitrate, which could indicate that the video was of high quality.

Analysis

The video quality changed 53 times, which is a lot. This high number of quality changes in this session could be due to a number of factors. First, the network connection of the user may have been unstable, which could have caused the video to switch between different bitrates in order to maintain a smooth playback experience. Second, the ABR logic of the video player may not have been working properly, which could have also caused the video to switch between different bitrates unnecessarily. The lack of buffer events and buffer time in this session is a good sign. This means that the video did not experience any buffering issues, which would have been a major inconvenience for the user.

Recommendation

To improve the user experience, it would be helpful to investigate the cause of the quality changes. If the network connection is unstable, the user could try using a different network or connecting to a different location. If the ABR logic is set too aggressively, the user could try adjusting the settings. If the video is encoded inefficiently, the video could be re-encoded using a more efficient codec.

User-centric insights

Beyond the text analysis and recommendations, we experimented with other potential options for providing more accessible and concise summaries of viewing sessions. This included engagement, quality of service and quality of experience scores and a “5-star” system that aggregated all metrics into a single rating.

JSON response with scores

{"engagement": 7, "qualityOfServiceScore": 9, "qualityOfExperienceScore": 9}
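A response like the one above still needs to be checked before it is shown to anyone, since a language model can return malformed or out-of-range output. The following is a minimal sketch of that check. The `parse_scores` helper and the 0 to 10 range are assumptions; the post does not state the scale. Because models sometimes emit single-quoted dictionaries rather than strict JSON, the sketch falls back to `ast.literal_eval`.

```python
import ast
import json

EXPECTED_KEYS = ("engagement", "qualityOfServiceScore", "qualityOfExperienceScore")


def parse_scores(raw: str) -> dict:
    """Parse and validate the score object returned by the model."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # Fall back to a Python-style literal such as {'engagement': 7}.
        try:
            data = ast.literal_eval(raw)
        except (ValueError, SyntaxError) as exc:
            raise ValueError("response is not a parseable score object") from exc
    if not isinstance(data, dict):
        raise ValueError("response is not an object")

    scores = {}
    for key in EXPECTED_KEYS:
        value = data.get(key)
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        # Assumed scale: 0 to 10 inclusive.
        if not is_number or not 0 <= value <= 10:
            raise ValueError(f"missing or out-of-range score: {key}")
        scores[key] = value
    return scores
```

Rejecting bad responses outright, rather than guessing at missing values, leaves room to retry the request or skip the score display.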

5-star rating examples

RATING: 1

Explanation: The video session has a low rating because the buffering time is high. This could be due to a number of factors, such as a slow internet connection or a lack of available bandwidth. The session also has a high buffer event count, which indicates that the user experienced multiple buffering interruptions. This can be a very disruptive experience for users, as it can break the flow of the video and lead to frustration. Finally, the video startup time is also high, which could indicate that the video was not optimized for the user’s device. Overall, this video session has a number of issues that could negatively impact the user experience.

RATING: 5

Explanation: This session had a good video startup time and low buffering time. The quality change rate was also good. The only downside is that the user paused the video for a long time, which may have impacted their engagement with the content.

Audience-specific session analysis

Another potential option for responses is to tailor the information to specific personas with a variety of technical backgrounds, objectives and motivations when it comes to analytics data. You can imagine how a software developer, operations technician, product manager and sales or finance executive might want different levels of summarization and areas of focus when it comes to playback issues and how their own goals may be impacted. Just for fun, here’s an example session summary that was generated using the voice of a teenager (which may be more accurate for a teenager from the early 2000s rather than today).

“Teenager” session analysis:

“Yo, check it out. This dude watched a live stream on his phone for over an hour. That’s crazy! He must have been really into it. The video quality was pretty good, and there were no buffering issues. But then, after about 40 minutes, the video just stopped working. The dude was pissed. He tried to restart the app, but it didn’t work. He even tried to delete and reinstall the app, but that didn’t work either. So he gave up and just went to bed.

I don’t know what happened, but it’s a bummer that the video stopped working. I’m sure the dude was really enjoying it. But at least he got to watch it for a while.”
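One simple way to get audience-specific output like this is to prepend a persona instruction to the same base prompt. The sketch below assumes that approach; the persona names and instruction texts are hypothetical and are not taken from the actual prompts.

```python
# Hypothetical persona instructions, for illustration only.
PERSONA_INSTRUCTIONS = {
    "developer": "Write for a software developer. Include metric names and likely root causes.",
    "operations": "Write for an operations technician. Focus on what to check and in what order.",
    "product": "Write for a product manager. Focus on viewer experience and engagement.",
    "executive": "Write for a sales or finance executive. Keep it to three sentences, no jargon.",
}


def persona_prompt(base_prompt: str, persona: str) -> str:
    """Prefix the base prompt with the instruction for the chosen audience."""
    try:
        instruction = PERSONA_INSTRUCTIONS[persona]
    except KeyError:
        raise ValueError(f"unknown persona: {persona}") from None
    return f"{instruction}\n\n{base_prompt}"
```

Because the session data and metric context stay identical, only the framing changes between audiences.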

Coming soon: Bitmovin Analytics AI Session Interpreter

Since the initial hackathon project, we’ve continued fine-tuning the process while doing the background work for connecting our APIs to make the Analytics AI Session Interpreter a reality for Bitmovin Analytics customers. The good news is that most of the work is done and it will be publicly available soon!

We know that many companies are sensitive to having their data used by AI models, so we are ensuring:

- Customers will need to explicitly enable this feature in their dashboard. Without that permission, no data will ever be sent to AI services.

- No customer or user identifiable information will be sent to AI services.

- Only encrypted data will be sent to AI services.

- AI services will only retain data for the time needed to generate the output.
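The first two guarantees in this list can be illustrated with a small sketch: nothing leaves the system unless the feature is enabled, and identifying fields are dropped before a session is sent. The field names and the `prepare_for_ai` helper are hypothetical, and the example does not cover encryption or retention, which depend on the transport and the AI service.

```python
from typing import Optional

# Hypothetical field names; the real session schema may differ.
IDENTIFYING_FIELDS = frozenset(
    {"userId", "customUserId", "ipAddress", "licenseKey", "domain"}
)


def prepare_for_ai(session: dict, ai_enabled: bool) -> Optional[dict]:
    """Return a copy of the session that is safe to send, or None if not permitted."""
    if not ai_enabled:
        # The customer has not opted in, so no data is sent at all.
        return None
    return {key: value for key, value in session.items() if key not in IDENTIFYING_FIELDS}
```

Returning a filtered copy rather than modifying the session in place keeps the original record intact for the regular dashboard.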

Sign up today to get started with a free trial of Bitmovin and prepare for supercharging your data and insights with our Analytics AI Session Interpreter!

Related resources and links

Website: Learn more about Bitmovin Analytics

Docs: How to set up Bitmovin Analytics

Guide: Using Bitmovin Analytics with Amazon IVS

Link: Google Vertex AI